Securely Connect MongoDB to Cloud-Offered Kubernetes Clusters


Containerized applications are becoming an industry standard for virtualization. When it comes to managing those containers, Kubernetes usually comes up almost immediately.

Kubernetes is a well-known open-source system for automating the deployment, scaling, and management of containerized applications. Today, all of the major cloud providers (AWS, Google Cloud, and Azure) offer a managed Kubernetes service that makes it easy for organizations to get started with and scale their Kubernetes environments.

Not surprisingly, MongoDB Atlas also runs on all of those cloud providers, giving your modern containerized applications the best database offering. However, ease of development can lead us to overlook critical aspects, such as security and connectivity control for our cloud services.

In this article, I will guide you through properly securing the connection between your Kubernetes cloud services and MongoDB Atlas, using the recommended and robust solutions we offer.

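You will need a cloud provider account and the ability to deploy one of the following managed Kubernetes offerings:

- Amazon Elastic Kubernetes Service (EKS) on AWS

- Google Kubernetes Engine (GKE) on Google Cloud

- Azure Kubernetes Service (AKS) on Azure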

And of course, you'll need a MongoDB Atlas project where you are a project owner.

If you haven't yet set up a cluster on MongoDB Atlas, now is a great time to do so. You'll find all the instructions in this blog post. Please note that this tutorial requires an M10 or larger cluster, because network peering is not available on the free tier.

By default, Atlas connections are secured with credentials and end-to-end encryption. However, a trusted network is also a must to close the security loop between your application and the database.

No matter which cloud you choose for your Kubernetes cluster, the foundation of securing that deployment is creating its own network. Follow your cloud provider's guide to create a dedicated network, and gather its main details: names, IDs, and the subnets' Classless Inter-Domain Routing (CIDR) ranges.
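To keep the collected details in one place, the sketch below models them as a hypothetical `NetworkInfo` record. The class, its fields, and the sample values are illustrative assumptions and are not part of any cloud SDK. It uses Python's standard `ipaddress` module to flag any subnet that falls outside the network's own CIDR range.

```python
import ipaddress
from dataclasses import dataclass, field
from typing import List


@dataclass
class NetworkInfo:
    """Hypothetical record of the network details gathered in this step."""

    name: str        # e.g. the VPC/VNet name
    network_id: str  # e.g. the VPC/VNet ID shown in your cloud console
    cidr: str        # address range of the whole network
    subnet_cidrs: List[str] = field(default_factory=list)

    def subnets_outside_network(self) -> List[str]:
        """Return every subnet CIDR that is not contained in the network CIDR."""
        network = ipaddress.ip_network(self.cidr)
        return [
            subnet
            for subnet in self.subnet_cidrs
            if not ipaddress.ip_network(subnet).subnet_of(network)
        ]


if __name__ == "__main__":
    # Illustrative values only; replace them with your own network's details.
    vpc = NetworkInfo(
        name="k8s-app-network",
        network_id="example-network-id",
        cidr="10.0.0.0/16",
        subnet_cidrs=["10.0.1.0/24", "10.0.2.0/24", "10.1.0.0/24"],
    )
    print(vpc.subnets_outside_network())
```

With the sample values, only `10.1.0.0/24` is reported, because it lies outside `10.0.0.0/16`.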

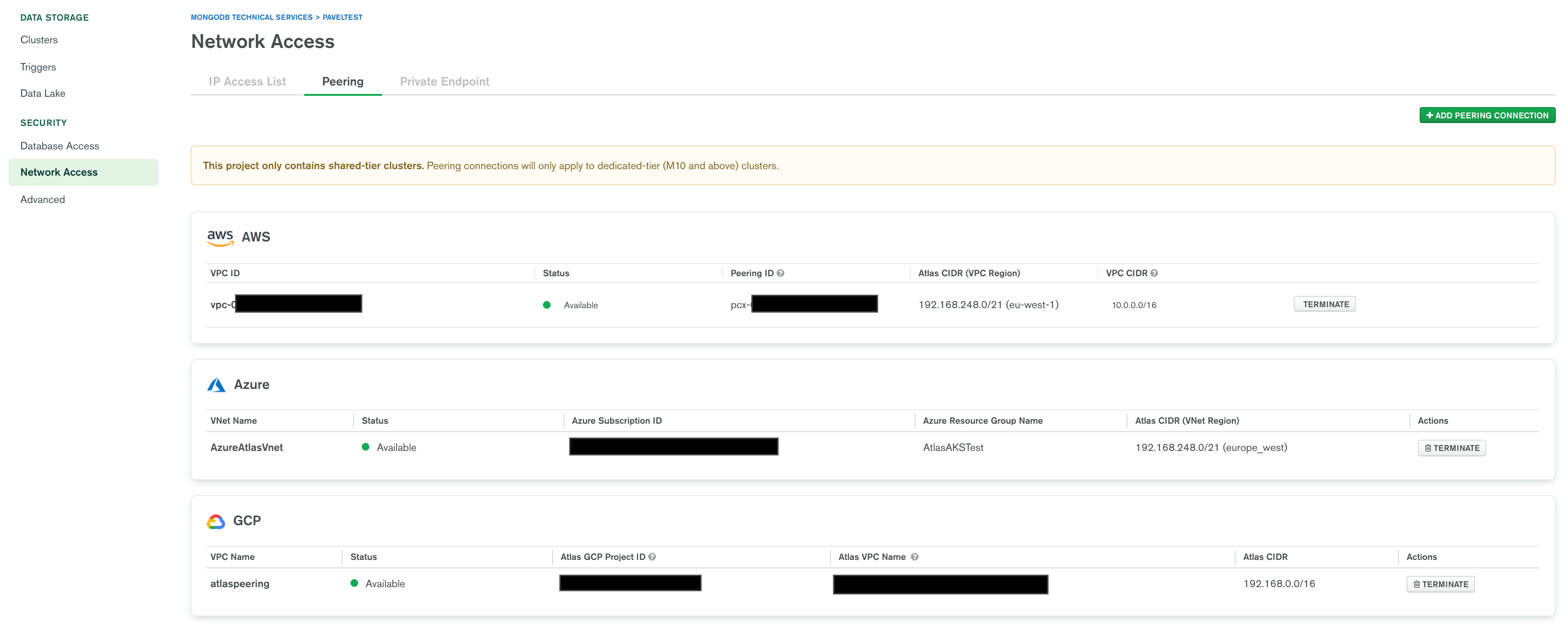

Next, we'll connect the virtual network that the Atlas region resides in to the virtual network we just created. This connectivity is what makes communication between the two networks possible. We'll then configure Atlas to allow connections from that virtual network.

This process is called setting up a network peering connection. It's significant because it allows private communication between networks that belong to two different accounts (the Atlas cloud account and your cloud account).
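Peering only works when the two networks use address ranges that don't overlap, so it's worth checking your network's CIDR against the Atlas CIDR before you start. The following is a minimal sketch. It assumes both ranges are given as IPv4 CIDR strings, and the sample values are hypothetical.

```python
import ipaddress


def can_peer(app_network_cidr: str, atlas_cidr: str) -> bool:
    """Return True if the two ranges don't overlap, a prerequisite for peering."""
    app_network = ipaddress.ip_network(app_network_cidr)
    atlas_network = ipaddress.ip_network(atlas_cidr)
    return not app_network.overlaps(atlas_network)


if __name__ == "__main__":
    # Hypothetical ranges for illustration only.
    print(can_peer("10.0.0.0/16", "192.168.248.0/21"))  # → True (no shared addresses)
    print(can_peer("10.0.0.0/16", "10.0.128.0/21"))     # → False (inside 10.0.0.0/16)
```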

I will highlight the main points for each cloud for a successful peering setup:

| AWS | GCP | Azure |
|---|---|---|

The Kubernetes clusters that we launch must be associated with the peered network. I will highlight each cloud provider's specifics.

When we launch our EKS cluster via the AWS console, we need to configure the peered VPC under the "Networking" tab.

Apply the following settings:

- VPC Name

- Relevant subnets (we recommend picking at least three availability zones)

- Choose a security group that opens ports 27015-27017 to the Atlas CIDR.

- Optionally, you can add an IP range for your pods.
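To double-check the security group, you can inspect its rules programmatically. The sketch below assumes each rule is a plain dictionary shaped like the `IpPermissions`/`IpPermissionsEgress` entries that EC2's `DescribeSecurityGroups` returns, and it reads only `IpProtocol`, `FromPort`, `ToPort`, and `IpRanges[].CidrIp`. How you fetch the rules is up to you, and the sample CIDR is hypothetical. The check reports whether every port from 27015 to 27017 is allowed for a range that contains the Atlas CIDR.

```python
import ipaddress
from typing import Dict, List

# MongoDB ports named in this tutorial: 27015 through 27017 inclusive.
ATLAS_PORTS = range(27015, 27018)


def rule_covers(rule: Dict, port: int, atlas_net: ipaddress.IPv4Network) -> bool:
    """True if a single rule allows TCP `port` for a range containing the Atlas CIDR."""
    protocol = rule.get("IpProtocol")
    if protocol == "-1":
        port_ok = True  # "-1" means all protocols and all ports
    elif protocol == "tcp":
        port_ok = rule["FromPort"] <= port <= rule["ToPort"]
    else:
        return False  # UDP, ICMP, etc. don't carry MongoDB traffic
    if not port_ok:
        return False
    # The rule's CIDR range must fully contain the Atlas CIDR.
    return any(
        atlas_net.subnet_of(ipaddress.ip_network(ip_range["CidrIp"]))
        for ip_range in rule.get("IpRanges", [])
    )


def allows_atlas_ports(rules: List[Dict], atlas_cidr: str) -> bool:
    """True if every port in ATLAS_PORTS is allowed by at least one rule."""
    atlas_net = ipaddress.ip_network(atlas_cidr)
    return all(
        any(rule_covers(rule, port, atlas_net) for rule in rules)
        for port in ATLAS_PORTS
    )


if __name__ == "__main__":
    # Hypothetical rule set and Atlas CIDR, for illustration only.
    sample_rules = [
        {
            "IpProtocol": "tcp",
            "FromPort": 27015,
            "ToPort": 27017,
            "IpRanges": [{"CidrIp": "192.168.248.0/21"}],
        }
    ]
    print(allows_atlas_ports(sample_rules, "192.168.248.0/21"))  # → True
```

The check works port by port, so a range split across several rules (for example, one rule for 27015-27016 and another for 27017) still passes.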

When we launch our GKE cluster, we need to configure the peered VPC under the "Networking" section.

Apply the following settings:

- VPC Name

- Subnet Name

- Optionally, you can add an IP range for your pods' internal network; it must not overlap with the peered CIDR.
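If you do add a pod range, one way to pick it is to scan a larger private block for the first range that doesn't collide with anything already in use. The following is a minimal sketch using the standard `ipaddress` module. The pool, prefix length, and "taken" ranges are hypothetical inputs that you would replace with your own subnet and the Atlas CIDR.

```python
import ipaddress
from typing import List, Optional


def first_free_range(pool: str, prefix: int, taken: List[str]) -> Optional[str]:
    """Return the first /prefix block inside `pool` that overlaps none of `taken`."""
    taken_nets = [ipaddress.ip_network(cidr) for cidr in taken]
    # subnets(new_prefix=...) walks the pool in address order.
    for candidate in ipaddress.ip_network(pool).subnets(new_prefix=prefix):
        if not any(candidate.overlaps(net) for net in taken_nets):
            return str(candidate)
    return None  # every candidate collides with a taken range


if __name__ == "__main__":
    # Hypothetical pool, node subnet, and Atlas CIDR.
    print(first_free_range("10.0.0.0/14", 16, ["10.0.0.0/16", "192.168.248.0/21"]))
    # → 10.1.0.0/16
```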

When we launch our AKS cluster, we need to use the same resource group as the peered VNET and configure the peered VNET as the CNI network under the advanced networking configuration on the "Networking" tab.

Apply the following settings:

- Resource Group

- VNET Name under "Virtual Network"

- The cluster subnet should be the peered subnet range.

- The other CIDRs should be ranges that don't overlap with the peered network.
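The AKS rules above boil down to two checks. The cluster subnet must sit inside the peered VNET, and the other ranges must stay clear of the peered address space. The sketch below encodes both checks. It assumes you also want to keep those other ranges clear of the Atlas CIDR. The function name and sample ranges are hypothetical.

```python
import ipaddress
from typing import List


def check_aks_layout(
    vnet_cidr: str, cluster_subnet: str, other_cidrs: List[str], atlas_cidr: str
) -> List[str]:
    """Return a list of problems found in a planned AKS address layout."""
    vnet = ipaddress.ip_network(vnet_cidr)
    atlas = ipaddress.ip_network(atlas_cidr)
    subnet = ipaddress.ip_network(cluster_subnet)
    problems = []

    # The cluster subnet should be the peered subnet, i.e. part of the peered VNET.
    if not subnet.subnet_of(vnet):
        problems.append(f"cluster subnet {subnet} is outside the peered VNET {vnet}")

    # Every other range must not overlap the peered VNET or the Atlas CIDR.
    for cidr in other_cidrs:
        net = ipaddress.ip_network(cidr)
        for peered in (vnet, atlas):
            if net.overlaps(peered):
                problems.append(f"{net} overlaps peered range {peered}")
    return problems


if __name__ == "__main__":
    # Hypothetical layout; an empty list means nothing conflicts.
    print(check_aks_layout("10.10.0.0/16", "10.10.1.0/24", ["10.0.0.0/16"], "192.168.248.0/21"))
```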

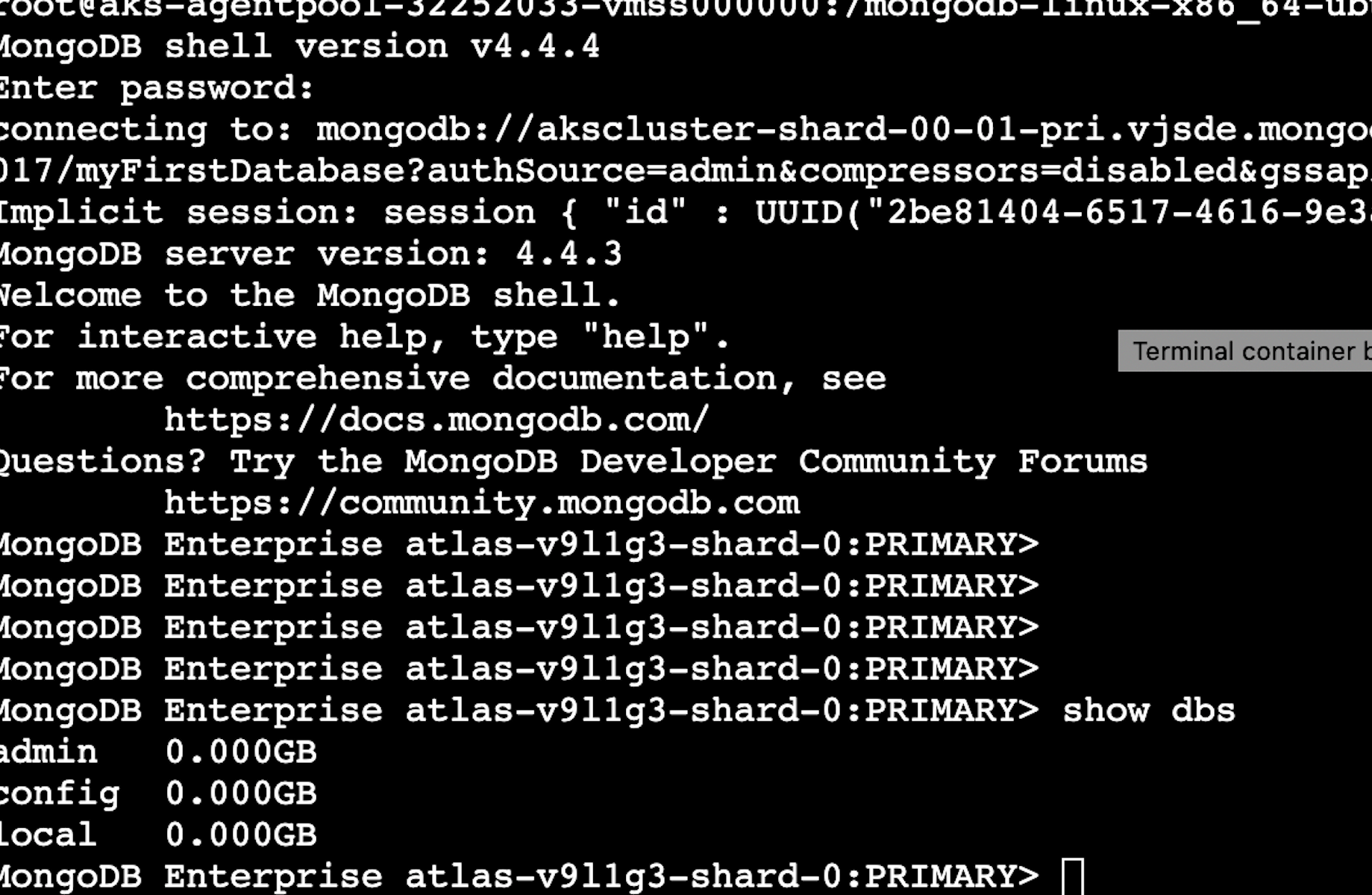

Once the cluster is up and running in your cloud provider, you can test connectivity to the peered Atlas cluster.

First, get the connection string and connection method from the Atlas cluster UI. Note that for GCP and Azure, Atlas provides private connection strings for peering, and you must use those for peered networks.
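Traffic over a peering connection uses private addresses. One quick sanity check is therefore to resolve the cluster's hostnames from inside your network and confirm they map to private IPs. This sketch rests on a few assumptions:

- You pass the individual node hostnames from the standard (non-SRV) form of the connection string. `socket.getaddrinfo` resolves address records, not the SRV record behind a `mongodb+srv://` URI.
- A public result only hints that the client isn't using the private path; it is not proof.

```python
import ipaddress
import socket
import sys
from typing import Set


def resolved_addresses(host: str, port: int = 27017) -> Set[str]:
    """Resolve `host` the way a TCP client would and return its IP addresses."""
    infos = socket.getaddrinfo(host, port, type=socket.SOCK_STREAM)
    # Each entry's sockaddr starts with the IP address string.
    return {info[4][0] for info in infos}


def all_private(addresses: Set[str]) -> bool:
    """True only if there is at least one address and every one is private."""
    return bool(addresses) and all(
        ipaddress.ip_address(addr).is_private for addr in addresses
    )


if __name__ == "__main__":
    # Usage (hostnames are placeholders): python3 check_private.py host1 host2 ...
    for host in sys.argv[1:]:
        addresses = resolved_addresses(host)
        status = "private" if all_private(addresses) else "NOT all private"
        print(host, sorted(addresses), status)
```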

Now, let's test our connection from one of the Kubernetes pods:
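As a first step, you can check plain TCP reachability to each Atlas node from inside a pod. For example, you could run a small script with `kubectl exec` in a pod that has Python 3 available. The sketch below assumes port 27017; use the port from your connection string. It only opens and closes a TCP connection and does not authenticate or perform the TLS handshake. A full test still needs a MongoDB driver or `mongosh` with your connection string. The script name and hostnames are placeholders.

```python
import socket
import sys


def can_reach(host: str, port: int = 27017, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        # create_connection resolves the name and tries each address in turn.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers DNS failures, refused connections, and timeouts.
        return False


if __name__ == "__main__":
    # Usage (placeholders): python3 reach.py node1.example.net node2.example.net
    for host in sys.argv[1:]:
        print(host, "reachable" if can_reach(host) else "NOT reachable")
```

If a node is unreachable here, revisit the peering status, route tables, and security rules before looking at credentials.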

Managed Kubernetes clusters offer a simple and modern way to deploy containerized applications to the vendor of your choice. It's great that we can easily secure their connections to MongoDB Atlas, the best cloud database offering there is, unlocking other possibilities to build incredible applications.

If you have questions, please head to our developer community website where the MongoDB engineers and the MongoDB community will help you build your next big idea with MongoDB.