Amazon DocumentDB is built on top of AWS’s custom Aurora platform, which has historically been used to host relational databases. While Aurora’s storage layer is distributed, its compute layer is not, limiting scaling options.

Scaling DocumentDB

DocumentDB supports a single primary node for writes and up to 15 replicas within a single Amazon region. While you can use replicas to scale read operations to DocumentDB (with limits, which we discuss below), there is no native capability to scale write operations beyond the single primary node. Newly introduced Global Clusters still have this same limitation, forcing all writes to go through a single node and therefore leaving the database still vulnerable to outages if the region hosting that primary node is unavailable. Recovery is a manual process, requiring application reconfiguration.

This inhibits the ability to scale applications as user populations and data volumes grow, or serve applications that need to be distributed to serve global audiences with a consistent, low latency experience. Instead, developers must manually shard the database at the application level, which adds development complexity and inhibits the ability to elastically expand and contract the cluster as workloads dictate.

DocumentDB tops out at a maximum database size of 64TB and 30,000 concurrent connections per instance.

How MongoDB Atlas is Different

Scale Out: Sharding

To meet the needs of apps with large data sets and high throughput requirements, MongoDB provides horizontal scale-out for databases on low-cost, commodity hardware or cloud infrastructure using a technique called sharding. Sharding automatically partitions and distributes data across multiple physical instances called shards.

Sharding allows developers to scale the database seamlessly as their apps grow beyond the hardware limits of a single primary, and it does this without adding complexity to the application: sharding is transparent, even when committing ACID transactions across shards. Whether there are one or a thousand shards, the application code for querying MongoDB remains the same. To respond to workload demand, nodes can be added or removed from the cluster in real time, and MongoDB will automatically rebalance the data accordingly, without manual intervention and without downtime. Live resharding enables database administrators to change the sharding strategy in real time, without database downtime. Starting at MongoDB 7.0, the shard key advisor commands provide users with metrics that will help them refine their shard keys.

DocumentDB supports only a single primary node for write operations, and does not support sharding.

Scale Up: More powerful instances

The is no practical limit to the size of database supported by MongoDB Atlas, and the largest instance supports up to 128,000 concurrent connections – more than 4x higher than DocumentDB. Like DocumentDB, MongoDB Atlas automatically scales storage capacity as data sets grow. MongoDB Atlas also offers the ability to scale instance sizes automatically in response to workload demand, something that DocumentDB does not currently support. Instead, ops staff must constantly monitor their database instances and manually scale as needed.

Scale Globally: Intelligent data distribution

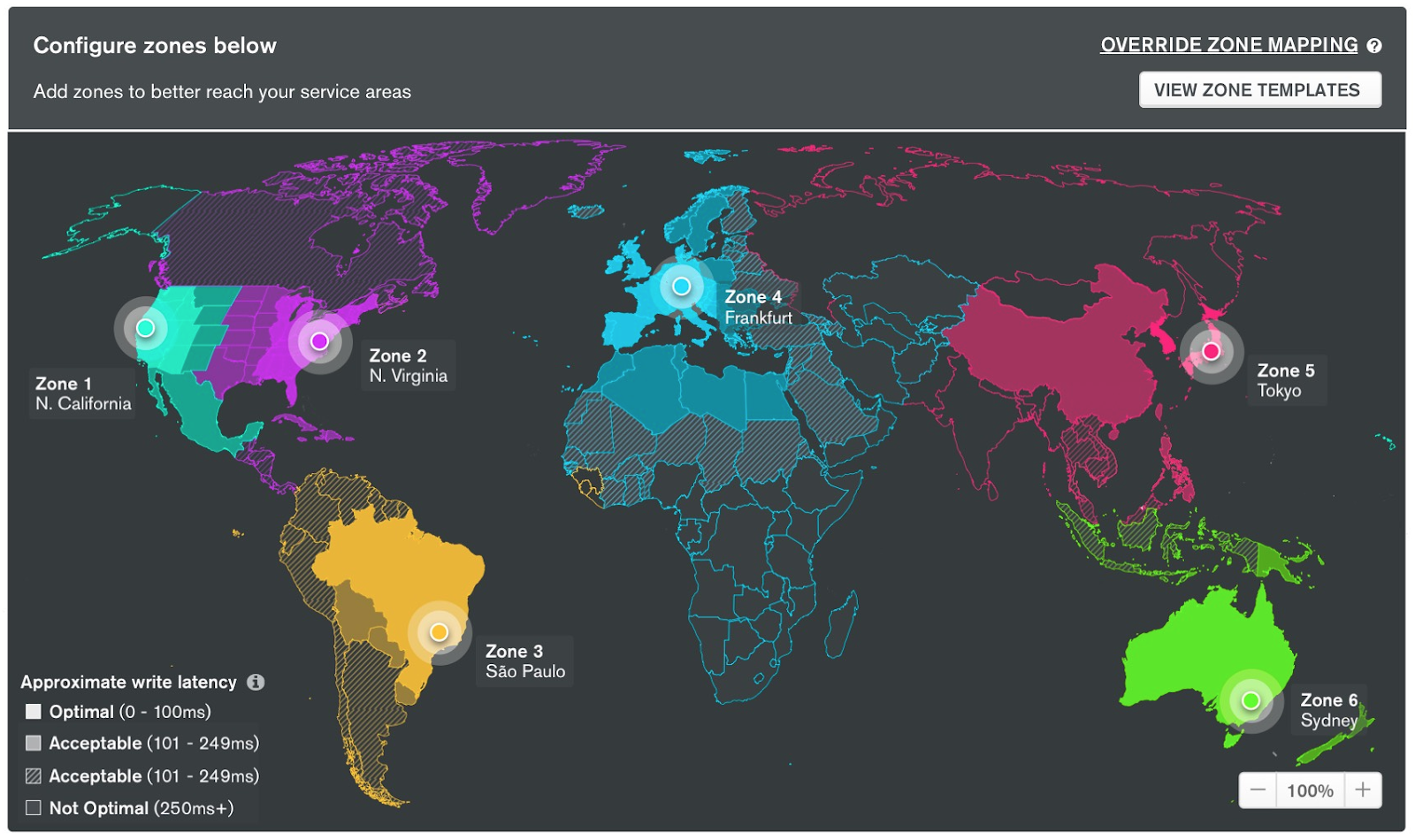

MongoDB Atlas Global Clusters allow organizations with distributed applications to easily geo-partition a single fully managed database to provide low latency reads and writes anywhere in the world. Data can be associated and directed to nodes in specified cloud regions, keeping it in close proximity to local users, and supporting compliance with personal data regulations like the General Data Protection Regulation (GDPR).

Developers can spin up Global Clusters on AWS, Azure, and Google Cloud — with just a few clicks in the MongoDB Atlas UI. With its single primary architecture for write operations, this functionality is unavailable to users of DocumentDB.

Figure 1: Serving always-on, globally distributed, write-everywhere apps with MongoDB Atlas Global Clusters

Resilience in DocumentDB

DocumentDB provides redundancy by replicating 6 copies of the data across Availability Zones, with up to 15 replicas all sharing the same physical storage volumes. DocumentDB will automatically failover to a replica when the primary fails. Amazon states in its documentation that this process typically takes less than 120 seconds, “and often less than 60 seconds”. This means the application will typically experience 60-120 seconds of downtime in the event of a primary failure as it waits for a replica to be promoted. Note that in our testing, we observed some primary elections taking up to 4 minutes – twice as long as Amazon’s advertised interval.

The developer will also need to add exception handling code to catch read and write failures and handle them appropriately during primary elections.

Recently-added Global Clusters do not significantly change this picture, since only one primary node can accept writes. This limitation exposes users to outages in case of a failure of that primary node or of the region hosting it. Manual application reconfiguration is required to recover from any such outage.

How MongoDB Atlas is Different

MongoDB maintains multiple copies of data using replica sets. Replica sets are self-healing with failover and recovery fully automated, so it is not necessary to manually intervene to restore a system in the event of a failure. Replica sets can span multiple regions, with fully automated recovery in the event of a full regional outage.

If the primary replica set member suffers a failure, one of the secondary members is automatically elected to primary, typically within 5 seconds, and the client connections automatically failover to that new primary. Any reads or writes that could not be serviced during the election can be automatically retried by the drivers once a new primary is established, with the MongoDB server enforcing exactly-once processing semantics. Retryable reads and writes enable MongoDB to ensure availability in the face of transient failure conditions, without sacrificing data consistency, while also reducing the amount of exception handling code developers need to write, test, and maintain.