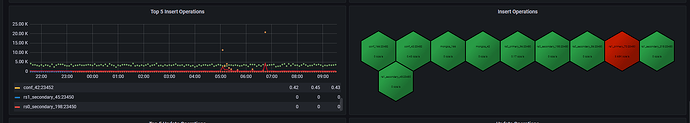

My MongoDB sharded cluster consists of two shards, rs0 and rs1, each with two nodes, one primary and one secondary (originally three nodes, but one was temporarily removed for performance reasons). The issue is that even after the insert operation has completed, rs1(shard1) continues to execute insert operations. The logs show that rs1(shard1) is performing a shard migration, but it has not been successful over and over again. Here are the specific reference details:

MongoDB version is 6.0.5

MongoDB Client: Mongocxx driver v3.7.1(with mongoc driver v1.23.2)

Command used to create the time-series collection:

sh.shardCollection(

"ds.KLineTrade_10min",

{"MetaData.MarketID":1, "MetaData.NameID":1, "MetaData.BidAsk": 1, "Time":1},

{

timeseries: {

timeField: "Time",

metaField: "MetaData",

granularity: "seconds"

}

}

)

Example data:

{

"_id" : ObjectId("6049b848242c00005e005cb3"),

"Time" : 2021-04-21T05:00:00.000+00:00, // Date

"MetaData":

{

"MarketID" : 0, // int32

"NameID": 1, // int32

"BidAsk": 0 // int32, 0: 1-level quote, 1: 5-level quote

},

"Open": 123.34, // double

"Close": 123.2, // double

"High": 123, // double

"Low": 1.2, // double

"Volume": 123.1, // double

"Opi": 122.1, // double

"AVPrice": 12.1, // double

"Scale": 0.5 // double

}

Error log:

{"t":{"$date":"2023-04-25T03:39:04.287+08:00"},"s":"I", "c":"SH_REFR", "id":4619901, "ctx":"CatalogCache-7850","msg":"Refreshed cached collection","attr":{"namespace":"ds.system.buckets.KLineTrade_30min","lookupSinceVersion":"13|1||64468e997daa9be5ee619980||Timestamp(1682345625, 41)","newVersion":"{ chunkVersion: { t: Timestamp(1682345625, 41), e: ObjectId('64468e997daa9be5ee619980'), v: Timestamp(13, 1) }, forcedRefreshSequenceNum: 269733, epochDisambiguatingSequenceNum: 269668 }","timeInStore":"{ chunkVersion: \"None\", forcedRefreshSequenceNum: 269732, epochDisambiguatingSequenceNum: 269667 }","durationMillis":2}}

{"t":{"$date":"2023-04-25T03:39:04.287+08:00"},"s":"I", "c":"SHARDING", "id":5087102, "ctx":"conn112710","msg":"Recipient failed to copy oplog entries for retryable writes and transactions from donor","attr":{"namespace":"ds.system.buckets.KLineTrade_10min","migrationSessionId":"rs0_rs1_6446dacb7daa9be5ee17e06c","fromShard":"rs0","error":"Destructor cleaning up thread"}}

{"t":{"$date":"2023-04-25T03:39:04.288+08:00"},"s":"I", "c":"MIGRATE", "id":22000, "ctx":"migrateThread","msg":"Starting receiving end of chunk migration","attr":{"chunkMin":{"meta.MarketID":0,"meta.NameID":11064,"meta.BidAsk":1,"control.min.Time":{"$date":"2020-11-17T01:00:00.000Z"}},"chunkMax":{"meta.MarketID":0,"meta.NameID":11276,"meta.BidAsk":1,"control.min.Time":{"$date":"2018-03-16T01:00:00.000Z"}},"namespace":"ds.system.buckets.KLineTrade_30min","fromShard":"rs0","epoch":{"$oid":"64468e997daa9be5ee619980"},"sessionId":"rs0_rs1_6446dad87daa9be5ee17e172","migrationId":{"uuid":{"$uuid":"db42e46f-ca26-453f-9290-cbae2139a93a"}}}}

{"t":{"$date":"2023-04-25T03:39:04.292+08:00"},"s":"I", "c":"CONNPOOL", "id":22576, "ctx":"ShardRegistry","msg":"Connecting","attr":{"hostAndPort":"shard0-primary.mg.com:23450"}}

{"t":{"$date":"2023-04-25T03:39:05.289+08:00"},"s":"I", "c":"COMMAND", "id":51803, "ctx":"conn112710","msg":"Slow query","attr":{"type":"command","ns":"admin.$cmd","command":{"_recvChunkStatus":"ds.system.buckets.KLineTrade_30min","waitForSteadyOrDone":true,"sessionId":"rs0_rs1_6446dad87daa9be5ee17e172","$clusterTime":{"clusterTime":{"$timestamp":{"t":1682365144,"i":5}},"signature":{"hash":{"$binary":{"base64":"crIebqqm/pL9InFc9MaG9wQGCqY=","subType":"0"}},"keyId":7163442577083990019}},"$configTime":{"$timestamp":{"t":1682365144,"i":2}},"$topologyTime":{"$timestamp":{"t":0,"i":1}},"$db":"admin"},"numYields":0,"reslen":853,"locks":{},"remote":"<ip_addr>:60426","protocol":"op_msg","durationMillis":1000}}

{"t":{"$date":"2023-04-25T03:39:06.084+08:00"},"s":"I", "c":"MIGRATE", "id":21999, "ctx":"batchApplier","msg":"Batch application failed","attr":{"error":"DuplicateKey{ keyPattern: { _id: 1 }, keyValue: { _id: ObjectId('5e6adb107db2a2e5ee632b22') } }: Insert of { _id: ObjectId('5e6adb107db2a2e5ee632b22'), control: { version: 2, min: { _id: ObjectId('64469f35db1f646f8c56b871'), Time: new Date(1584061200000), Open: 7.699999809265137, Close: 8.260000228881836, High: 8.279999732971191, Low: 7.690000057220459, Volume: 2180056.0, Opi: 88467440.0, AVPrice: 7.971197605133057, Scale: 0.3896368145942688 }, max: { _id: ObjectId('64469f35db1f646f8c56b878'), Time: new Date(1584081000000), Open: 8.510000228881836, Close: 8.5, High: 8.640000343322754, Low: 8.399999618530273, Volume: 11104572.0, Opi: 273989728.0, AVPrice: 8.545262336730957, Scale: 0.636924147605896 }, count: 8 }, meta: { BidAsk: 1, MarketID: 0, NameID: 11142 }, data: { Time: BinData(7, 0900C02D83D170010000830DE86E03000000000D000000002E9302FDB74C0A000000000E0000000000000000), Volume: BinData(7, 010000000080272E6541937D76A00D10BA0D00BD243C02D08505003D930A005CDF5A008EA6D3010000000000), _id: BinData(7, 070064469F35DB1F646F8C56B87180080200000000000000), Scale: BinData(7, 0100000000C0AE61E43F86FEFFFFFFBB811D000E000000D06E0800FEFFFFFF47A53B00FEFFFFFF2B5559000E00000078D05300FEFFFFFF2BC37F00FEFFFFFFDB3B980000), High: BinData(7, 0100000000205C8F2040856800000070C4F5080E0000007C140E00FEFFFFFF0BD703000E00000034330300FEFFFFFF67660600FEFFFFFFAB47010000), Opi: BinData(7, 0100000000C09F179541930DB99B3D406A15100D62D82E8096F10B0D6EAA2F801FCD080E046C320000000000), Low: BinData(7, 0100000000608FC21E40846887999982295C0068475C8F705C8F020E00000064660600FEFFFFFF2F330300FEFFFFFFAF47010000), AVPrice: BinData(7, 0100000000A081E21F408468C721DC70A41F086818AE0B70D82E020E000000B8D60200FEFFFFFF47130500FEFFFFFF3BB8020000), Close: BinData(7, 0100000000205C8F204085FEFFFFFFAB4701000E000000C0F50800FEFFFFFF6366060068879999705C8F020E000000D8A30000FEFFFFFF13AE070000), Open: BinData(7, 0100000000C0CCCC1E40850E000000EC513800FEFFFFFFAB4701000E000000EC510800FEFFFFFF8FC2050068C8F508703433030E000000B047010000) } } failed. :: caused by :: E11000 duplicate key error collection: ds.system.buckets.KLineTrade_30min dup key: { _id: ObjectId('5e6adb107db2a2e5ee632b22') }"}}

{"t":{"$date":"2023-04-25T03:39:06.084+08:00"},"s":"I", "c":"CONNPOOL", "id":22566, "ctx":"ShardRegistry","msg":"Ending connection due to bad connection status","attr":{"hostAndPort":"shard0-primary.mg.com:23450","error":"CallbackCanceled: Callback was canceled","numOpenConns":1}}

{"t":{"$date":"2023-04-25T03:39:06.084+08:00"},"s":"I", "c":"SHARDING", "id":22080, "ctx":"migrateThread","msg":"About to log metadata event","attr":{"namespace":"changelog","event":{"_id":"SHDX-I7-73:23450-2023-04-25T03:39:06.084+08:00-6446dada9394fa0f1351f214","server":"SHDX-I7-73:23450","shard":"rs1","clientAddr":"","time":{"$date":"2023-04-24T19:39:06.084Z"},"what":"moveChunk.to","ns":"ds.system.buckets.KLineTrade_30min","details":{"step 1 of 8":1,"step 2 of 8":0,"step 3 of 8":2,"min":{"meta.MarketID":0,"meta.NameID":11064,"meta.BidAsk":1,"control.min.Time":{"$date":"2020-11-17T01:00:00.000Z"}},"max":{"meta.MarketID":0,"meta.NameID":11276,"meta.BidAsk":1,"control.min.Time":{"$date":"2018-03-16T01:00:00.000Z"}},"to":"rs1","from":"rs0","note":"aborted"}}}}

{"t":{"$date":"2023-04-25T03:39:06.103+08:00"},"s":"I", "c":"MIGRATE", "id":21998, "ctx":"migrateThread","msg":"Error during migration","attr":{"error":"migrate failed: Location51008: _migrateClone failed: :: caused by :: operation was interrupted"}}

{"t":{"$date":"2023-04-25T03:39:06.104+08:00"},"s":"I", "c":"SHARDING", "id":5087102, "ctx":"migrateThread","msg":"Recipient failed to copy oplog entries for retryable writes and transactions from donor","attr":{"namespace":"ds.system.buckets.KLineTrade_30min","migrationSessionId":"rs0_rs1_6446dad87daa9be5ee17e172","fromShard":"rs0","error":"migrate failed: Location51008: _migrateClone failed: :: caused by :: operation was interrupted"}}

{"t":{"$date":"2023-04-25T03:39:06.104+08:00"},"s":"I", "c":"COMMAND", "id":51803, "ctx":"conn112710","msg":"Slow query","attr":{"type":"command","ns":"admin.$cmd","command":{"_recvChunkStatus":"ds.system.buckets.KLineTrade_30min","waitForSteadyOrDone":true,"sessionId":"rs0_rs1_6446dad87daa9be5ee17e172","$clusterTime":{"clusterTime":{"$timestamp":{"t":1682365145,"i":14082}},"signature":{"hash":{"$binary":{"base64":"tNt3TIhZF2HtKCEhbfHI8f0kDaY=","subType":"0"}},"keyId":7163442577083990019}},"$configTime":{"$timestamp":{"t":1682365144,"i":2}},"$topologyTime":{"$timestamp":{"t":0,"i":1}},"$db":"admin"},"numYields":0,"reslen":960,"locks":{},"remote":"<ip_addr>:60426","protocol":"op_msg","durationMillis":814}}

{"t":{"$date":"2023-04-25T03:39:06.104+08:00"},"s":"I", "c":"MIGRATE", "id":5004703, "ctx":"migrateThread","msg":"clearReceiveChunk ","attr":{"currentKeys":"[{ meta.MarketID: 0, meta.NameID: 11064, meta.BidAsk: 1, control.min.Time: new Date(1605574800000) }, { meta.MarketID: 0, meta.NameID: 11276, meta.BidAsk: 1, control.min.Time: new Date(1521162000000) })"}}

{"t":{"$date":"2023-04-25T03:39:06.122+08:00"},"s":"I", "c":"MIGRATE", "id":22026, "ctx":"range-deleter","msg":"Submitting range deletion task","attr":{"deletionTask":{"_id":{"$uuid":"db42e46f-ca26-453f-9290-cbae2139a93a"},"nss":"ds.system.buckets.KLineTrade_30min","collectionUuid":{"$uuid":"ec1c91b4-e024-4a50-9edc-45dc9873ee18"},"donorShardId":"rs0","range":{"min":{"meta.MarketID":0,"meta.NameID":11064,"meta.BidAsk":1,"control.min.Time":{"$date":"2020-11-17T01:00:00.000Z"}},"max":{"meta.MarketID":0,"meta.NameID":11276,"meta.BidAsk":1,"control.min.Time":{"$date":"2018-03-16T01:00:00.000Z"}}},"whenToClean":"now","timestamp":{"$timestamp":{"t":1682365144,"i":5}},"numOrphanDocs":63381},"migrationId":{"uuid":{"$uuid":"db42e46f-ca26-453f-9290-cbae2139a93a"}}}}

{"t":{"$date":"2023-04-25T03:39:06.122+08:00"},"s":"I", "c":"MIGRATE", "id":21990, "ctx":"range-deleter","msg":"Scheduling deletion of the collection's specified range","attr":{"namespace":"ds.system.buckets.KLineTrade_30min","range":"[{ meta.MarketID: 0, meta.NameID: 11064, meta.BidAsk: 1, control.min.Time: new Date(1605574800000) }, { meta.MarketID: 0, meta.NameID: 11276, meta.BidAsk: 1, control.min.Time: new Date(1521162000000) })"}}

{"t":{"$date":"2023-04-25T03:39:07.830+08:00"},"s":"E", "c":"SHARDING", "id":6419611, "ctx":"range-deleter","msg":"Cached orphan documents count became negative, resetting it to 0","attr":{"collectionUUID":{"uuid":{"$uuid":"ec1c91b4-e024-4a50-9edc-45dc9873ee18"}},"numOrphanDocs":-816,"delta":-64197,"numRangeDeletionTasks":1}}