Hi @Zarif_Alimov,

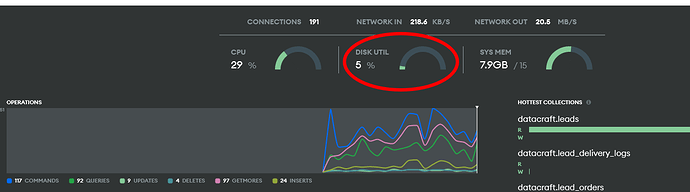

> Why such a sudden and drastic change to DISK UTIL (This is Disk I/O I assume)? What sends it over the edge?

Based on the title and the examples you provided, I assume your main concern is the sudden and drastic change in the Disk Util metric caused by a “marginal” amount of additional data. Please correct me if I am wrong. This is hard to determine with the information currently at hand, and there could be many reasons for it. I have provided some details below that may help you narrow down the cause.

For reference, the Disk Util % metric is defined in Atlas as:

> The percentage of time during which requests are being issued to and serviced by the partition. This includes requests from any process, not just MongoDB processes.

You can view the definition of each metric on your cluster's metrics page by selecting the info icon next to the chart, as detailed in this post.
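To make the definition more concrete: on a Linux host you manage yourself, "percentage of time during which requests are being issued to and serviced by" a device corresponds to the `io_ticks` counter (field 10 of `/proc/diskstats`), which is the counter behind `iostat`'s `%util` column. Atlas hosts are not directly accessible, and it is an assumption that Atlas derives its chart in exactly this way; the sketch below only illustrates what the metric measures. The device name passed to `sample_util` is hypothetical. Note that on devices that service many requests in parallel (such as NVMe or network-attached volumes), a high value does not necessarily mean the device is saturated.

```python
import time
from dataclasses import dataclass


@dataclass
class DiskSample:
    """Cumulative counter for one device, as exposed by /proc/diskstats."""
    io_ticks_ms: int  # field 10: total milliseconds the device had I/O in flight


def parse_io_ticks(diskstats_text: str, device: str) -> DiskSample:
    """Extract the io_ticks counter for `device` from /proc/diskstats content."""
    for line in diskstats_text.splitlines():
        parts = line.split()
        # Layout: major minor name field1 ... field11 (newer kernels add more fields)
        if len(parts) >= 14 and parts[2] == device:
            return DiskSample(io_ticks_ms=int(parts[12]))
    raise ValueError(f"device {device!r} not found in diskstats")


def util_percent(before: DiskSample, after: DiskSample, elapsed_ms: float) -> float:
    """Share of the interval during which the device was busy, capped at 100%."""
    busy_ms = after.io_ticks_ms - before.io_ticks_ms
    return min(100.0, 100.0 * busy_ms / elapsed_ms)


def sample_util(device: str, interval_s: float = 1.0) -> float:
    """Sample /proc/diskstats twice and return Util % for `device` (Linux only)."""
    with open("/proc/diskstats") as f:
        first = parse_io_ticks(f.read(), device)
    start = time.monotonic()
    time.sleep(interval_s)
    with open("/proc/diskstats") as f:
        second = parse_io_ticks(f.read(), device)
    elapsed_ms = (time.monotonic() - start) * 1000.0
    return util_percent(first, second, elapsed_ms)
```

For example, `sample_util("nvme1n1")` would report how busy that (hypothetical) device was over one second. Because the counter is "time with any request in flight", a handful of slow requests can drive it up just as effectively as many fast ones.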

As noted in the Use Case column of the Available Charts page for the Util % metric:

> Monitor whether utilization is high. Determine whether to increase the provisioned IOPS or upgrade the cluster.

You can check the Disk IOPS metric alongside the Disk Util % metric to see whether the two correlate, which would suggest you are hitting the IOPS limit configured for your cluster. Note also that some storage configurations can utilise burst credits, which allow a temporary increase in IOPS for a cluster. I would also recommend reading through the Fix IOPS Issues documentation, which covers this topic in further detail.

However, monitoring the Disk IOPS metric alone may not be sufficient to conclude that it is the cause. I would recommend reviewing several metrics alongside Disk IOPS to reach a more accurate conclusion. In some scenarios IOPS are not exhausted, yet Disk Util still spikes because more expensive operations cause higher I/O wait (visible in the System CPU metrics) or increased Disk Latency.
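The triage described above can be sketched as a small function that looks at one metrics sample at a time. The thresholds (`util_high`, `iops_headroom`) are hypothetical values chosen for illustration, not Atlas defaults, and the inputs are assumed to be read off the Disk IOPS and Disk Util % charts for the same time window.

```python
def diagnose(iops: float, provisioned_iops: float, util_pct: float,
             util_high: float = 80.0, iops_headroom: float = 0.9) -> str:
    """Rough triage of one sample; thresholds are illustrative, not Atlas defaults."""
    if util_pct < util_high:
        return "util not high"
    # High utilisation while running close to the configured IOPS limit.
    if iops >= iops_headroom * provisioned_iops:
        return "likely IOPS-bound"
    # High utilisation without exhausting IOPS: look at other metrics.
    return "busy below IOPS limit: check request size, latency, I/O wait"
```

If your storage uses burst credits, observed IOPS can temporarily exceed the baseline, so it may be worth comparing against the baseline you would fall back to once credits run out.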

The I/O request size should also be considered. For example, a single operation that inserts a large document may use only a few IOPS but take a relatively long time to complete, which can still increase Util %.
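A quick way to see this effect is to compute IOPS, average request size, and Util % from the same interval. The two workloads in the usage example are made-up numbers for illustration only; sector counts follow the Linux convention of 512-byte units.

```python
def summarize_interval(ops: int, sectors: int, busy_ms: float, interval_ms: float,
                       sector_bytes: int = 512) -> tuple[float, float, float]:
    """Return (IOPS, average request size in KiB, Util %) for one interval."""
    iops = ops / (interval_ms / 1000.0)
    avg_kib = (sectors * sector_bytes / ops / 1024) if ops else 0.0
    util = min(100.0, 100.0 * busy_ms / interval_ms)
    return iops, avg_kib, util


# Hypothetical workloads over a 1-second interval:
small_writes = summarize_interval(ops=3000, sectors=24000, busy_ms=300, interval_ms=1000)
large_writes = summarize_interval(ops=200, sectors=409600, busy_ms=900, interval_ms=1000)
```

Here `small_writes` is `(3000.0, 4.0, 30.0)` and `large_writes` is `(200.0, 1024.0, 90.0)`: the second workload issues far fewer IOPS yet keeps the device busy three times as long, because each request is much larger.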

> And is there a way to monitor it on the application level to scale it back somehow if I see it jump?

I am not sure about monitoring this at the application level, but on the Atlas side you can configure alerts to be sent when certain criteria are met. Please see the Configure Alert Settings page for more information.
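If you do want the application to back off on its own, one possible pattern (not an Atlas feature) is to adjust the write batch size based on the latest Util % reading: halve it when utilisation is high, and grow it slowly otherwise (additive increase, multiplicative decrease). The `read_util` callback is hypothetical; it stands in for whatever source of the metric you have, such as a value forwarded from your monitoring pipeline. Thresholds and step sizes are illustrative.

```python
from typing import Callable


class UtilThrottle:
    """Adjust a write batch size from Disk Util % readings (AIMD-style).

    `read_util` is a hypothetical callback returning the latest Util % value
    from your own monitoring source; it is not an Atlas API.
    """

    def __init__(self, read_util: Callable[[], float], high_pct: float = 80.0,
                 min_batch: int = 10, max_batch: int = 1000, step: int = 50) -> None:
        self.read_util = read_util
        self.high_pct = high_pct
        self.min_batch = min_batch
        self.max_batch = max_batch
        self.step = step
        self.batch = max_batch

    def next_batch_size(self) -> int:
        util = self.read_util()
        if util >= self.high_pct:
            # Multiplicative decrease: back off quickly while the disk is busy.
            self.batch = max(self.min_batch, self.batch // 2)
        else:
            # Additive increase: recover gradually once utilisation drops.
            self.batch = min(self.max_batch, self.batch + self.step)
        return self.batch
```

In a bulk-load loop you might call `next_batch_size()` before each PyMongo `insert_many` call and slice your pending documents accordingly. Since metrics are sampled at intervals, the throttle will always react with some delay, so it complements rather than replaces alerting.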

Hope this helps.

Regards,

Jason