After inspecting the plan of both scenario. This is my understanding.

Your query want to the number of documents that have "2024" in the list of activeDay and 0 < os < 9 for each appId. Take note that even if "2024" appears more than 1 time in activeDay array, you only count it once.

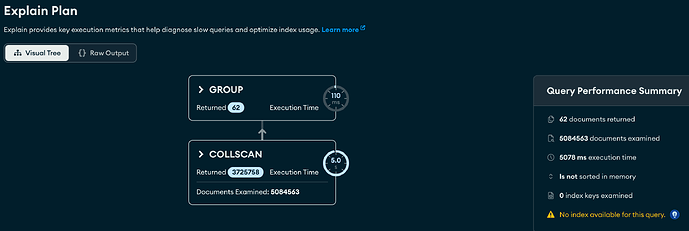

Let’s do COLLSCAN, you inspect the document one by one, if there is any "2024" in activeDay and 0 < os < 9, you pass that document (or more precise, the appId value) to the next stage.

Next group stage then count the number of apperance for each appId value then return results.

Quite straigthforward. Even if there are multiple "2024" value in activeDay, as soon as we encounter the first one, we can safely skip the rest of the array and mark current document as satisfied the filter.

Now we create muti-key index on { appId: 1, os: 1, activeDay: 1 }. Because activeDay is an array of values, the index will unwind the array so that it can index them. Therefore, multiple index keys can point to a single document, I think that’s why it’s called “multi-key”.

For example:

// Document

{

_id: "a", // just for demonstation

appId: 999,

os: 1,

activeDay: ["2024", "202401", "202402", "2024"]

}

The multi-key index will index following keys

{ appId: 999, os: 1, activeDay: "2024" } => { _id: a }

{ appId: 999, os: 1, activeDay: "202401" } => { _id: a }

{ appId: 999, os: 1, activeDay: "202402" } => { _id: a }

{ appId: 999, os: 1, activeDay: "2024" } => { _id: a }

Let’s do the same query again using this index. As you can see, I purposely put "2024" twice to making the point.

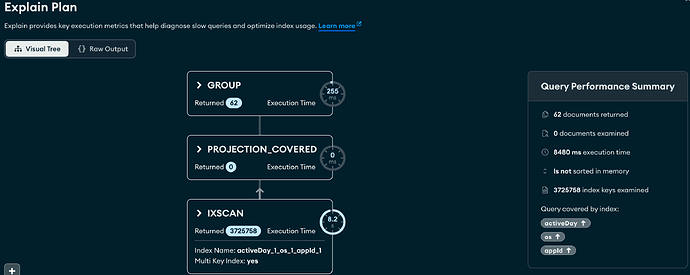

Now we inspect the index key one by one, because all needed field: os and activeDay for filter and appId for group are all indexed, the index has all infomation to perform the query and no need to look at the actual document, a.k.a. PROJECTION_COVERED.

If value of activeDay is "2024" and 0 < os < 9, then we pass the index key to the next stage.

Now you can see with above example, there are 2 { appId: 999, os: 1, activeDay: "2024" } => { _id: a } key that satisfy the query and pass to the next stage, even though it’s in the same document.

So because we only need to count the number of document, we need to eliminate all duplicated references ({ _id: a }) so that the group stage only need to count the apperance then return results. That’s the unique stage in the execution plan.

You can see that the stage take 8185 - 4574 = 3611 ms only to make sure there are no duplicate document reference after index scan. And in your case, I can tell that there are no such case as above because of "dupsDropped" : 0.0. But the database engine does not know that fact, and therefore, it has to run unique stage.

Hope this can help you.