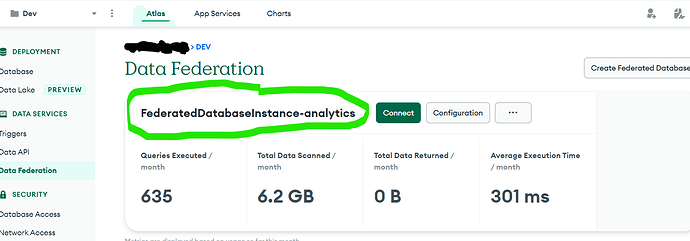

Recently I have been using this tutorial ([How to Automate Continuous Data Copying from MongoDB to S3 | MongoDB](https://How to Automate Continuous Data Copying from MongoDB to S3)) to try to copy data from assessments (collection name) > analytics (db name) > dev (cluster name) to an S3 bucket. I first created a federated database called FederatedDatabaseInstance-analytics, then created a trigger with this function:

    exports = function () {
      const datalake = context.services.get("FederatedDatabaseInstance-analytics");
      const db = datalake.db("analytics");
      const coll = db.collection("assessments");
      const pipeline = [
        /*{
          $match: {
            "time": {
              $gt: new Date(Date.now() - 60 * 60 * 1000),
              $lt: new Date(Date.now())
            }
          }
        },
        {
          "$out": {
            "s3": {
              "bucket": "322104163088-mongodb-data-ingestion",
              "region": "eu-west-2",
              "filename": { "$concat": [
                  "analytics",
                  "$_id"
                ]
              },
              "format": {
                "name": "JSON",
                "maxFileSize": "40GB"
                //"maxRowGroupSize": "30GB" //only applies to parquet
              }
            }
          }
        }*/
        {
          "$out": {
            "s3": {
              "bucket": "322104163088-mongodb-data-ingestion",
              "region": "eu-west-2",
              "filename": "analytics/",
              "format": {
                "name": "json",
                "maxFileSize": "100GB"
              }
            }
          }
        }
      ];
      return coll.aggregate(pipeline);
    };

The thing is, I get no errors when running it, but nothing appears in my bucket.
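To check whether an error is being silently swallowed, here is a minimal sketch of how the same pipeline could be run with explicit logging. It assumes the collection handle exposes `aggregate(...).toArray()`, as collection handles in Atlas Functions do. `buildPipeline` and `runExport` are hypothetical helper names I made up for this sketch and are not part of the tutorial.

```javascript
// Hypothetical helper: returns the same single-stage $out pipeline as the function above.
function buildPipeline() {
  return [
    {
      $out: {
        s3: {
          bucket: "322104163088-mongodb-data-ingestion",
          region: "eu-west-2",
          filename: "analytics/",
          format: { name: "json", maxFileSize: "100GB" }
        }
      }
    }
  ];
}

// Hypothetical helper: awaits the aggregation so that a failure is logged
// instead of being lost when the function returns.
async function runExport(coll) {
  try {
    // Draining the cursor waits for the aggregation to complete or fail.
    const docs = await coll.aggregate(buildPipeline()).toArray();
    console.log("aggregate finished, documents returned:", docs.length);
    return { ok: true, returned: docs.length };
  } catch (err) {
    console.error("aggregate failed:", err.message);
    return { ok: false, error: err.message };
  }
}

// Inside the trigger this could be wired up roughly as follows (assumption):
// exports = async function () {
//   const coll = context.services
//     .get("FederatedDatabaseInstance-analytics")
//     .db("analytics")
//     .collection("assessments");
//   return runExport(coll);
// };
```

If the log shows the aggregation finishing without an error, the problem is more likely in the federated database or bucket configuration than in the function itself.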