Hi,

Here is the summary of our case :

- we are using MongoDb Atlas (M30).

- we have a collection have 400K records (we are expecting it will be about 40M)

- We are using google cloud function, node.js and mongoose.

- For connect to MongoDB Atlas, we are using srv connection string, (‘mongodb+srv://…’ ) and ssl is enabled.

- MongoDB Atlas and Google Cloud function are in the same region.

- We are using lean() option for queries to get data in json format and save memory.

- We are adding projection to get just fields we need.

- We use index for our query and we tested and been sure it works.

- here is our index:

{

brand: 1,

facture: 1,

results.content.algo1.version: 1,

results.content.algo1.bestOf.segments.cluster: 1

_id: 1,

}

note that: we add _id to index just for trying _id pagination to get data.

- Our query is something like that:

find({

brand: "MY_BRAND",

facture: "MY_FACTURE",

'results.content.algo1.version': MY_VERSION,

'results.content.algo1.bestOf.segments.cluster': MY_CLUSTER

})

projection({

'platforms.platform1.devices': 1,

'platforms.platform1.devices': 1,

})

.sort({_id:1})

.limit(1000)

- and generally about 50k-100k record is matching from queries in total(of course we will use multiple queries with limit or cursor). (this is for 400k record, when we reach 40M record it will be parallelly increase)

- “segments” and “devices” are both array. so we couldn’t build a full covered index.

So here is the problem:

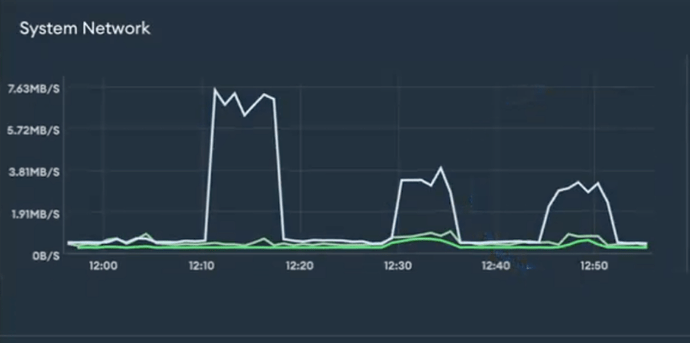

- When we try to querying data from GCF, it takes 3-4 seconds to response for a single query(limit 1000). But it just takes maximum 300-400ms on MongoDB atlas sheel and also when we monitor the same queries on Atlas console we saw querying only takes 300-400 ms.

- The size of result can be about 10-15MB. We know it is huge but i am not sure it should caused 10x times higher time.

- We tried cursor with batchSize and also _id pagination to get data fast. But it didn’t change.

- We know, trying the get smaller data will be the best. But we need that. In other hand, decreasing the limit can decrease the response time but in total it takes much time and caused so much query count for whole operation. Because we have to get all data at the end we need.

We may missing something about dealing with huge data on MongoDB.

So, do you think the gap between query and response time (300ms → 3seconds) is normal because of the size of returning data?

And do you have any other suggestion for handling this situation ?

Thank you.