Hi all,

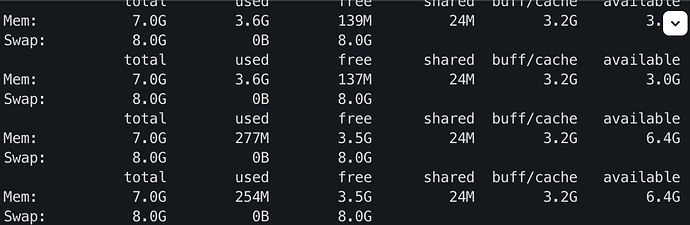

I am running a single instance of MongoDB 5.0.5 on a Virtual Machine running CentOS7 with 8Gb of RAM available. The machine is expected to receive a large number of connection and as I have learnt from here MongoDB roughly allocates 1Mb of memory per created connection, i.e. giving me a rough estimate of ~7000 max connections for the single instance. Is this reasoning correct? I noticed quickly that adding much more connection than that quickly leads to OOM kills. Adding a swap space prevents the OOM kills, but leads to DB crashes once too much memory is allocated on the swap. While I guess this is expected behaviour in the case of having >7000 connections, I am also seeing DB instabilities for far less connections (in the order of 4000) which should not lead to any problems as far as I can tell. Specifically, the database seems to become unreachable, even though enough memory seems to be available as can be seen in this free -h display:

The crash happens when there is still around 3Gb available, after wich the memory seems to be freed again. I also checked the number of available connections, but this also seems to be more than enough. In the /var/log/mongodb/mongod.log file I get the following BACKTRACE:

{"t":{"$date":"2022-01-07T02:11:19.677+01:00"},"s":"I", "c":"CONTROL", "id":31380, "ctx":"conn14209","msg":"BACKTRACE","attr":{"bt":{"backtrace":[{"a":"55C16D6730D5","b":"55C16980D000","o":"3E660D5","s":"_ZN5mongo18stack_trace_detail12_GLOBAL__N_119printStackTraceImplERKNS1_7OptionsEPNS_14StackTraceSinkE.constprop.357","s+":"215"},{"a":"55C16D675B69","b":"55C16980D000","o":"3E68B69","s":"_ZN5mongo15printStackTraceEv","s+":"29"},{"a":"55C16D66E3D6","b":"55C16980D000","o":"3E613D6","s":"_ZN5mongo29reportOutOfMemoryErrorAndExitEv","s+":"76"},{"a":"55C16D659991","b":"55C16980D000","o":"3E4C991","s":"_ZN5mongo12mongoReallocEPvm","s+":"21"},{"a":"55C16AD3491B","b":"55C16980D000","o":"152791B","s":"_ZN5mongo15BasicBufBuilderINS_21SharedBufferAllocatorEE15grow_reallocateEi","s+":"6B"},{"a":"55C16AD53C11","b":"55C16980D000","o":"1546C11","s":"_ZN5mongo3rpc17OpMsgReplyBuilder12reserveBytesEm","s+":"51"},{"a":"55C16AD6ABC7","b":"55C16980D000","o":"155DBC7","s":"_ZN5mongo12_GLOBAL__N_114RunCommandImpl3runEv","s+":"F7"},{"a":"55C16AD6CCAE","b":"55C16980D000","o":"155FCAE","s":"_ZN5mongo12_GLOBAL__N_119ExecCommandDatabase12_commandExecEv","s+":"1CE"},{"a":"55C16AD75840","b":"55C16980D000","o":"1568840","s":"_ZZN5mongo12_GLOBAL__N_114executeCommandESt10shared_ptrINS0_13HandleRequest16ExecutionContextEEENUlvE0_clEv","s+":"450"},{"a":"55C16AD77A30","b":"55C16980D000","o":"156AA30","s":"_ZZN5mongo15unique_functionIFvPNS_14future_details15SharedStateBaseEEE8makeImplIZNS1_10FutureImplINS1_8FakeVoidEE16makeContinuationIvZZNOS9_4thenIZNS_12_GLOBAL__N_114executeCommandESt10shared_ptrINSC_13HandleRequest16ExecutionContextEEEUlvE0_EEDaOT_ENKUlvE1_clEvEUlPNS1_15SharedStateImplIS8_EESN_E_EENS7_ISI_EEOT0_EUlS3_E_EEDaSJ_EN12SpecificImpl4callEOS3_","s+":"80"},{"a":"55C16AD32437","b":"55C16980D000","o":"1525437","s":"_ZN5mongo14future_details15SharedStateBase20transitionToFinishedEv","s+":"147"},{"a":"55C16AD7BDF2","b":"55C16980D000","o":"156EDF2","s":"_ZN5mongo14future_details10FutureImplINS0_8FakeVoidEE11generalImplIZNOS3_17propagateResultToEPNS0_15SharedStateImplIS2_EEEUlOS2_E_ZNOS3_17propagateResultToES7_EUlONS_6StatusEE0_ZNOS3_17propagateResultToES7_EUlvE1_EEDaOT_OT0_OT1_","s+":"152"},{"a":"55C16AD6F2BC","b":"55C16980D000","o":"15622BC","s":"_ZZN5mongo15unique_functionIFvPNS_14future_details15SharedStateBaseEEE8makeImplIZNS1_10FutureImplINS1_8FakeVoidEE16makeContinuationIvZZNOS9_4thenIZNS_12_GLOBAL__N_114executeCommandESt10shared_ptrINSC_13HandleRequest16ExecutionContextEEEUlvE_EEDaOT_ENKUlvE1_clEvEUlPNS1_15SharedStateImplIS8_EESN_E_EENS7_ISI_EEOT0_EUlS3_E_EEDaSJ_EN12SpecificImpl4callEOS3_","s+":"AC"},{"a":"55C16AD32437","b":"55C16980D000","o":"1525437","s":"_ZN5mongo14future_details15SharedStateBase20transitionToFinishedEv","s+":"147"},{"a":"55C16AD7622A","b":"55C16980D000","o":"156922A","s":"_ZN5mongo12_GLOBAL__N_114executeCommandESt10shared_ptrINS0_13HandleRequest16ExecutionContextEE","s+":"22A"},{"a":"55C16AD76C5E","b":"55C16980D000","o":"1569C5E","s":"_ZN5mongo12_GLOBAL__N_116receivedCommandsESt10shared_ptrINS0_13HandleRequest16ExecutionContextEE","s+":"1BE"},{"a":"55C16AD77976","b":"55C16980D000","o":"156A976","s":"_ZN5mongo12_GLOBAL__N_115CommandOpRunner3runEv","s+":"36"},{"a":"55C16AD71CA7","b":"55C16980D000","o":"1564CA7","s":"_ZN5mongo23ServiceEntryPointCommon13handleRequestEPNS_16OperationContextERKNS_7MessageESt10unique_ptrIKNS0_5HooksESt14default_deleteIS8_EE","s+":"307"},{"a":"55C16AD61B3F","b":"55C16980D000","o":"1554B3F","s":"_ZN5mongo23ServiceEntryPointMongod13handleRequestEPNS_16OperationContextERKNS_7MessageE","s+":"4F"},{"a":"55C16ADB9EF7","b":"55C16980D000","o":"15ACEF7","s":"_ZN5mongo9transport19ServiceStateMachine4Impl14processMessageEv","s+":"127"},{"a":"55C16ADBA227","b":"55C16980D000","o":"15AD227","s":"_ZZNO5mongo14future_details10FutureImplINS0_8FakeVoidEE4thenIZNS_9transport19ServiceStateMachine4Impl12startNewLoopERKNS_6StatusEEUlvE0_EEDaOT_ENKUlOS2_E_clESE_.isra.718","s+":"27"},{"a":"55C16ADBCB58","b":"55C16980D000","o":"15AFB58","s":"_ZN5mongo9transport19ServiceStateMachine4Impl12startNewLoopERKNS_6StatusE","s+":"1A8"},{"a":"55C16ADBD05F","b":"55C16980D000","o":"15B005F","s":"_ZZN5mongo15unique_functionIFvNS_6StatusEEE8makeImplIZNS_9transport19ServiceStateMachine4Impl15scheduleNewLoopES1_EUlS1_E_EEDaOT_EN12SpecificImpl4callEOS1_","s+":"7F"},{"a":"55C16CE38DA1","b":"55C16980D000","o":"362BDA1","s":"_ZZN5mongo15unique_functionIFvNS_6StatusEEE8makeImplIZNS_9transport26ServiceExecutorSynchronous18runOnDataAvailableERKSt10shared_ptrINS5_7SessionEES3_EUlS1_E_EEDaOT_EN12SpecificImpl4callEOS1_","s+":"41"},{"a":"55C16B485B83","b":"55C16980D000","o":"1C78B83","s":"_ZZN5mongo15unique_functionIFvvEE8makeImplIZNS_9transport15ServiceExecutor8scheduleENS0_IFvNS_6StatusEEEEEUlvE_EEDaOT_EN12SpecificImpl4callEv","s+":"33"},{"a":"55C16CE38F5B","b":"55C16980D000","o":"362BF5B","s":"_ZZN5mongo15unique_functionIFvvEE8makeImplIZNS_9transport26ServiceExecutorSynchronous12scheduleTaskES2_NS4_15ServiceExecutor13ScheduleFlagsEEUlvE0_EEDaOT_EN12SpecificImpl4callEv","s+":"BB"},{"a":"55C16CE3CE0C","b":"55C16980D000","o":"362FE0C","s":"_ZZN5mongo15unique_functionIFvvEE8makeImplIZNS_25launchServiceWorkerThreadES2_EUlvE2_EEDaOT_EN12SpecificImpl4callEv","s+":"5C"},{"a":"55C16CE3CE7C","b":"55C16980D000","o":"362FE7C","s":"_ZN5mongo12_GLOBAL__N_17runFuncEPv","s+":"1C"},{"a":"7F9B9F94CEA5","b":"7F9B9F945000","o":"7EA5","s":"start_thread","s+":"C5"},{"a":"7F9B9F675B0D","b":"7F9B9F577000","o":"FEB0D","s":"clone","s+":"6D"}],"processInfo":{"mongodbVersion":"5.0.5","gitVersion":"d65fd89df3fc039b5c55933c0f71d647a54510ae","compiledModules":[],"uname":{"sysname":"Linux","release":"3.10.0-1160.45.1.el7.x86_64","version":"#1 SMP Wed Oct 13 17:20:51 UTC 2021","machine":"x86_64"},"somap":[{"b":"55C16980D000","elfType":3,"buildId":"BFFEFC1C655BB329C72D082A0F159A3CE5FBF49F"},{"b":"7F9B9F945000","path":"/lib64/libpthread.so.0","elfType":3,"buildId":"E10CC8F2B932FC3DAEDA22F8DAC5EBB969524E5B"},{"b":"7F9B9F577000","path":"/lib64/libc.so.6","elfType":3,"buildId":"8DA9707D518E88179B1B2903CD846C82102D84C1"}]}}}}

The ‘reportOutOfMemoryErrorAndExitEv’ message seems to indicate to me that this is some kind of memory issue, but as you see from the screenshot above more than enough should be available at this point. I also checked if the mongod process is being OOM killed, but this is not the case as can be seen in the /var/log/messages file:

Jan 7 01:35:42 vm001 systemd: Started MongoDB Database Server.

Jan 7 02:11:20 vm001 systemd: mongod.service: main process exited, code=exited, status=14/n/a

Jan 7 02:11:20 vm001 systemd: Unit mongod.service entered failed state.

Jan 7 02:11:20 vm001 systemd: mongod.service failed.

Previously, I also experienced the following errors:

{"t":{"$date":"2022-01-06T23:29:44.321+01:00"},"s":"I", "c":"NETWORK", "id":22943, "ctx":"listener","msg":"Connection accepted","attr":{"remote":"128.142.58.50:40884","uuid":"433b7d36-d00f-4bde-988d-2c6725ccc646","connectionId":10408,"connectionCount":10354}}

{"t":{"$date":"2022-01-06T23:29:44.321+01:00"},"s":"E", "c":"-", "id":4850900, "ctx":"listener","msg":"pthread_create failed","attr":{"error":"Resource temporarily unavailable"}}

{"t":{"$date":"2022-01-06T23:29:44.321+01:00"},"s":"E", "c":"-", "id":22948, "ctx":"listener","msg":"Thread creation failed","attr":{"error":"pthread_create failed"}}

{"t":{"$date":"2022-01-06T23:29:44.321+01:00"},"s":"W", "c":"EXECUTOR", "id":22993, "ctx":"listener","msg":"Unable to schedule a new loop for the service state machine","attr":{"error":{"code":1,"codeName":"InternalError","errmsg":"Failed to create service entry worker thread: pthread_create failed"}}}

I already tried increasing all the possible ulimits that may play a role. Here is the output of some relevant files/commands

1) Return-Limits of mongod process

[mongod #167766 -- limits]

Limit Soft Limit Hard Limit Units

Max cpu time unlimited unlimited seconds

Max file size unlimited unlimited bytes

Max data size unlimited unlimited bytes

Max stack size 8388608 unlimited bytes

Max core file size 0 unlimited bytes

Max resident set unlimited unlimited bytes

Max processes 1048576 1048576 processes

Max open files 1048576 1048576 files

Max locked memory unlimited unlimited bytes

Max address space unlimited unlimited bytes

Max file locks unlimited unlimited locks

Max pending signals 28400 28400 signals

Max msgqueue size 819200 819200 bytes

Max nice priority 0 0

Max realtime priority 0 0

Max realtime timeout unlimited unlimited us

2) ulimit -a

core file size (blocks, -c) 0

data seg size (kbytes, -d) unlimited

scheduling priority (-e) 0

file size (blocks, -f) unlimited

pending signals (-i) 28400

max locked memory (kbytes, -l) unlimited

max memory size (kbytes, -m) unlimited

open files (-n) 1048576

pipe size (512 bytes, -p) 8

POSIX message queues (bytes, -q) 819200

real-time priority (-r) 0

stack size (kbytes, -s) unlimited

cpu time (seconds, -t) unlimited

max user processes (-u) 1048576

virtual memory (kbytes, -v) unlimited

file locks (-x) unlimited

3) /etc/security/limits.conf

* soft nofile 1048576

* hard nofile 1048576

* soft nproc 1048576

* hard nproc 1048576

* soft fsize unlimited

* hard fsize unlimited

* soft cpu unlimited

* hard cpu unlimited

* soft rss unlimited

* hard rss unlimited

* soft memlock unlimited

* hard memlock unlimited

* soft stack unlimited

* hard stack unlimited

* soft as unlimited

* hard as unlimited

4) /lib/systemd/system/mongod.service

[Unit]

Description=MongoDB Database Server

Documentation=https://docs.mongodb.org/manual

After=network-online.target

Wants=network-online.target

[Service]

User=mongod

Group=mongod

Environment="OPTIONS=-f /etc/mongod.conf"

EnvironmentFile=-/etc/sysconfig/mongod

ExecStart=/usr/bin/mongod $OPTIONS

ExecStartPre=/usr/bin/mkdir -p /var/run/mongodb

ExecStartPre=/usr/bin/chown mongod:mongod /var/run/mongodb

ExecStartPre=/usr/bin/chmod 0755 /var/run/mongodb

PermissionsStartOnly=true

PIDFile=/var/run/mongodb/mongod.pid

Type=forking

# file size

LimitFSIZE=infinity

# cpu time

LimitCPU=infinity

# virtual memory size

LimitAS=infinity

# open files

LimitNOFILE=1048576

# processes/threads

LimitNPROC=1048576

# locked memory

LimitMEMLOCK=infinity

# total threads (user+kernel)

TasksMax=infinity

TasksAccounting=false

# Recommended limits for mongod as specified in

# https://docs.mongodb.com/manual/reference/ulimit/#recommended-ulimit-settings

[Install]

WantedBy=multi-user.target

5) Added 99-mongodb-nproc.conf in /etc/security/limits.d

* soft nproc 1048576

* hard nproc 1048576

6) /proc/sys/kernel/threads-max

1000007) /proc/sys/kernel/pid_max

41943038) /etc/sysctl.conf

fs.file-max=2147483584

vm.swappiness=20

vm.max_map_count=262144

net.netfilter.nf_conntrack_max = 32768

net.core.somaxconn=1024

net.core.netdev_max_backlog=2000

net.ipv4.tcp_max_syn_backlog=2048

net.ipv4.tcp_tw_recycle=0

net.ipv4.tcp_tw_reuse=0

net.ipv4.tcp_orphan_retries=1

net.ipv4.tcp_fin_timeout=25

net.ipv4.tcp_max_orphans=8192

net.ipv4.ip_local_port_range=1024 65535

9) /etc/mongod.conf

# where to write logging data.

systemLog:

destination: file

logAppend: true

path: /var/log/mongodb/mongod.log

# Where and how to store data.

storage:

dbPath: /mnt/mongo

journal:

enabled: true

# engine:

# wiredTiger:

# how the process runs

processManagement:

fork: true # fork and run in background

pidFilePath: /var/run/mongodb/mongod.pid # location of pidfile

timeZoneInfo: /usr/share/zoneinfo

# network interfaces

net:

port: 27017

bindIpAll: true

I am also aware of the net.maxIncomingConnections option in /etc/mongod.conf, but as the crash occurs well below the default value of 65536 connections, this does not seem to be issue.

As you have seen, I tried to increase all relevant limits to prevent these kind of crashes, but at this point I am not sure what else could go wrong. Is there anything I am missing? Any help is greatly appreciated!