Hello Mongo Folks,

I am deploying a mongo-sharded cluster using the bitnami mongo-sharded helm chart for Kubernetes.

The configuration is the following:

- 2 mongos routers
- 2 replicas per shard, plus 1 arbiter
- 3 replicas for the config servers
- Mongo version: 5
- Docker image: bitnami mongo-sharded - 5.0.5-debian-10-r13

We have about 60 GB of indexes held in RAM across the cluster, with one database per client (~100 databases). Some are a few MB, some are a few GB.

The cluster runs on nodes with 32 GB of RAM and 4 CPUs.
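As a rough sanity check on these numbers, here is a minimal sketch of the documented default WiredTiger cache size, which is the larger of 50% of (RAM - 1 GB) and 256 MB. It assumes that no explicit cache size is set in the chart values and that each mongod sees the full node memory rather than a smaller container limit; I have not verified either for this deployment. The function name is only illustrative.

```python
GIB = 1024 ** 3
MIB = 1024 ** 2


def default_wiredtiger_cache_bytes(ram_bytes: int) -> int:
    """Default WiredTiger cache: max(50% of (RAM - 1 GiB), 256 MiB)."""
    half_of_remaining = (ram_bytes - GIB) // 2
    return max(half_of_remaining, 256 * MIB)


if __name__ == "__main__":
    # Assumption: mongod sees all 32 GiB of the node.
    cache = default_wiredtiger_cache_bytes(32 * GIB)
    print(f"default cache per mongod: {cache / GIB:.1f} GiB")
```

Under those assumptions, each mongod would cache at most about 15.5 GiB, so how much of the index data can stay in RAM also depends on the number of shards.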

Problem: When I bumped the Docker image from 5.0.5-debian-10-r0 to 5.0.5-debian-10-r13, I got a rolling update that took down the arbiter and the secondary of each shard. Once they were back up, the primary was immediately taken down and updated as well.

I expected the indexes to be loaded back into RAM shortly afterwards, but the memory usage shows no noticeable increase.

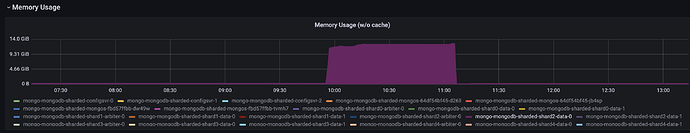

The indexes are still present in the database when I list them, but the RAM usage of the pods is very low and is not increasing. See the attached picture:

Description of the picture:

- Yesterday, I manually recreated the indexes of one database running on one shard (after a rolling update, the indexes are not recreated). That’s why the memory is low.

- From 9h50 to 11h05, I manually recreated all the indexes of one database running on the shard. 5 to 10 minutes are enough to generate the indexes.

- From 11h05 on, I started a rolling update to change the Docker image from 5.0.5-debian-10-r13 to 5.0.5-debian-10-r12. After each pod restart, the indexes seem “stale”: the memory doesn’t increase as it is supposed to.
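To make the observation above easier to reproduce, here is a minimal sketch of how the index size of one database could be compared with the WiredTiger cache usage of a single mongod. It assumes `pymongo` is installed and that the URI points directly at a shard member (the `wiredTiger` section is not present in `serverStatus` on a mongos). The function names, the URI and the database name are hypothetical placeholders; `indexSize`, `bytes currently in the cache` and `maximum bytes configured` are the field names reported by `dbStats` and `serverStatus`.

```python
from typing import Any, Mapping


def cache_report(db_stats: Mapping[str, Any],
                 server_status: Mapping[str, Any]) -> dict:
    """Summarise index size versus WiredTiger cache usage for one mongod."""
    cache = server_status["wiredTiger"]["cache"]
    used = cache["bytes currently in the cache"]
    limit = cache["maximum bytes configured"]
    return {
        "index_bytes": db_stats["indexSize"],
        "cache_used_bytes": used,
        "cache_max_bytes": limit,
        "cache_fill_ratio": used / limit,
    }


def collect(uri: str, db_name: str) -> dict:
    """Fetch dbStats and serverStatus from a mongod and build the report."""
    # Imported lazily so cache_report() can be used without pymongo installed.
    from pymongo import MongoClient

    client = MongoClient(uri)
    db_stats = client[db_name].command("dbStats")
    server_status = client.admin.command("serverStatus")
    return cache_report(db_stats, server_status)


if __name__ == "__main__":
    # Hypothetical URI and database name; replace with real values.
    print(collect("mongodb://localhost:27017", "client_db"))
```

Running this before and after a pod restart should show whether the cache fill ratio drops and whether it climbs back once queries hit the indexes again.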

Am I missing something?

Best Regards

PS: I opened a GitHub issue on the bitnami mongo-sharded Helm chart, and one user advised me to post here as well, as it may be a more general question.