Hi All,

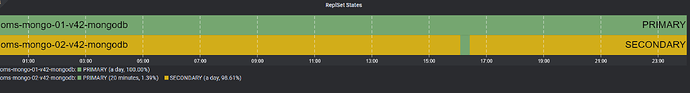

My MongoDB cluster sometimes ends up with two primaries at the same time. Node 1, the real primary, comes under high load and becomes unresponsive, so a secondary node is promoted to primary. However, node 1 does not release its primary status at the same time, which causes a race condition when replication returns to normal.

This issue leads to data loss in our applications: during the race condition, data is written to node 2, and when node 1 comes back as the real primary, everything is updated with the data from node 1, so the writes that went to node 2 are lost.

The attached screenshot shows the period when there were two primaries, which lasted 20 minutes.
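In case it helps with reproducing or monitoring this, here is a minimal sketch of how the condition could be detected. It assumes you have already collected one `replSetGetStatus` result from each node, and it only relies on the `members`, `name`, `state`, and `self` fields of that output. The function name, host names, and sample data are hypothetical and are not taken from my cluster.

```python
from typing import Any, Dict, List

PRIMARY_STATE = 1  # numeric replica set member state for PRIMARY


def self_reported_primaries(statuses: List[Dict[str, Any]]) -> List[str]:
    """Return the names of nodes that report themselves as PRIMARY.

    Each item in `statuses` is one node's replSetGetStatus-style document.
    Only the member entry flagged with `self: True` is trusted, because
    each node can only speak with certainty about its own state.
    """
    primaries = set()
    for status in statuses:
        for member in status.get("members", []):
            if member.get("self") and member.get("state") == PRIMARY_STATE:
                primaries.add(member.get("name", "<unknown>"))
    return sorted(primaries)


# Hypothetical sample: node1 and node2 both believe they are primary.
sample_statuses = [
    {"set": "rs0", "members": [
        {"name": "node1:27017", "state": 1, "self": True},
        {"name": "node2:27017", "state": 8},
    ]},
    {"set": "rs0", "members": [
        {"name": "node1:27017", "state": 8},
        {"name": "node2:27017", "state": 1, "self": True},
    ]},
    {"set": "rs0", "members": [
        {"name": "node2:27017", "state": 1},
        {"name": "node3:27017", "state": 2, "self": True},
    ]},
]

if __name__ == "__main__":
    found = self_reported_primaries(sample_statuses)
    if len(found) > 1:
        print("More than one node reports PRIMARY:", found)
```

With PyMongo, each status document could probably be fetched by connecting directly to a single node and running `client.admin.command("replSetGetStatus")`, but I have not wired that part up here.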

This is the first time I've run into this kind of issue. If anyone has experience with it, please share a workaround.

MongoDB version: Community 6.0.4

OS: Rocky Linux 9.3

Any help with solving this issue would be highly appreciated.

Regards,

Hendra