Thanks Stennie, you are very kind. Sorry for the late reply; I have been a little busy.

Please see the information below:

- Mongo Version : V4.4.6

- O/S : CentOS 7.6

- Terminated : OOM. I ran dmesg and got the message "Out of memory: Kill process 121839 (mongod) score 496 or sacrifice child"

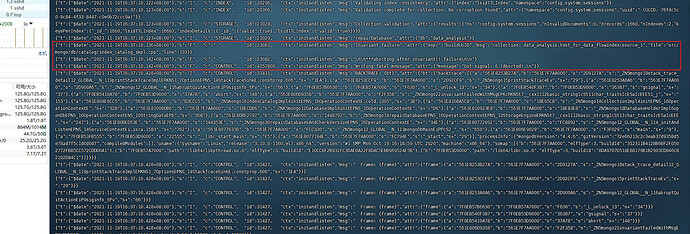

- Log text about test_for_data_flow :

{"t":{"$date":"2021-11-19T15:34:24.686+08:00"},"s":"I", "c":"INDEX", "id":20438, "ctx":"conn327586","msg":"Index build: registering","attr":{"buildUUID":{"uuid":{"$uuid":"820a0f1e-ed49-4b93-8c79-cab46040dafa"}},"namespace":"data_analysis.test_for_data_flow","collectionUUID":{"uuid":{"$uuid":"11c09284-614d-467f-81af-3de7a93012ff"}},"indexes":1,"firstIndex":{"name":"source_1"}}}

{"t":{"$date":"2021-11-19T15:34:27.280+08:00"},"s":"I", "c":"INDEX", "id":20384, "ctx":"IndexBuildsCoordinatorMongod-4","msg":"Index build: starting","attr":{"namespace":"data_analysis.test_for_data_flow","buildUUID":null,"properties":{"v":2,"key":{"source":1.0},"name":"source_1"},"method":"Hybrid","maxTemporaryMemoryUsageMB":200}}

- Repair Process: I just ran the following command:

mongod --dbpath /data/bk.mongo/ --directoryperdb --wiredTigerDirectoryForIndexes --repair

- Backup: I took a backup of the dbPath after repairing the fault.

I thought all of the problems came from the unexpected shutdown.

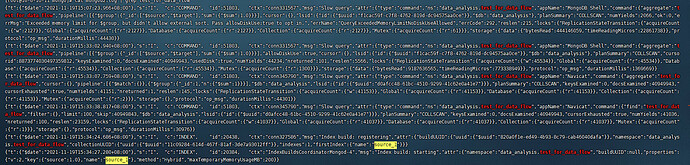

I found another unexpected shutdown and restarted mongod. The log is shown below (I guess it recovers automatically even without --repair):

{"t":{"$date":"2021-11-24T13:47:03.969+08:00"},"s":"I", "c":"NETWORK", "id":22944, "ctx":"conn253368","msg":"Connection ended","attr":{"remote":"127.0.0.1:44132","connectionId":253368,"connectionCount":86}}

{"t":{"$date":"2021-11-24T13:54:22.251+08:00"},"s":"I", "c":"CONTROL", "id":23285, "ctx":"main","msg":"Automatically disabling TLS 1.0, to force-enable TLS 1.0 specify --sslDisabledProtocols 'none'"}

{"t":{"$date":"2021-11-24T13:54:22.740+08:00"},"s":"W", "c":"ASIO", "id":22601, "ctx":"main","msg":"No TransportLayer configured during NetworkInterface startup"}

{"t":{"$date":"2021-11-24T13:54:22.760+08:00"},"s":"I", "c":"NETWORK", "id":4648601, "ctx":"main","msg":"Implicit TCP FastOpen unavailable. If TCP FastOpen is required, set tcpFastOpenServer, tcpFastOpenClient, and tcpFastOpenQueueSize."}

{"t":{"$date":"2021-11-24T13:54:22.761+08:00"},"s":"I", "c":"STORAGE", "id":4615611, "ctx":"initandlisten","msg":"MongoDB starting","attr":{"pid":162546,"port":27017,"dbPath":"/data/mongodb","architecture":"64-bit","host":"10-0-16-11"}}

{"t":{"$date":"2021-11-24T13:54:22.761+08:00"},"s":"I", "c":"CONTROL", "id":23403, "ctx":"initandlisten","msg":"Build Info","attr":{"buildInfo":{"version":"4.4.6","gitVersion":"72e66213c2c3eab37d9358d5e78ad7f5c1d0d0d7","openSSLVersion":"OpenSSL 1.0.1e-fips 11 Feb 2013","modules":[],"allocator":"tcmalloc","environment":{"distmod":"rhel70","distarch":"x86_64","target_arch":"x86_64"}}}}

{"t":{"$date":"2021-11-24T13:54:22.761+08:00"},"s":"I", "c":"CONTROL", "id":51765, "ctx":"initandlisten","msg":"Operating System","attr":{"os":{"name":"CentOS Linux release 7.9.2009 (Core)","version":"Kernel 3.10.0-1160.el7.x86_64"}}}

{"t":{"$date":"2021-11-24T13:54:22.761+08:00"},"s":"I", "c":"CONTROL", "id":21951, "ctx":"initandlisten","msg":"Options set by command line","attr":{"options":{"config":"/etc/mongod.conf","net":{"bindIp":"0.0.0.0","port":27017,"unixDomainSocket":{"enabled":false}},"processManagement":{"timeZoneInfo":"/usr/share/zoneinfo"},"security":{"authorization":"enabled"},"storage":{"dbPath":"/data/mongodb","directoryPerDB":true,"journal":{"enabled":true},"wiredTiger":{"engineConfig":{"directoryForIndexes":true}}}}}}

{"t":{"$date":"2021-11-24T13:54:22.762+08:00"},"s":"W", "c":"STORAGE", "id":22271, "ctx":"initandlisten","msg":"Detected unclean shutdown - Lock file is not empty","attr":{"lockFile":"/data/mongodb/mongod.lock"}}

{"t":{"$date":"2021-11-24T13:54:22.803+08:00"},"s":"I", "c":"STORAGE", "id":22270, "ctx":"initandlisten","msg":"Storage engine to use detected by data files","attr":{"dbpath":"/data/mongodb","storageEngine":"wiredTiger"}}

{"t":{"$date":"2021-11-24T13:54:22.804+08:00"},"s":"W", "c":"STORAGE", "id":22302, "ctx":"initandlisten","msg":"Recovering data from the last clean checkpoint."}

{"t":{"$date":"2021-11-24T13:54:22.804+08:00"},"s":"I", "c":"STORAGE", "id":22315, "ctx":"initandlisten","msg":"Opening WiredTiger","attr":{"config":"create,cache_size=128357M,session_max=33000,eviction=(threads_min=4,threads_max=4),config_base=false,statistics=(fast),log=(enabled=true,archive=true,path=journal,compressor=snappy),file_manager=(close_idle_time=100000,close_scan_interval=10,close_handle_minimum=250),statistics_log=(wait=0),verbose=[recovery_progress,checkpoint_progress,compact_progress],"}}

{"t":{"$date":"2021-11-24T13:54:23.767+08:00"},"s":"I", "c":"STORAGE", "id":22430, "ctx":"initandlisten","msg":"WiredTiger message","attr":{"message":"[1637733263:767777][162546:0x7f913d312bc0], txn-recover: [WT_VERB_RECOVERY_PROGRESS] Recovering log 4585 through 4616"}}

{"t":{"$date":"2021-11-24T13:54:24.767+08:00"},"s":"I", "c":"STORAGE", "id":22430, "ctx":"initandlisten","msg":"WiredTiger message","attr":{"message":"[1637733264:767606][162546:0x7f913d312bc0], txn-recover: [WT_VERB_RECOVERY_PROGRESS] Recovering log 4586 through 4616"}}

{"t":{"$date":"2021-11-24T13:54:26.034+08:00"},"s":"I", "c":"STORAGE", "id":22430, "ctx":"initandlisten","msg":"WiredTiger message","attr":{"message":"[1637733266:33992][162546:0x7f913d312bc0], txn-recover: [WT_VERB_RECOVERY_PROGRESS] Recovering log 4587 through 4616"}}

{"t":{"$date":"2021-11-24T13:54:27.347+08:00"},"s":"I", "c":"STORAGE", "id":22430, "ctx":"initandlisten","msg":"WiredTiger message","attr":{"message":"[1637733267:347907][162546:0x7f913d312bc0], txn-recover: [WT_VERB_RECOVERY_PROGRESS] Recovering log 4588 through 4616"}}

{"t":{"$date":"2021-11-24T13:54:28.554+08:00"},"s":"I", "c":"STORAGE", "id":22430, "ctx":"initandlisten","msg":"WiredTiger message","attr":{"message":"[1637733268:554838][162546:0x7f913d312bc0], txn-recover: [WT_VERB_RECOVERY_PROGRESS] Recovering log 4589 through 4616"}}

{"t":{"$date":"2021-11-24T13:54:29.838+08:00"},"s":"I", "c":"STORAGE", "id":22430, "ctx":"initandlisten","msg":"WiredTiger message","attr":{"message":"[1637733269:838371][162546:0x7f913d312bc0], txn-recover: [WT_VERB_RECOVERY_PROGRESS] Recovering log 4590 through 4616"}}

{"t":{"$date":"2021-11-24T13:54:31.109+08:00"},"s":"I", "c":"STORAGE", "id":22430, "ctx":"initandlisten","msg":"WiredTiger message","attr":{"message":"[1637733271:109756][162546:0x7f913d312bc0], txn-recover: [WT_VERB_RECOVERY_PROGRESS] Recovering log 4591 through 4616"}}

{"t":{"$date":"2021-11-24T13:54:32.324+08:00"},"s":"I", "c":"STORAGE", "id":22430, "ctx":"initandlisten","msg":"WiredTiger message","attr":{"message":"[1637733272:324505][162546:0x7f913d312bc0], txn-recover: [WT_VERB_RECOVERY_PROGRESS] Recovering log 4592 through 4616"}}

{"t":{"$date":"2021-11-24T13:54:33.539+08:00"},"s":"I", "c":"STORAGE", "id":22430, "ctx":"initandlisten","msg":"WiredTiger message","attr":{"message":"[1637733273:539825][162546:0x7f913d312bc0], txn-recover: [WT_VERB_RECOVERY_PROGRESS] Recovering log 4593 through 4616"}}

{"t":{"$date":"2021-11-24T13:54:34.872+08:00"},"s":"I", "c":"STORAGE", "id":22430, "ctx":"initandlisten","msg":"WiredTiger message","attr":{"message":"[1637733274:872655][162546:0x7f913d312bc0], txn-recover: [WT_VERB_RECOVERY_PROGRESS] Recovering log 4594 through 4616"}}

{"t":{"$date":"2021-11-24T13:54:36.068+08:00"},"s":"I", "c":"STORAGE", "id":22430, "ctx":"initandlisten","msg":"WiredTiger message","attr":{"message":"[1637733276:68860][162546:0x7f913d312bc0], txn-recover: [WT_VERB_RECOVERY_PROGRESS] Recovering log 4595 through 4616"}}

{"t":{"$date":"2021-11-24T13:54:37.276+08:00"},"s":"I", "c":"STORAGE", "id":22430, "ctx":"initandlisten","msg":"WiredTiger message","attr":{"message":"[1637733277:276850][162546:0x7f913d312bc0], txn-recover: [WT_VERB_RECOVERY_PROGRESS] Recovering log 4596 through 4616"}}

{"t":{"$date":"2021-11-24T13:54:38.675+08:00"},"s":"I", "c":"STORAGE", "id":22430, "ctx":"initandlisten","msg":"WiredTiger message","attr":{"message":"[1637733278:675409][162546:0x7f913d312bc0], txn-recover: [WT_VERB_RECOVERY_PROGRESS] Recovering log 4597 through 4616"}}

{"t":{"$date":"2021-11-24T13:54:40.023+08:00"},"s":"I", "c":"STORAGE", "id":22430, "ctx":"initandlisten","msg":"WiredTiger message","attr":{"message":"[1637733280:23160][162546:0x7f913d312bc0], txn-recover: [WT_VERB_RECOVERY_PROGRESS] Recovering log 4598 through 4616"}}

{"t":{"$date":"2021-11-24T13:54:41.234+08:00"},"s":"I", "c":"STORAGE", "id":22430, "ctx":"initandlisten","msg":"WiredTiger message","attr":{"message":"[1637733281:234019][162546:0x7f913d312bc0], txn-recover: [WT_VERB_RECOVERY_PROGRESS] Recovering log 4599 through 4616"}}

{"t":{"$date":"2021-11-24T13:54:42.456+08:00"},"s":"I", "c":"STORAGE", "id":22430, "ctx":"initandlisten","msg":"WiredTiger message","attr":{"message":"[1637733282:455999][162546:0x7f913d312bc0], txn-recover: [WT_VERB_RECOVERY_PROGRESS] Recovering log 4600 through 4616"}}

About the unexpected mongod shutdown: I checked the log, and mongod was killed by the kernel. We had not set cacheSizeGB in our mongod.conf, so we have set it now and want to monitor the status. In your experience, will this work? Any more suggestions?
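To check the status after the change, I am thinking of something like the sketch below. It summarizes the WiredTiger cache section of a serverStatus result. The helper name and the sample numbers are made up for illustration, and the live usage in the comments assumes pymongo is installed and that credentials are supplied, since authorization is enabled. Note that the cache limit does not cover all memory used by mongod (for example, the index build above reports maxTemporaryMemoryUsageMB of 200).

```python
def cache_summary(server_status: dict) -> dict:
    """Summarize WiredTiger cache usage from a serverStatus document."""
    cache = server_status["wiredTiger"]["cache"]
    max_bytes = cache["maximum bytes configured"]
    used_bytes = cache["bytes currently in the cache"]
    gib = 1024 ** 3
    return {
        "max_gib": round(max_bytes / gib, 2),
        "used_gib": round(used_bytes / gib, 2),
        "used_pct": round(100 * used_bytes / max_bytes, 1),
    }


# Live usage (assumption: pymongo available, URI and credentials adjusted):
#   from pymongo import MongoClient
#   status = MongoClient("mongodb://localhost:27017").admin.command("serverStatus")
#   print(cache_summary(status))

# Hypothetical sample values, only to show the shape of the output.
sample = {
    "wiredTiger": {
        "cache": {
            "maximum bytes configured": 64 * 1024 ** 3,
            "bytes currently in the cache": 16 * 1024 ** 3,
        }
    }
}
print(cache_summary(sample))
```

If "max_gib" matches the configured cacheSizeGB after a restart, the setting has been picked up.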

Thanks very much, Stennie.

Regards,

Jake