Hi, I am using Atlas Serverless and wanted to know:

- Is the current 1TB data limit per cluster, per database, or per collection?

- Currently, the sum of my collections + indexes in a serverless cluster is about 150GB. However, my “dataSize” is 400GB. Does serverless charge for dataSize or for the actual data stored?

If the customer pays for dataSize, is there a way to decrease it? For our use case we don’t expect the data to go beyond 200GB. I'm not sure whether serverless is trying to be smart about how large the cluster should be.


Hi @Ouwen_Huang and welcome to the MongoDB Community!

The 1TB limit is per cluster. See all the other limitations here:

About the costs, you can read more about them here:

You can also check the Billing tab in Atlas to see exactly what is counted. Don’t forget that a MongoDB Atlas cluster contains your data + the oplog + the indexes + system collections. All of this needs some space. If you want to reduce your data size, you could consider archiving some data in the Data Lake. A poorly designed data model (schema) can also lead to unnecessarily large data sizes.

WiredTiger compression can mitigate this a bit, but it’s not magic either.

I hope this helps a bit.

Cheers,

Maxime.

Hi @MaBeuLux88_xxx,

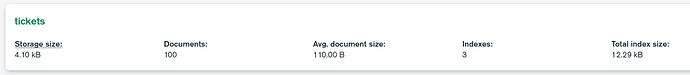

Thanks for the quick response! I am trying to debug why the storage size is so large (4x the underlying collections). dataSize was about 400GB both before and after I created a couple of small (<1GB) indexes. The oplog + system collections should be using the defaults.

I’ve also tried running the compact command on my collections via mongosh, but it doesn’t seem to have affected the size.

Hard to say without diving into the data.

Maybe check the data sizes with MongoDB Compass?

The UI gives some information about the collections & docs.

Check the oplog sizes as well with rs.printReplicationInfo().

Cheers,

Maxime.

I appreciate the debugging help. Let me know if there is anything else I should be doing. This is what db.stats() returns:

{
  db: 'dbname',
  collections: 5,
  views: 0,
  objects: Long("62852233"),
  avgObjSize: 6738.123068388039,
  dataSize: Long("423506081077"),
  storageSize: Long("104617545728"),
  totalFreeStorageSize: Long("7421489152"),
  numExtents: Long("0"),
  indexes: 10,
  indexSize: Long("15020978176"),
  indexFreeStorageSize: Long("7416700928"),
  fileSize: Long("0"),
  nsSizeMB: 0,
  ok: 1
}

rs.printReplicationInfo() gives me an error on mongos. Is there a different connection or command for serverless to get the replication info?

From what I see in these stats, you have 423 GB of uncompressed data stored in MongoDB, and WiredTiger compression reduces it to about 104 GB on disk. You also have 15 GB of indexes.

My bad about the rs.printReplicationInfo() command. It’s actually covered in the Atlas Serverless Limitations link I shared earlier: there is no access to the collections in the local database.
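To make the arithmetic behind these numbers explicit, here is a small sketch that can be pasted into mongosh or Node. The byte values are copied from the db.stats() output above as plain numbers; `toGB` and `compressionRatio` are hypothetical helper names, and 1 GB is assumed to mean 10^9 bytes.

```javascript
// Values copied from the db.stats() output above, as plain numbers (bytes).
const stats = {
  dataSize: 423506081077,    // uncompressed size of the documents
  storageSize: 104617545728, // size on disk after WiredTiger compression
  indexSize: 15020978176,    // total size of all indexes
};

// Hypothetical helper: bytes to decimal gigabytes (assumes 1 GB = 1e9 bytes).
function toGB(bytes) {
  return bytes / 1e9;
}

// Hypothetical helper: how many times smaller the data is on disk.
function compressionRatio(s) {
  return s.dataSize / s.storageSize;
}

console.log(`uncompressed data: ${toGB(stats.dataSize).toFixed(1)} GB`);
console.log(`compressed data:   ${toGB(stats.storageSize).toFixed(1)} GB`);
console.log(`indexes:           ${toGB(stats.indexSize).toFixed(1)} GB`);
console.log(`compression ratio: ${compressionRatio(stats).toFixed(2)}x`);
```

The ratio comes out at roughly 4x, which matches the gap between dataSize and the on-disk size reported earlier in the thread.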

Did you check your billing and how much data storage is billed for this cluster?

It seems like billing is for the 423GB. (I’m also unsure whether the 1TB storage limit applies to compressed disk use or to uncompressed data.)

I talked with the Atlas & Serverless team and they explained to me a bit more how it works.

So, yes, Atlas bills Serverless based on the uncompressed size of your BSON docs + the indexes. The idea behind billing on the uncompressed data rather than the compressed data is that the final price doesn’t depend on the performance of the compression algorithm that WiredTiger is using. So it’s always fair and wouldn’t change if we update the compression algorithm in the future.

If Atlas Serverless billed on the compressed size, the price per GB would have to be 4x or 5x higher, so it would look less competitive. It would also be less predictable, because compression can be more or less effective depending on your schema design, the field types, etc. That would make it harder to predict your serverless costs in advance and plan your spending.

Finally, the 1TB storage limit is based on the compressed size of the collections + indexes. Users are expected to migrate to Atlas Dedicated clusters if they come close to the limit. In the future, the team wants to raise this limit or remove it completely.
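The two different bases (uncompressed for billing, compressed for the 1TB limit) can be illustrated with the db.stats() numbers posted earlier in the thread. This is only a rough sketch under several assumptions: db.stats() covers a single database while billing and the limit apply to the whole cluster, the reported indexSize is used for both figures, and 1TB is taken as 10^12 bytes. The function names are hypothetical, and the result is not an official billing figure.

```javascript
// Values copied from the db.stats() output earlier in the thread (bytes).
const stats = {
  dataSize: 423506081077,    // uncompressed documents
  storageSize: 104617545728, // compressed documents on disk
  indexSize: 15020978176,    // indexes
};

// Assumption: the 1TB limit means 10^12 bytes.
const LIMIT_BYTES = 1e12;

// Hypothetical estimate of the billed storage: uncompressed docs + indexes.
function estimateBilledBytes(s) {
  return s.dataSize + s.indexSize;
}

// Hypothetical estimate of what counts toward the limit: compressed docs + indexes.
function estimateLimitBytes(s) {
  return s.storageSize + s.indexSize;
}

const billedGB = estimateBilledBytes(stats) / 1e9;
const limitGB = estimateLimitBytes(stats) / 1e9;
const limitPct = (estimateLimitBytes(stats) / LIMIT_BYTES) * 100;

console.log(`estimated billed storage: ${billedGB.toFixed(1)} GB`);
console.log(`counts toward 1TB limit:  ${limitGB.toFixed(1)} GB (${limitPct.toFixed(1)}%)`);
```

Under these assumptions, this database is billed for roughly 438 GB while using only about 12% of the 1TB limit.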

I hope this makes sense and is helpful!

Let me know if you have more questions of course!

Cheers,

Maxime.


@MaBeuLux88_xxx, this was very helpful. Thanks for asking on my behalf.

So far I’m quite happy with the performance of MongoDB serverless. Our use case is extremely bursty, so paying for compute use is very attractive. I did notice some issues when scaling from 10GB of inserted data to 400GB: there was some downtime, which we needed to code resiliency for. I’m guessing there may have been a resource allocation trigger happening behind the curtain.

I think I understand what you mean on cost: if billed on compressed size, the storage price would just be scaled up 4x - 5x, so it’s the same price, just a different way to view it. I would actually prefer pricing on compressed disk. “Uncompressed” data pricing encourages the customer to design around it.

Well, that’s a good thing in my opinion, because a customer who optimizes their schema design is on the right path to great performance!

Just for clarity, would there be no price difference between using WiredTiger compression and having compression turned off?

Disabling compression could increase the number of RPUs and WPUs that you consume. I'm not completely sure about that one.

But using compression is definitely a big performance boost.

It’s also the point of serverless: you don’t need to know how it’s managed in the background!

Update because the “me” of the past isn’t as smart as the new “me”.

RPUs and WPUs wouldn’t be affected by disabling compression, because they are calculated before the data reaches the storage engine. So again, they are based on the uncompressed size.
