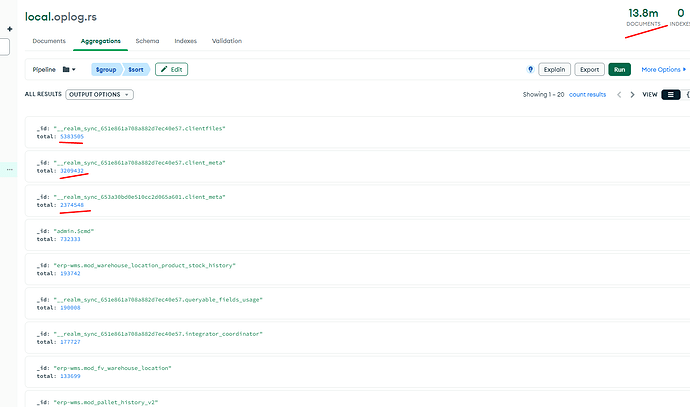

Hello, we are analyzing the oplog of our database and see that the majority of updated documents come from the clientfiles and client_meta collections: of 13.8 million entries, 11 million come from these two collections. Is it necessary for the information in these collections to be updated so frequently? Is there anything we can do to reduce this?

Hi, each of those is generally updated for every “changeset” processed. So it's possible to change your application to pack more modifications into a single changeset (a single realm.Write()), which would force the Atlas Device Sync server to process all of those writes in one integration (and thus update those documents just once). There is a downside to packing too much into a single transaction, though, so use your best judgement on how much to put in each one.
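To make the effect of batching concrete, here is a toy model in Python. It assumes, as a simplification of the description above, that every integrated changeset costs exactly one update to the uploading client's client_file document and one update to a client_meta document, no matter how many modifications the changeset contains. The names (`MetadataWrites`, `group_into_changesets`, `integrate`) are hypothetical; this is not how the sync server is actually implemented.

```python
from dataclasses import dataclass


@dataclass
class MetadataWrites:
    """Counts of metadata updates in the toy model (hypothetical names)."""
    client_file_updates: int = 0
    client_meta_updates: int = 0


def group_into_changesets(modifications: list[str], per_write: int) -> list[list[str]]:
    """Pack `per_write` modifications into each write transaction (one changeset each)."""
    return [modifications[i:i + per_write] for i in range(0, len(modifications), per_write)]


def integrate(changesets: list[list[str]]) -> MetadataWrites:
    """Simplifying assumption: one client_file update and one client_meta update
    per integrated changeset, independent of the changeset's size."""
    writes = MetadataWrites()
    for _changeset in changesets:
        writes.client_file_updates += 1
        writes.client_meta_updates += 1
    return writes


mods = [f"update-{i}" for i in range(1_000)]
one_per_write = integrate(group_into_changesets(mods, per_write=1))
fifty_per_write = integrate(group_into_changesets(mods, per_write=50))
print(one_per_write.client_file_updates, fifty_per_write.client_file_updates)  # 1000 20
```

In this model the metadata cost scales with the number of write transactions, not the number of modifications. The trade-off mentioned above still applies: bigger writes mean bigger changesets to upload and integrate at once.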

As a side note, these writes are very small and cheap. While I would expect them to account for a lot of entries in the oplog, I would expect them to account for a much smaller percentage of the oplog's total size.

Best,

Tyler

So, if I understood you correctly, every time we save a transaction, each user who processes that change makes an entry in client_meta and clientfiles. That's why, although the history shows 227,000 changes over 24 hours, we have 5.4 million clientfiles in the oplog.

This raises another question: if the change occurs in a document that is not within the client's subscription (whether because of a filter on the client or in the App Service), should a commit still be made for that document?

What is the purpose of each client saving the “changeset” it has processed in the database? Couldn't it commit this less frequently? I thought the file saved on the client already recorded which changesets it has processed, so it could tell the server where to resume. It seems to be for statistical use, but regardless of how much of the oplog's size it takes up, it represents 80% of the cluster's inserts, and that concerns us.

Sorry, just to be clear: those documents are updated (not inserted) each time an upload is processed. The number of client_file documents is equal to the number of unique realms that are opened (i.e., every client that connects and syncs data). When that client uploads data, we update a progress field on its client_file document so that we can transactionally guarantee we never integrate the same changeset twice (a re-upload can easily happen if the client disconnects and reconnects to another server before its upload has been acknowledged). This document is also updated each time the client connects to the server.

The number of client_meta documents is equal to either (a) the number of partitions if you are using partition sync or (b) a constant of 32 or 64 (depending on cluster size) if you are using flexible sync. This document is updated each time a change is applied to MongoDB.

So, if I understand correctly, you do not have 5 million clientfiles documents; those documents have just been updated 5 million times (and each of those updates should be quite small).
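The difference between the number of documents and the number of oplog entries can be illustrated with a small Python sketch. It models the client_file collection as a dictionary keyed by a hypothetical client file ID: the first upload from a client creates its document, and every later upload only overwrites a progress field in place. The function name and the progress value are assumptions for illustration, and the model ignores the extra update that happens on each connection.

```python
def apply_uploads(uploads: list[tuple[str, int]]) -> tuple[dict[str, int], list[str]]:
    """Toy model of client_file progress tracking.

    `uploads` is a list of (client_file_id, client_version) pairs. Each upload
    writes the progress field of that client's single document, and the type of
    write ("insert" or "update") is appended to an oplog-like list.
    """
    client_files: dict[str, int] = {}
    oplog: list[str] = []
    for client_id, version in uploads:
        op = "update" if client_id in client_files else "insert"
        client_files[client_id] = version  # one document per client, overwritten in place
        oplog.append(op)
    return client_files, oplog


uploads = [("client-a", v) for v in range(1, 5)] + [("client-b", v) for v in range(1, 4)]
docs, oplog = apply_uploads(uploads)
print(len(docs), oplog.count("insert"), oplog.count("update"))  # 2 2 5
```

Two documents exist, but seven oplog entries were written; at scale, the same pattern yields millions of updates against a much smaller number of client_file documents.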

As for your question about why the server needs to keep this information, the issue is that the client and the server might not always agree on what has been processed.

In the ideal situation, this is what happens:

- Client makes a write and uploads it to the server

- Server processes the upload and, in a subsequent download message, acknowledges to the client that it has processed the most recent write (we use per-client versioning semantics)

- Client and the server have the same view of what has been successfully uploaded
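The ideal flow can be sketched as a client that numbers its local changesets with increasing versions and a server that acknowledges the highest version it has integrated. This is a simplified Python model with assumed names (`Client`, `Server`, `upload`, `last_acked`); the real sync protocol messages carry considerably more information.

```python
from dataclasses import dataclass, field


@dataclass
class Server:
    integrated: list[tuple[int, str]] = field(default_factory=list)

    def upload(self, changesets: list[tuple[int, str]]) -> int:
        """Integrate the uploaded changesets and return the client version to acknowledge."""
        self.integrated.extend(changesets)
        return changesets[-1][0]


@dataclass
class Client:
    next_version: int = 1
    pending: list[tuple[int, str]] = field(default_factory=list)
    last_acked: int = 0

    def write(self, change: str) -> None:
        """A local write transaction produces a changeset tagged with the next client version."""
        self.pending.append((self.next_version, change))
        self.next_version += 1

    def sync(self, server: Server) -> None:
        to_send = [cs for cs in self.pending if cs[0] > self.last_acked]
        if not to_send:
            return
        ack = server.upload(to_send)  # upload message
        self.last_acked = ack         # in the real protocol this arrives in a later download message
        self.pending = [cs for cs in self.pending if cs[0] > ack]


server, client = Server(), Client()
client.write("set name = 'a'")
client.write("set name = 'b'")
client.sync(server)
print(client.last_acked, len(server.integrated), client.pending)  # 2 2 []
```

After the acknowledgment, both sides agree that versions 1 and 2 have been uploaded, and the client has nothing left to send.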

Now, when you inject network issues, this is what can happen:

- Client makes a write and uploads it to the server

- Server processes the upload and commits it, then tries to send an ACK to the client, but the client has already disconnected

- The client reconnects to the server (perhaps after some time). Because it never received the ACK, it is not sure whether the server has seen the write it previously uploaded, so it re-uploads it

- The server needs to know if it has processed this write already. This is where we use the information being updated in the client file on each upload
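The failure case is why the server persists per-client progress. Below is a Python sketch in which the server records, for each client file, the highest client version it has integrated (standing in for the progress field on the client_file document) and skips anything at or below that version when a client re-uploads after a lost ACK. `SyncServer`, `progress`, and `handle_upload` are hypothetical names. The reply above describes this progress update as transactional; the model only mimics that by updating progress in the same call that applies the changes.

```python
from dataclasses import dataclass, field


@dataclass
class SyncServer:
    # client_file_id -> highest client version integrated (stand-in for client_file progress)
    progress: dict[str, int] = field(default_factory=dict)
    applied: list[str] = field(default_factory=list)

    def handle_upload(self, client_id: str, changesets: list[tuple[int, str]]) -> int:
        """Integrate only changesets newer than the recorded progress, then return the ACK."""
        done = self.progress.get(client_id, 0)
        for version, change in changesets:
            if version <= done:
                continue  # already integrated before the disconnect: skip the duplicate
            self.applied.append(change)
            done = version
        # Recording progress alongside the applied changes is what makes re-uploads safe.
        self.progress[client_id] = done
        return done


server = SyncServer()
batch = [(1, "insert task"), (2, "update task")]

server.handle_upload("client-a", batch)        # ACK is lost: the client disconnects
ack = server.handle_upload("client-a", batch)  # client reconnects and re-uploads
print(ack, server.applied)  # 2 ['insert task', 'update task']
```

Without the stored progress, the second call would apply both changes again; with it, the re-upload is recognized as a duplicate and the server simply re-sends the acknowledgment.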

Let me know if that makes sense or if you have any other questions. Also, there is obviously a lot missing from this page, but I still think it might be helpful for you if you are curious: https://www.mongodb.com/docs/atlas/app-services/sync/details/protocol/#client-file-identifier

Best,

Tyler