Hey everyone.

New to MongoDB but have only heard excellent things so I am excited!

I’m using Azure Cosmos DB API for MongoDB.

My business recently needed to start recording time-series data: specifically, temperatures from 24 different points every 1 to 2 seconds, for 8 hours a day. In other words, millions of documents. We originally recorded to CSV but quickly discovered that time series and MongoDB were a much better option.

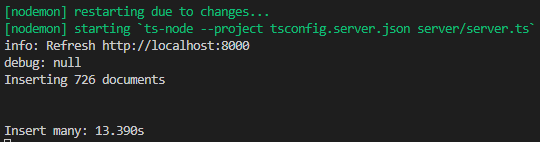

As a result of this late realization, I have about 300,000 temperature points already recorded in a CSV (new points are being stored directly in the database), so I need to insert these 300,000. However, my insertMany calls take an absurd amount of time. I have tried multiple POST requests with smaller chunks, different schemas, a collection for each point, all points under one collection, etc. Nothing works: inserting all 300,000 would take hours.

I am sure I am doing something fundamentally wrong. I can wait out the hours, but that seems wrong.

My insert many function:

    router.post("/insert-many", async (req, res, next) => {
      try {
        // The request body is expected to be an array of time-series documents.
        const timeSeries = req.body;
        const result = await BMTempsTimeSeries.insertMany(timeSeries);
        res.status(200).json({ result });
      } catch (err) {
        // Pass failures to the Express error handler instead of replying with 200.
        next(err);
      }
    });
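For reference, the "smaller chunks" variant mentioned above looks roughly like the following. This is a minimal sketch, not my exact code: the helper names `chunk` and `insertInBatches` and the batch size of 1000 are assumptions chosen for illustration, and `model` stands for anything exposing Mongoose's `insertMany(docs, options)`, such as `BMTempsTimeSeries`.

```javascript
// Split an array into consecutive slices of at most `size` items.
function chunk(rows, size) {
  if (!Number.isInteger(size) || size <= 0) {
    throw new RangeError("size must be a positive integer");
  }
  const batches = [];
  for (let i = 0; i < rows.length; i += size) {
    batches.push(rows.slice(i, i + size));
  }
  return batches;
}

// Insert rows one batch at a time and return how many documents were inserted.
// `model` is assumed to expose insertMany(docs, options), like a Mongoose model.
// ordered: false asks for an unordered insert, so one bad document does not
// stop the rest of its batch.
async function insertInBatches(model, rows, batchSize = 1000) {
  let inserted = 0;
  for (const batch of chunk(rows, batchSize)) {
    const docs = await model.insertMany(batch, { ordered: false });
    inserted += docs.length;
  }
  return inserted;
}
```

Calling it would look like `await insertInBatches(BMTempsTimeSeries, rows, 1000)`, where `rows` is the array parsed from the CSV. Batches are sent sequentially here to keep the sketch simple; whether smaller or larger batches help on Cosmos DB is exactly what I have not been able to work out.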

My model with each temperature channel:

    import mongoose from "mongoose";

    const mongoSchema = new mongoose.Schema(
      {
        timestamp: String,
        // 24 temperature channels: A1 to A12 and B1 to B12.
        A1: String,
        A2: String,
        A3: String,
        A4: String,
        A5: String,
        A6: String,
        A7: String,
        A8: String,
        A9: String,
        A10: String,
        A11: String,
        A12: String,
        B1: String,
        B2: String,
        B3: String,
        B4: String,
        B5: String,
        B6: String,
        B7: String,
        B8: String,
        B9: String,
        B10: String,
        B11: String,
        B12: String,
      },
      {
        timeseries: {
          timeField: "timestamp",
          granularity: "seconds",
        },
      }
    );

    export const BMTempsTimeSeries = mongoose.models.BMTempsTimeSeries