Hi guys, we have a collection in MongoDB Atlas with 3 million documents, and we need to export them to CSV using Node.js. We cannot use mongoexport or mongodump, because the export must be implemented as an API.

For this, we are working with the fast-csv library, but the fastcsv.write() method expects an array as the input data for creating the CSV.

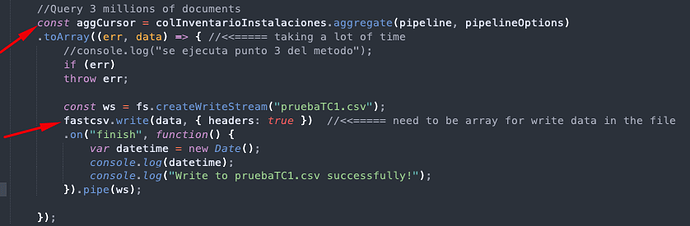

The problem is that converting the 3 million documents returned by the MongoDB query into an array consumes a lot of time and memory.

Could you give us a hand figuring out how to implement this so that it works as efficiently as possible?

Here is a sample of the code we are testing.

P.S. Questions that can also help us:

- Do you know of any library or way to do this more efficiently?

- Is there a way to avoid converting the data returned by the query to an array before it is written to the CSV file?
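
To make the second question concrete, here is a minimal sketch of the kind of streaming approach we have in mind, where documents flow to the output one at a time instead of being collected into an array first. It uses only Node's built-in stream module so that it runs on its own. The names `csvField`, `csvFormatter`, `fakeCursor`, and `exportToCsv` are hypothetical and not part of our real code. We assume that in a real version the cursor returned by `collection.find()` would take the place of `fakeCursor` (the driver's cursor is async iterable), and that `fastcsv.format({ headers: true })` could take the place of `csvFormatter`, but we have not verified this at our data size.

```javascript
const { Readable, Transform } = require('node:stream');
const { pipeline } = require('node:stream/promises');

// Escape one value: quote it when it contains a comma, a quote, or a line break.
function csvField(value) {
  const text = value === null || value === undefined ? '' : String(value);
  return /[",\r\n]/.test(text) ? `"${text.replace(/"/g, '""')}"` : text;
}

// Transform stream: one document in, one CSV line out.
// Hypothetical stand-in for a real CSV formatter stream.
function csvFormatter(columns) {
  let headerWritten = false;
  return new Transform({
    writableObjectMode: true, // accepts documents, emits text
    transform(doc, _encoding, callback) {
      let out = '';
      if (!headerWritten) {
        out += columns.map(csvField).join(',') + '\n';
        headerWritten = true;
      }
      out += columns.map((name) => csvField(doc[name])).join(',') + '\n';
      callback(null, out);
    },
  });
}

// Hypothetical stand-in for a database cursor: yields documents lazily,
// so only one document is held by this generator at a time.
async function* fakeCursor(count) {
  for (let i = 0; i < count; i++) {
    yield { _id: i, name: `user, ${i}` };
  }
}

// Pipe the documents into `destination` (any writable stream, for example
// a file stream or an HTTP response). pipeline() applies backpressure and
// rejects if any stage fails.
async function exportToCsv(cursor, columns, destination) {
  await pipeline(Readable.from(cursor), csvFormatter(columns), destination);
}
```

In an API handler, the idea would be to call something like `exportToCsv(cursor, ['_id', 'name'], res)`, since an HTTP response is a writable stream. Because each stage only pulls more data when the next one is ready, memory use should stay roughly constant regardless of the collection size, at least in principle.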

Thank you.