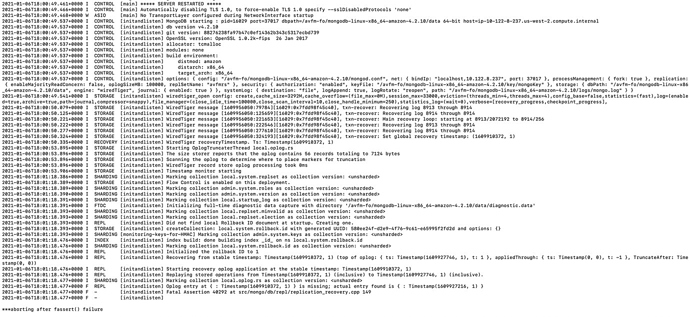

Facing following error while configuring the replica set.

2021-01-06T10:09:12.674+0000 I CONTROL [main] ***** SERVER RESTARTED *****

2021-01-06T10:09:12.679+0000 I CONTROL [main] Automatically disabling TLS 1.0, to force-enable TLS 1.0 specify --sslDisabledProtocols 'none'

2021-01-06T10:09:12.681+0000 W ASIO [main] No TransportLayer configured during NetworkInterface startup

2021-01-06T10:09:12.750+0000 I CONTROL [initandlisten] MongoDB starting : pid=14056 port=37017 dbpath=/avfm-fo/mongodb-linux-x86_64-amazon-4.2.10/data 64-bit host=ip-10-122-8-237.us-west-2.compute.internal

2021-01-06T10:09:12.750+0000 I CONTROL [initandlisten] db version v4.2.10

2021-01-06T10:09:12.750+0000 I CONTROL [initandlisten] git version: 88276238fa97b47c0ef14362b343c5317ecbd739

2021-01-06T10:09:12.750+0000 I CONTROL [initandlisten] OpenSSL version: OpenSSL 1.0.2k-fips 26 Jan 2017

2021-01-06T10:09:12.750+0000 I CONTROL [initandlisten] allocator: tcmalloc

2021-01-06T10:09:12.750+0000 I CONTROL [initandlisten] modules: none

2021-01-06T10:09:12.750+0000 I CONTROL [initandlisten] build environment:

2021-01-06T10:09:12.750+0000 I CONTROL [initandlisten] distmod: amazon

2021-01-06T10:09:12.750+0000 I CONTROL [initandlisten] distarch: x86_64

2021-01-06T10:09:12.750+0000 I CONTROL [initandlisten] target_arch: x86_64

2021-01-06T10:09:12.750+0000 I CONTROL [initandlisten] options: { config: "/avfm-fo/mongodb-linux-x86_64-amazon-4.2.10/mongod.conf", net: { bindIp: "localhost,10.122.8.237", port: 37017 }, processManagement: { fork: true }, replication: { enableMajorityReadConcern: false, oplogSizeMB: 100000, replSetName: "avfmrs" }, security: { authorization: "enabled", keyFile: "/avfm-fo/mongodb-linux-x86_64-amazon-4.2.10/key/mongoKey" }, storage: { dbPath: "/avfm-fo/mongodb-linux-x86_64-amazon-4.2.10/data", engine: "wiredTiger", journal: { enabled: true } }, systemLog: { destination: "file", logAppend: true, logRotate: "reopen", path: "/avfm-fo/mongodb-linux-x86_64-amazon-4.2.10/logs/mongo.log" } }

2021-01-06T10:09:12.753+0000 I STORAGE [initandlisten] wiredtiger_open config: create,cache_size=3292M,cache_overflow=(file_max=0M),session_max=33000,eviction=(threads_min=4,threads_max=4),config_base=false,statistics=(fast),log=(enabled=true,archive=true,path=journal,compressor=snappy),file_manager=(close_idle_time=100000,close_scan_interval=10,close_handle_minimum=250),statistics_log=(wait=0),verbose=[recovery_progress,checkpoint_progress],

2021-01-06T10:09:13.318+0000 I STORAGE [initandlisten] WiredTiger message [1609927753:318114][14056:0x7fd076be1c40], txn-recover: Recovering log 8909 through 8910

2021-01-06T10:09:13.377+0000 I STORAGE [initandlisten] WiredTiger message [1609927753:377076][14056:0x7fd076be1c40], txn-recover: Recovering log 8910 through 8910

2021-01-06T10:09:13.513+0000 I STORAGE [initandlisten] WiredTiger message [1609927753:513533][14056:0x7fd076be1c40], txn-recover: Main recovery loop: starting at 8909/39040 to 8910/256

2021-01-06T10:09:13.514+0000 I STORAGE [initandlisten] WiredTiger message [1609927753:514945][14056:0x7fd076be1c40], txn-recover: Recovering log 8909 through 8910

2021-01-06T10:09:13.605+0000 I STORAGE [initandlisten] WiredTiger message [1609927753:605598][14056:0x7fd076be1c40], txn-recover: Recovering log 8910 through 8910

2021-01-06T10:09:13.662+0000 I STORAGE [initandlisten] WiredTiger message [1609927753:662821][14056:0x7fd076be1c40], txn-recover: Set global recovery timestamp: (1609910372, 1)

2021-01-06T10:09:13.676+0000 I RECOVERY [initandlisten] WiredTiger recoveryTimestamp. Ts: Timestamp(1609910372, 1)

2021-01-06T10:09:17.294+0000 I STORAGE [initandlisten] Starting OplogTruncaterThread local.oplog.rs

2021-01-06T10:09:17.294+0000 I STORAGE [initandlisten] The size storer reports that the oplog contains 56 records totaling to 7124 bytes

2021-01-06T10:09:17.294+0000 I STORAGE [initandlisten] Scanning the oplog to determine where to place markers for truncation

2021-01-06T10:09:17.295+0000 I STORAGE [initandlisten] WiredTiger record store oplog processing took 0ms

2021-01-06T10:09:17.305+0000 I STORAGE [initandlisten] Timestamp monitor starting

2021-01-06T10:09:41.883+0000 I SHARDING [initandlisten] Marking collection local.system.replset as collection version: <unsharded>

2021-01-06T10:09:41.886+0000 I STORAGE [initandlisten] Flow Control is enabled on this deployment.

2021-01-06T10:09:41.886+0000 I SHARDING [initandlisten] Marking collection admin.system.roles as collection version: <unsharded>

2021-01-06T10:09:41.887+0000 I SHARDING [initandlisten] Marking collection admin.system.version as collection version: <unsharded>

2021-01-06T10:09:41.888+0000 I SHARDING [initandlisten] Marking collection local.startup_log as collection version: <unsharded>

2021-01-06T10:09:41.889+0000 I FTDC [initandlisten] Initializing full-time diagnostic data capture with directory '/avfm-fo/mongodb-linux-x86_64-amazon-4.2.10/data/diagnostic.data'

2021-01-06T10:09:41.890+0000 I SHARDING [initandlisten] Marking collection local.replset.minvalid as collection version: <unsharded>

2021-01-06T10:09:41.890+0000 I SHARDING [initandlisten] Marking collection local.replset.election as collection version: <unsharded>

2021-01-06T10:09:41.891+0000 I REPL [initandlisten] Did not find local Rollback ID document at startup. Creating one.

2021-01-06T10:09:41.891+0000 I STORAGE [initandlisten] createCollection: local.system.rollback.id with generated UUID: fb814c0d-2acc-4b7f-b6c2-009b466da3f9 and options: {}

2021-01-06T10:09:41.891+0000 I SHARDING [monitoring-keys-for-HMAC] Marking collection admin.system.keys as collection version: <unsharded>

2021-01-06T10:09:42.033+0000 I INDEX [initandlisten] index build: done building index _id_ on ns local.system.rollback.id

2021-01-06T10:09:42.033+0000 I SHARDING [ftdc] Marking collection local.oplog.rs as collection version: <unsharded>

2021-01-06T10:09:42.033+0000 I SHARDING [initandlisten] Marking collection local.system.rollback.id as collection version: <unsharded>

2021-01-06T10:09:42.033+0000 I REPL [initandlisten] Initialized the rollback ID to 1

2021-01-06T10:09:42.034+0000 I REPL [initandlisten] Recovering from stable timestamp: Timestamp(1609910372, 1) (top of oplog: { ts: Timestamp(1609927746, 1), t: 1 }, appliedThrough: { ts: Timestamp(0, 0), t: -1 }, TruncateAfter: Timestamp(0, 0))

2021-01-06T10:09:42.034+0000 I REPL [initandlisten] Starting recovery oplog application at the stable timestamp: Timestamp(1609910372, 1)

2021-01-06T10:09:42.034+0000 I REPL [initandlisten] Replaying stored operations from Timestamp(1609910372, 1) (inclusive) to Timestamp(1609927746, 1) (inclusive).

2021-01-06T10:09:42.034+0000 F REPL [initandlisten] Oplog entry at { : Timestamp(1609910372, 1) } is missing; actual entry found is { : Timestamp(1609927216, 1) }

2021-01-06T10:09:42.034+0000 F - [initandlisten] Fatal Assertion 40292 at src/mongo/db/repl/replication_recovery.cpp 149

2021-01-06T10:09:42.034+0000 F - [initandlisten]

***aborting after fassert() failure