Hello all,

I've set up a Docker environment to create a MongoDB replica set with this Docker Compose file:

    version: '3'

    services:
      mongo1:
        hostname: mongo1
        image: mongo
        env_file: .env
        expose:
          - 27017
        environment:
          - MONGO_INITDB_DATABASE=${MONGO_INITDB_DATABASE}
          - MONGO_INITDB_ROOT_USERNAME=${MONGO_INITDB_ROOT_USERNAME}
          - MONGO_INITDB_ROOT_PASSWORD=${MONGO_INITDB_ROOT_PASSWORD}
        networks:
          - mongo-network
        ports:
          - 172.16.50.15:27017:27017
        volumes:
          - ./db-data1:/data/db
          - ./replica.key:/etc/replica.key
        restart: always
        command: mongod --replSet my-mongo-set --keyFile /etc/replica.key --bind_ip_all

      mongo2:
        hostname: mongo2
        image: mongo
        env_file: .env
        expose:
          - 27017
        environment:
          - MONGO_INITDB_DATABASE=${MONGO_INITDB_DATABASE}
          - MONGO_INITDB_ROOT_USERNAME=${MONGO_INITDB_ROOT_USERNAME}
          - MONGO_INITDB_ROOT_PASSWORD=${MONGO_INITDB_ROOT_PASSWORD}
        networks:
          - mongo-network
        ports:
          - 172.16.50.16:27017:27017
        volumes:
          - ./db-data2:/data/db
          - ./replica.key:/etc/replica.key
        restart: always
        command: mongod --replSet my-mongo-set --keyFile /etc/replica.key --bind_ip_all

      mongo3:
        hostname: mongo3
        image: mongo
        env_file: .env
        expose:
          - 27017
        environment:
          - MONGO_INITDB_DATABASE=${MONGO_INITDB_DATABASE}
          - MONGO_INITDB_ROOT_USERNAME=${MONGO_INITDB_ROOT_USERNAME}
          - MONGO_INITDB_ROOT_PASSWORD=${MONGO_INITDB_ROOT_PASSWORD}
        networks:
          - mongo-network
        ports:
          - 172.16.50.17:27017:27017
        volumes:
          - ./db-data3:/data/db
          - ./replica.key:/etc/replica.key
        restart: always
        command: mongod --replSet my-mongo-set --keyFile /etc/replica.key --bind_ip_all

      mongoinit:
        image: mongo
        hostname: mongo
        env_file: .env
        networks:
          - mongo-network
        restart: "no"
        depends_on:
          - mongo1
          - mongo2
          - mongo3
        # command: tail -F anything
        command: >
          mongosh --host 172.16.50.15:27017 --username ${MONGO_INITDB_ROOT_USERNAME} --password ${MONGO_INITDB_ROOT_PASSWORD} --eval
          '
          config = {
            "_id" : "my-mongo-set",
            "members" : [
              {
                "_id" : 0,
                "host" : "172.16.50.15:27017",
                "priority": 3
              },
              {
                "_id" : 1,
                "host" : "172.16.50.16:27017",
                "priority": 2
              },
              {
                "_id" : 2,
                "host" : "172.16.50.17:27017",
                "priority": 1
              }
            ]
          };
          rs.initiate(config, { force: true });
          rs.status();
          '

    volumes:
      db-data1:
      db-data2:
      db-data3:

    networks:
      mongo-network:
        driver: bridge

My environment is running, but I don't understand why my documents are only on the primary and are not copied to the secondary databases.

This is the rs.status() output on the primary:

    {
      set: 'my-mongo-set',
      date: 2023-10-26T09:29:47.471Z,
      myState: 1,
      term: Long("1"),
      syncSourceHost: '',
      syncSourceId: -1,
      heartbeatIntervalMillis: Long("2000"),
      majorityVoteCount: 2,
      writeMajorityCount: 2,
      votingMembersCount: 3,
      writableVotingMembersCount: 3,
      optimes: {
        lastCommittedOpTime: { ts: Timestamp({ t: 1698312587, i: 1 }), t: Long("1") },
        lastCommittedWallTime: 2023-10-26T09:29:47.126Z,
        readConcernMajorityOpTime: { ts: Timestamp({ t: 1698312587, i: 1 }), t: Long("1") },
        appliedOpTime: { ts: Timestamp({ t: 1698312587, i: 1 }), t: Long("1") },
        durableOpTime: { ts: Timestamp({ t: 1698312587, i: 1 }), t: Long("1") },
        lastAppliedWallTime: 2023-10-26T09:29:47.126Z,
        lastDurableWallTime: 2023-10-26T09:29:47.126Z
      },
      lastStableRecoveryTimestamp: Timestamp({ t: 1698312557, i: 1 }),
      electionCandidateMetrics: {
        lastElectionReason: 'electionTimeout',
        lastElectionDate: 2023-10-26T09:22:36.919Z,
        electionTerm: Long("1"),
        lastCommittedOpTimeAtElection: { ts: Timestamp({ t: 1698312145, i: 1 }), t: Long("-1") },
        lastSeenOpTimeAtElection: { ts: Timestamp({ t: 1698312145, i: 1 }), t: Long("-1") },
        numVotesNeeded: 2,
        priorityAtElection: 3,
        electionTimeoutMillis: Long("10000"),
        numCatchUpOps: Long("0"),
        newTermStartDate: 2023-10-26T09:22:37.059Z,
        wMajorityWriteAvailabilityDate: 2023-10-26T09:22:37.674Z
      },
      members: [
        {
          _id: 0,
          name: '172.16.50.15:27017',
          health: 1,
          state: 1,
          stateStr: 'PRIMARY',
          uptime: 445,
          optime: [Object],
          optimeDate: 2023-10-26T09:29:47.000Z,
          lastAppliedWallTime: 2023-10-26T09:29:47.126Z,
          lastDurableWallTime: 2023-10-26T09:29:47.126Z,
          syncSourceHost: '',
          syncSourceId: -1,
          infoMessage: '',
          electionTime: Timestamp({ t: 1698312156, i: 1 }),
          electionDate: 2023-10-26T09:22:36.000Z,
          configVersion: 1,
          configTerm: 1,
          self: true,
          lastHeartbeatMessage: ''
        },
        {
          _id: 1,
          name: '172.16.50.16:27017',
          health: 1,
          state: 2,
          stateStr: 'SECONDARY',
          uptime: 441,
          optime: [Object],
          optimeDurable: [Object],
          optimeDate: 2023-10-26T09:29:37.000Z,
          optimeDurableDate: 2023-10-26T09:29:37.000Z,
          lastAppliedWallTime: 2023-10-26T09:29:47.126Z,
          lastDurableWallTime: 2023-10-26T09:29:47.126Z,
          lastHeartbeat: 2023-10-26T09:29:45.731Z,
          lastHeartbeatRecv: 2023-10-26T09:29:46.705Z,
          pingMs: Long("1"),
          lastHeartbeatMessage: '',
          syncSourceHost: '172.16.50.15:27017',
          syncSourceId: 0,
          infoMessage: '',
          configVersion: 1,
          configTerm: 1
        },
        {
          _id: 2,
          name: '172.16.50.17:27017',
          health: 1,
          state: 2,
          stateStr: 'SECONDARY',
          uptime: 442,
          optime: [Object],
          optimeDurable: [Object],
          optimeDate: 2023-10-26T09:29:37.000Z,
          optimeDurableDate: 2023-10-26T09:29:37.000Z,
          lastAppliedWallTime: 2023-10-26T09:29:47.126Z,
          lastDurableWallTime: 2023-10-26T09:29:47.126Z,
          lastHeartbeat: 2023-10-26T09:29:45.731Z,
          lastHeartbeatRecv: 2023-10-26T09:29:46.706Z,
          pingMs: Long("1"),
          lastHeartbeatMessage: '',
          syncSourceHost: '172.16.50.15:27017',
          syncSourceId: 0,
          infoMessage: '',
          configVersion: 1,
          configTerm: 1
        }
      ],
      ok: 1,
      '$clusterTime': {
        clusterTime: Timestamp({ t: 1698312587, i: 1 }),
        signature: {
          hash: Binary.createFromBase64("ib1dE3JOZYGqKad6qnbOxj2NWXo=", 0),
          keyId: Long("7294195172714217475")
        }
      },
      operationTime: Timestamp({ t: 1698312587, i: 1 })
    }
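
One way to read the output above is to compare each member's optimeDate with the primary's. The helper below is only a rough sketch in plain JavaScript: `lagSeconds` is a made-up name, not a mongosh built-in, and it assumes the object has the same `members`, `name`, `stateStr` and `optimeDate` fields that appear in the output. The sample object contains only values copied from that output.

```javascript
// Hypothetical helper (not part of mongosh): estimates how many seconds each
// member is behind the primary, using only fields visible in rs.status().
function lagSeconds(status) {
  const primary = status.members.find((m) => m.stateStr === "PRIMARY");
  if (!primary) {
    throw new Error("no PRIMARY member in status");
  }
  const primaryTime = new Date(primary.optimeDate).getTime();
  const lag = {};
  for (const m of status.members) {
    // Difference between the primary's last applied op and this member's.
    lag[m.name] = (primaryTime - new Date(m.optimeDate).getTime()) / 1000;
  }
  return lag;
}

// Sample values copied from the primary's output above (other fields omitted).
const sample = {
  members: [
    { name: "172.16.50.15:27017", stateStr: "PRIMARY", optimeDate: "2023-10-26T09:29:47.000Z" },
    { name: "172.16.50.16:27017", stateStr: "SECONDARY", optimeDate: "2023-10-26T09:29:37.000Z" },
    { name: "172.16.50.17:27017", stateStr: "SECONDARY", optimeDate: "2023-10-26T09:29:37.000Z" },
  ],
};

console.log(lagSeconds(sample));
```

With the sample values this reports 0 for the primary and 10 for each secondary. Inside mongosh the same function could presumably be called as `lagSeconds(rs.status())`; mongosh also ships `rs.printSecondaryReplicationInfo()`, which prints a similar summary.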

And this is the rs.status() output on a secondary:

    {
      set: 'my-mongo-set',
      date: 2023-10-26T09:30:03.394Z,
      myState: 2,
      term: Long("1"),
      syncSourceHost: '172.16.50.15:27017',
      syncSourceId: 0,
      heartbeatIntervalMillis: Long("2000"),
      majorityVoteCount: 2,
      writeMajorityCount: 2,
      votingMembersCount: 3,
      writableVotingMembersCount: 3,
      optimes: {
        lastCommittedOpTime: { ts: Timestamp({ t: 1698312597, i: 1 }), t: Long("1") },
        lastCommittedWallTime: 2023-10-26T09:29:57.126Z,
        readConcernMajorityOpTime: { ts: Timestamp({ t: 1698312597, i: 1 }), t: Long("1") },
        appliedOpTime: { ts: Timestamp({ t: 1698312597, i: 1 }), t: Long("1") },
        durableOpTime: { ts: Timestamp({ t: 1698312597, i: 1 }), t: Long("1") },
        lastAppliedWallTime: 2023-10-26T09:29:57.126Z,
        lastDurableWallTime: 2023-10-26T09:29:57.126Z
      },
      lastStableRecoveryTimestamp: Timestamp({ t: 1698312577, i: 1 }),
      electionParticipantMetrics: {
        votedForCandidate: true,
        electionTerm: Long("1"),
        lastVoteDate: 2023-10-26T09:22:36.925Z,
        electionCandidateMemberId: 0,
        voteReason: '',
        lastAppliedOpTimeAtElection: { ts: Timestamp({ t: 1698312145, i: 1 }), t: Long("-1") },
        maxAppliedOpTimeInSet: { ts: Timestamp({ t: 1698312145, i: 1 }), t: Long("-1") },
        priorityAtElection: 1,
        newTermStartDate: 2023-10-26T09:22:37.059Z,
        newTermAppliedDate: 2023-10-26T09:22:37.675Z
      },
      members: [
        {
          _id: 0,
          name: '172.16.50.15:27017',
          health: 1,
          state: 1,
          stateStr: 'PRIMARY',
          uptime: 457,
          optime: [Object],
          optimeDurable: [Object],
          optimeDate: 2023-10-26T09:29:57.000Z,
          optimeDurableDate: 2023-10-26T09:29:57.000Z,
          lastAppliedWallTime: 2023-10-26T09:29:57.126Z,
          lastDurableWallTime: 2023-10-26T09:29:57.126Z,
          lastHeartbeat: 2023-10-26T09:30:02.732Z,
          lastHeartbeatRecv: 2023-10-26T09:30:01.754Z,
          pingMs: Long("1"),
          lastHeartbeatMessage: '',
          syncSourceHost: '',
          syncSourceId: -1,
          infoMessage: '',
          electionTime: Timestamp({ t: 1698312156, i: 1 }),
          electionDate: 2023-10-26T09:22:36.000Z,
          configVersion: 1,
          configTerm: 1
        },
        {
          _id: 1,
          name: '172.16.50.16:27017',
          health: 1,
          state: 2,
          stateStr: 'SECONDARY',
          uptime: 457,
          optime: [Object],
          optimeDurable: [Object],
          optimeDate: 2023-10-26T09:29:57.000Z,
          optimeDurableDate: 2023-10-26T09:29:57.000Z,
          lastAppliedWallTime: 2023-10-26T09:29:57.126Z,
          lastDurableWallTime: 2023-10-26T09:29:57.126Z,
          lastHeartbeat: 2023-10-26T09:30:02.265Z,
          lastHeartbeatRecv: 2023-10-26T09:30:02.731Z,
          pingMs: Long("2"),
          lastHeartbeatMessage: '',
          syncSourceHost: '172.16.50.15:27017',
          syncSourceId: 0,
          infoMessage: '',
          configVersion: 1,
          configTerm: 1
        },
        {
          _id: 2,
          name: '172.16.50.17:27017',
          health: 1,
          state: 2,
          stateStr: 'SECONDARY',
          uptime: 461,
          optime: [Object],
          optimeDate: 2023-10-26T09:29:57.000Z,
          lastAppliedWallTime: 2023-10-26T09:29:57.126Z,
          lastDurableWallTime: 2023-10-26T09:29:57.126Z,
          syncSourceHost: '172.16.50.15:27017',
          syncSourceId: 0,
          infoMessage: '',
          configVersion: 1,
          configTerm: 1,
          self: true,
          lastHeartbeatMessage: ''
        }
      ],
      ok: 1,
      '$clusterTime': {
        clusterTime: Timestamp({ t: 1698312597, i: 1 }),
        signature: {
          hash: Binary.createFromBase64("IVAPZTToW+9cNtRIV/PiQSvQQaM=", 0),
          keyId: Long("7294195172714217475")
        }
      },
      operationTime: Timestamp({ t: 1698312597, i: 1 })
    }

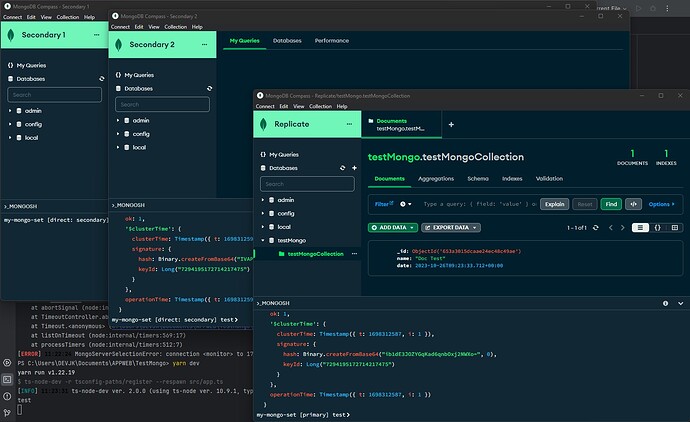

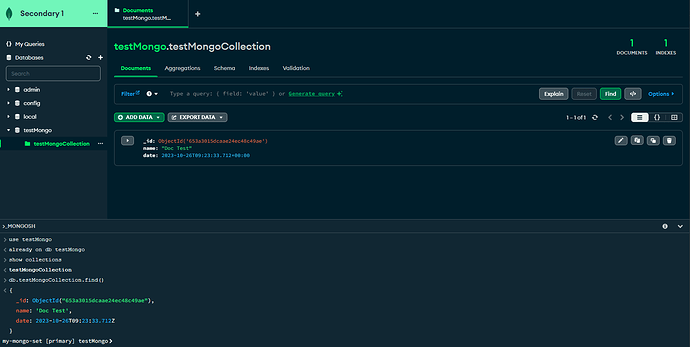

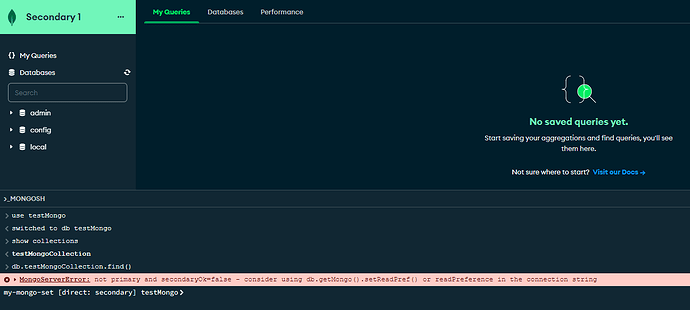

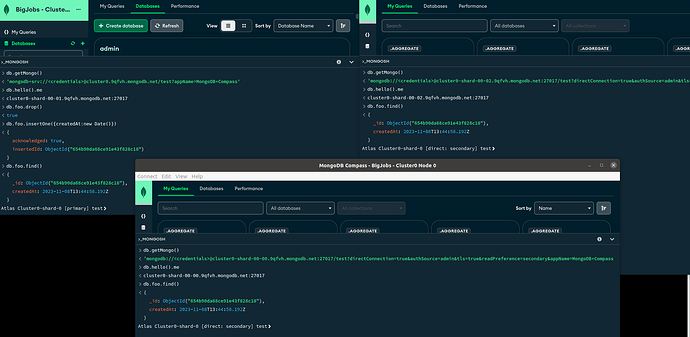

And these are screenshots from MongoDB Compass:

replicate

secondary 1

secondary 2

What did I forget?