Hi,

I have a collection whose documents contain a nested array, “categories”. To update a category, I do a bulk write with two operations:

- a pull, to remove the previous item (if any) from the “categories” array

- a push, to insert the updated category
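
As an illustration only, the two operations can be sketched as a raw `update` command with two ordered statements. The collection name `items`, the `id` field used to match a category, and both helper functions are assumptions made for this sketch, not the actual application code. `apply_locally` is a tiny in-memory stand-in for the server that only handles these two operator shapes; it is there to show why the order matters.

```python
from copy import deepcopy


def build_update_command(collection, doc_id, category):
    """Build a raw `update` command: statement 0 pulls, statement 1 pushes."""
    return {
        "update": collection,  # command name must be the first key
        "ordered": True,       # statements are expected to run in array order
        "updates": [
            {"q": {"_id": doc_id},
             "u": {"$pull": {"categories": {"id": category["id"]}}}},
            {"q": {"_id": doc_id},
             "u": {"$push": {"categories": category}}},
        ],
    }


def apply_locally(doc, statements):
    """In-memory simulation of the $pull/$push shapes above (not MongoDB itself)."""
    doc = deepcopy(doc)
    for stmt in statements:
        for op, spec in stmt["u"].items():
            for field, arg in spec.items():
                if op == "$pull":
                    # Drop every array element matching all key/value pairs in arg.
                    doc[field] = [
                        item for item in doc[field]
                        if not all(item.get(k) == v for k, v in arg.items())
                    ]
                elif op == "$push":
                    doc[field].append(arg)
    return doc


# Hypothetical document and updated category.
doc = {"_id": 1, "categories": [{"id": "a", "name": "old"}]}
cmd = build_update_command("items", 1, {"id": "a", "name": "new"})

# Intended order: pull, then push. The updated category survives.
expected = apply_locally(doc, cmd["updates"])

# Order observed in the oplog: push, then pull. The updated category is removed.
reversed_result = apply_locally(doc, list(reversed(cmd["updates"])))
```

With a driver such as PyMongo, a command document like `cmd` could be sent with `db.command(cmd)`. In the simulation, the intended order leaves one updated category in the array, while the reversed order leaves the array empty.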

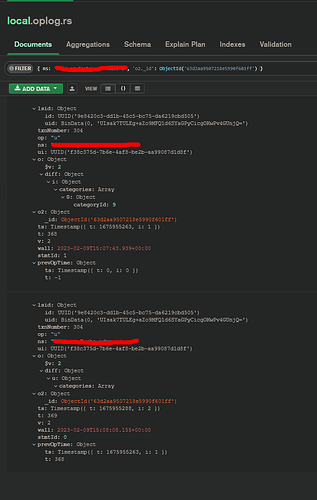

For some reason, the pull appears to have been performed after the push, even though the operations were sent in the correct order (see the oplog.rs screenshot).

If I’m reading it correctly, the lsid and txnNumber are the same for both entries, which means they belong to the same request (the bulk write). The stmtId values are in the correct order (0 for the pull, 1 for the push), but the timestamps show that the pull was performed after the push (25 seconds later!).

I don’t know whether I have misunderstood something or whether this is a bug. Any help understanding what’s going on would be appreciated.

Edit: the DB version is MongoDB 5.0.9 Community.