Hello Team,

We are currently running MongoDB Community 7.0.2 as a 3-member replica set.

Each member has 128 GB of RAM, 12 CPUs, and a 2 TB SSD.

We receive about 120M socket messages from devices, and we store them in one time series collection per day.

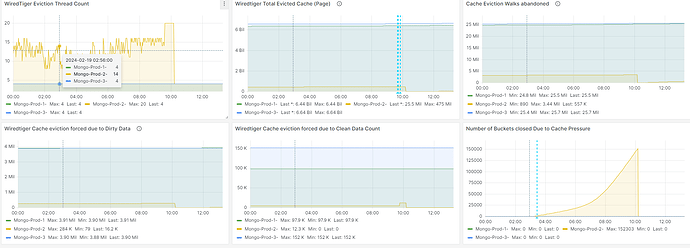

I started seeing cache pressure, with buckets being closed before they were properly filled. The NumberOfBucketsClosedDueToCachePressure metric kept increasing over time.
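To see how quickly bucket closures are growing, the counters can be sampled twice and compared. Below is a minimal Python sketch. It assumes the time series statistics appear under the `timeseries` key of the `collStats` output and that the closing counters are named with a `numBucketsClosedDueTo` prefix. I believe the cache-pressure one is reported as `numBucketsClosedDueToCachePressure` on 7.0, but check the field names against your own output. The URI, database, and collection names are hypothetical placeholders.

```python
import time
from typing import Dict, Mapping


def bucket_close_counters(coll_stats: Mapping) -> Dict[str, int]:
    """Extract the numBucketsClosedDueTo* counters from a collStats result.

    Assumes the time series statistics sit under the "timeseries" key of the
    collStats output; verify the exact field names on your own server.
    """
    ts_stats = coll_stats.get("timeseries", {})
    return {
        name: int(value)
        for name, value in ts_stats.items()
        if name.startswith("numBucketsClosedDueTo")
    }


def counter_deltas(before: Mapping[str, int], after: Mapping[str, int]) -> Dict[str, int]:
    """Return how much each counter grew between two snapshots (missing = 0)."""
    names = sorted(set(before) | set(after))
    return {name: after.get(name, 0) - before.get(name, 0) for name in names}


if __name__ == "__main__":
    # pymongo is only needed for the live check; the helpers above are pure Python.
    from pymongo import MongoClient

    client = MongoClient("mongodb://localhost:27017")  # hypothetical URI
    db = client["telemetry"]                            # hypothetical database name
    coll_name = "device_data_2024_02_19"                # hypothetical daily collection

    first = bucket_close_counters(db.command("collStats", coll_name))
    time.sleep(60)  # sampling window; adjust as needed
    second = bucket_close_counters(db.command("collStats", coll_name))
    for name, delta in counter_deltas(first, second).items():
        print(f"{name}: +{delta} in the last 60 s")
```

Filtering on the prefix, rather than hard-coding a single field, keeps the sketch usable even if the exact set of counters differs between server versions.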

So I restarted the mongod instance, which bought me some time to investigate.

I saw the following errors in the logs:

{"t":{"$date":"2024-02-19T20:13:39.063+03:00"},"s":"E", "c":"ASSERT", "id":23077, "ctx":"conn5442","msg":"Assertion","attr":{"error":"BSONObjectTooLarge: BSONObj size: 1634887031 (0x61726577) is invalid. Size must be between 0 and 16793600(16MB) First element: ","file":"src/mongo/bson/bsonobj.cpp","line":104}}

{"t":{"$date":"2024-02-19T20:15:47.396+03:00"},"s":"I", "c":"CONNPOOL", "id":22572, "ctx":"MirrorMaestro","msg":"Dropping all pooled connections","attr":{"hostAndPort":"REDACTED","error":"ConnectionPoolExpired: Pool for REDACTED has expired."}}

{"t":{"$date":"2024-02-19T20:18:59.646+03:00"},"s":"E", "c":"ASSERT", "id":23077, "ctx":"conn5494","msg":"Assertion","attr":{"error":"BSONObjectTooLarge: BSONObj size: 0 (0x0) is invalid. Size must be between 0 and 67108864(64MB) First element: EOO","file":"src/mongo/bson/bsonobj.cpp","line":104}}

{"t":{"$date":"2024-02-19T20:18:59.646+03:00"},"s":"E", "c":"ASSERT", "id":23077, "ctx":"conn5494","msg":"Assertion","attr":{"error":"BSONObjectTooLarge: BSONObj size: 1919250541 (0x7265706D) is invalid. Size must be between 0 and 67108864(64MB) First element: ","file":"src/mongo/bson/bsonobj.cpp","line":104}}

{"t":{"$date":"2024-02-19T20:18:59.646+03:00"},"s":"E", "c":"ASSERT", "id":23077, "ctx":"conn5494","msg":"Assertion","attr":{"error":"BSONObjectTooLarge: BSONObj size: 1701603681 (0x656C6961) is invalid. Size must be between 0 and 67108864(64MB) First element: ","file":"src/mongo/bson/bsonobj.cpp","line":104}}

{"t":{"$date":"2024-02-19T20:18:59.646+03:00"},"s":"E", "c":"ASSERT", "id":23077, "ctx":"conn5494","msg":"Assertion","attr":{"error":"BSONObjectTooLarge: BSONObj size: 1701603688 (0x656C6968) is invalid. Size must be between 0 and 16793600(16MB) First element: ","file":"src/mongo/bson/bsonobj.cpp","line":104}}

{"t":{"$date":"2024-02-19T20:21:35.145+03:00"},"s":"E", "c":"ASSERT", "id":23077, "ctx":"conn5505","msg":"Assertion","attr":{"error":"BSONObjectTooLarge: BSONObj size: 0 (0x0) is invalid. Size must be between 0 and 67108864(64MB) First element: EOO","file":"src/mongo/bson/bsonobj.cpp","line":104}}

{"t":{"$date":"2024-02-19T20:21:35.145+03:00"},"s":"E", "c":"ASSERT", "id":23077, "ctx":"conn5505","msg":"Assertion","attr":{"error":"BSONObjectTooLarge: BSONObj size: 1919252073 (0x72657669) is invalid. Size must be between 0 and 67108864(64MB) First element: ","file":"src/mongo/bson/bsonobj.cpp","line":104}}

{"t":{"$date":"2024-02-19T20:21:35.145+03:00"},"s":"E", "c":"ASSERT", "id":23077, "ctx":"conn5505","msg":"Assertion","attr":{"error":"BSONObjectTooLarge: BSONObj size: 1919252080 (0x72657670) is invalid. Size must be between 0 and 16793600(16MB) First element: ","file":"src/mongo/bson/bsonobj.cpp","line":104}}

{"t":{"$date":"2024-02-19T20:26:01.440+03:00"},"s":"I", "c":"CONNPOOL", "id":22572, "ctx":"MirrorMaestro","msg":"Dropping all pooled connections","attr":{"hostAndPort":"REDACTED","error":"ConnectionPoolExpired: Pool for REDACTED has expired."}}

{"t":{"$date":"2024-02-19T20:28:55.403+03:00"},"s":"E", "c":"ASSERT", "id":23077, "ctx":"conn5644","msg":"Assertion","attr":{"error":"BSONObjectTooLarge: BSONObj size: 0 (0x0) is invalid. Size must be between 0 and 67108864(64MB) First element: EOO","file":"src/mongo/bson/bsonobj.cpp","line":104}}

{"t":{"$date":"2024-02-19T20:28:55.403+03:00"},"s":"E", "c":"ASSERT", "id":23077, "ctx":"conn5644","msg":"Assertion","attr":{"error":"BSONObjectTooLarge: BSONObj size: 0 (0x0) is invalid. Size must be between 0 and 67108864(64MB) First element: ","file":"src/mongo/bson/bsonobj.cpp","line":104}}

{"t":{"$date":"2024-02-19T20:28:55.403+03:00"},"s":"E", "c":"ASSERT", "id":23077, "ctx":"conn5644","msg":"Assertion","attr":{"error":"BSONObjectTooLarge: BSONObj size: 1634887024 (0x61726570) is invalid. Size must be between 0 and 67108864(64MB) First element: ","file":"src/mongo/bson/bsonobj.cpp","line":104}}

{"t":{"$date":"2024-02-19T20:28:55.403+03:00"},"s":"E", "c":"ASSERT", "id":23077, "ctx":"conn5644","msg":"Assertion","attr":{"error":"BSONObjectTooLarge: BSONObj size: 1634887031 (0x61726577) is invalid. Size must be between 0 and 16793600(16MB) First element: ","file":"src/mongo/bson/bsonobj.cpp","line":104}}

{"t":{"$date":"2024-02-19T20:32:35.971+03:00"},"s":"I", "c":"CONNPOOL", "id":22572, "ctx":"MirrorMaestro","msg":"Dropping all pooled connections","attr":{"hostAndPort":"REDACTED","error":"ConnectionPoolExpired: Pool for REDACTED has expired."}}

{"t":{"$date":"2024-02-19T20:40:34.517+03:00"},"s":"E", "c":"ASSERT", "id":23077, "ctx":"conn5789","msg":"Assertion","attr":{"error":"BSONObjectTooLarge: BSONObj size: 0 (0x0) is invalid. Size must be between 0 and 67108864(64MB) First element:

These errors occur roughly every 15-20 minutes. However, I'm not inserting any documents anywhere near 16 MB. The servers aren't receiving any read queries yet, so the workload is currently write-only.
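One thing that may be worth noting is that the reported sizes don't look like real lengths. BSON stores a document's length as a little-endian 32-bit integer. If the nonzero sizes in these messages are turned back into their four bytes, they come out as printable ASCII. The small Python sketch below does this. The helper names are hypothetical and are not part of MongoDB.

```python
import json
import re
from typing import Optional, Tuple

# Matches e.g. "BSONObj size: 1634887031 (0x61726577) is invalid."
SIZE_RE = re.compile(r"BSONObj size: (\d+) \(0x[0-9A-Fa-f]+\)")


def size_as_ascii(size: int) -> str:
    """Show the 4 little-endian bytes behind a reported BSON size.

    BSON stores a document's length as a little-endian int32, so this is the
    raw byte sequence the server interpreted as a length. Printable ASCII is
    shown as-is, anything else as '.'.
    """
    raw = size.to_bytes(4, "little")
    return "".join(chr(b) if 32 <= b < 127 else "." for b in raw)


def decode_log_line(line: str) -> Optional[Tuple[int, str]]:
    """Return (size, ASCII reading) for a BSONObjectTooLarge log entry, else None."""
    entry = json.loads(line)
    error = entry.get("attr", {}).get("error", "")
    match = SIZE_RE.search(error)
    if match is None:
        return None
    size = int(match.group(1))
    return size, size_as_ascii(size)


if __name__ == "__main__":
    # Nonzero sizes copied from the assertion messages in the logs.
    for size in (1634887031, 1919250541, 1701603681, 1701603688,
                 1919252073, 1919252080, 1634887024):
        print(size, hex(size), repr(size_as_ascii(size)))
```

For the nonzero sizes in the logs, this gives `wera`, `mper`, `aile`, `hile`, `iver`, `pver`, and `pera`. These look like fragments of text rather than document sizes, which might mean a length is being read from the wrong position in a buffer. This is only an inference from the numbers, not something I have confirmed.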

My suspicion is that after the restart there are more open buckets eligible to receive data. When new data arrives, each measurement is matched against more (and fuller) buckets, which may be what causes this issue. However, I'm not a MongoDB expert, and I don't know how to investigate this further.

Regarding the cache pressure, the details are here. Please take a look in case it seems related.

Any help would be appreciated.