In an effort to improve repeatability, the MongoDB Performance team set out to reduce noise on several performance test suites run on EC2 instances. At the beginning of the project, it was unclear whether our goal of running repeatable performance tests in a public cloud was achievable. Instead of debating the issue based on assumptions and beliefs, we decided to measure noise itself and see if we could make configuration changes to minimize it.

After thinking about our assumptions and the experiment setup, we began by recording data about our current setup and found no evidence of particularly good or bad EC2 instances. In the next step, we investigated IO and found that EBS instances are the stable option for us. With disk behavior now very stable, this third and final experiment turns to tuning CPU-related knobs to minimize noise from that part of the system.

Investigate CPU Options

We had already built up knowledge around fine-tuning CPU options when setting up another class of performance benchmarks (single node benchmarks). That work had shown us that CPU options could have a large impact on performance. It also left us familiar with a number of knobs and options we could adjust.

We added some CPU-specific tests to measure CPU variability. These tests allow us to see whether CPU performance is noisy, independently of whether that noise makes MongoDB performance noisy. For our previous work on CPU options, we had written some simple tests in our C++ harness that would, for example:

- multiply numbers in a loop (CPU bound)

- sleep 1 or 10 ms in a loop

- do nothing (no-op) in the basic test loop
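To make the three kinds of loop concrete, here is a minimal sketch in Python. It is not the C++ harness described here; the function names, iteration counts, and the arithmetic inside the CPU-bound loop are all assumptions chosen for illustration.

```python
import time


def time_loop(body, iterations):
    """Return elapsed wall-clock seconds for calling body() `iterations` times."""
    start = time.perf_counter()
    for _ in range(iterations):
        body()
    return time.perf_counter() - start


def cpu_bound():
    # Multiply numbers in a tight inner loop (CPU bound).
    x = 1
    for i in range(1, 1000):
        x = (x * i) % 1000003
    return x


def sleep_1ms():
    # Sleep for 1 ms; the thread is waiting, not computing.
    time.sleep(0.001)


def no_op():
    # Do nothing, so only the overhead of the test loop itself is measured.
    pass


def run_microbenchmarks(iterations=100):
    """Time each micro-benchmark once and return the durations by name."""
    return {
        "cpuloop": time_loop(cpu_bound, iterations),
        "sleep_1ms": time_loop(sleep_1ms, iterations),
        "nop": time_loop(no_op, iterations),
    }


if __name__ == "__main__":
    for name, seconds in run_microbenchmarks().items():
        print(f"{name}: {seconds:.6f} s")
```

Running `run_microbenchmarks()` repeatedly and comparing the durations for each name gives a rough picture of how much the CPU itself varies, separately from any database work.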

We added these tests to our System Performance project. We were able to run them both on the client alone and across the network against a server.

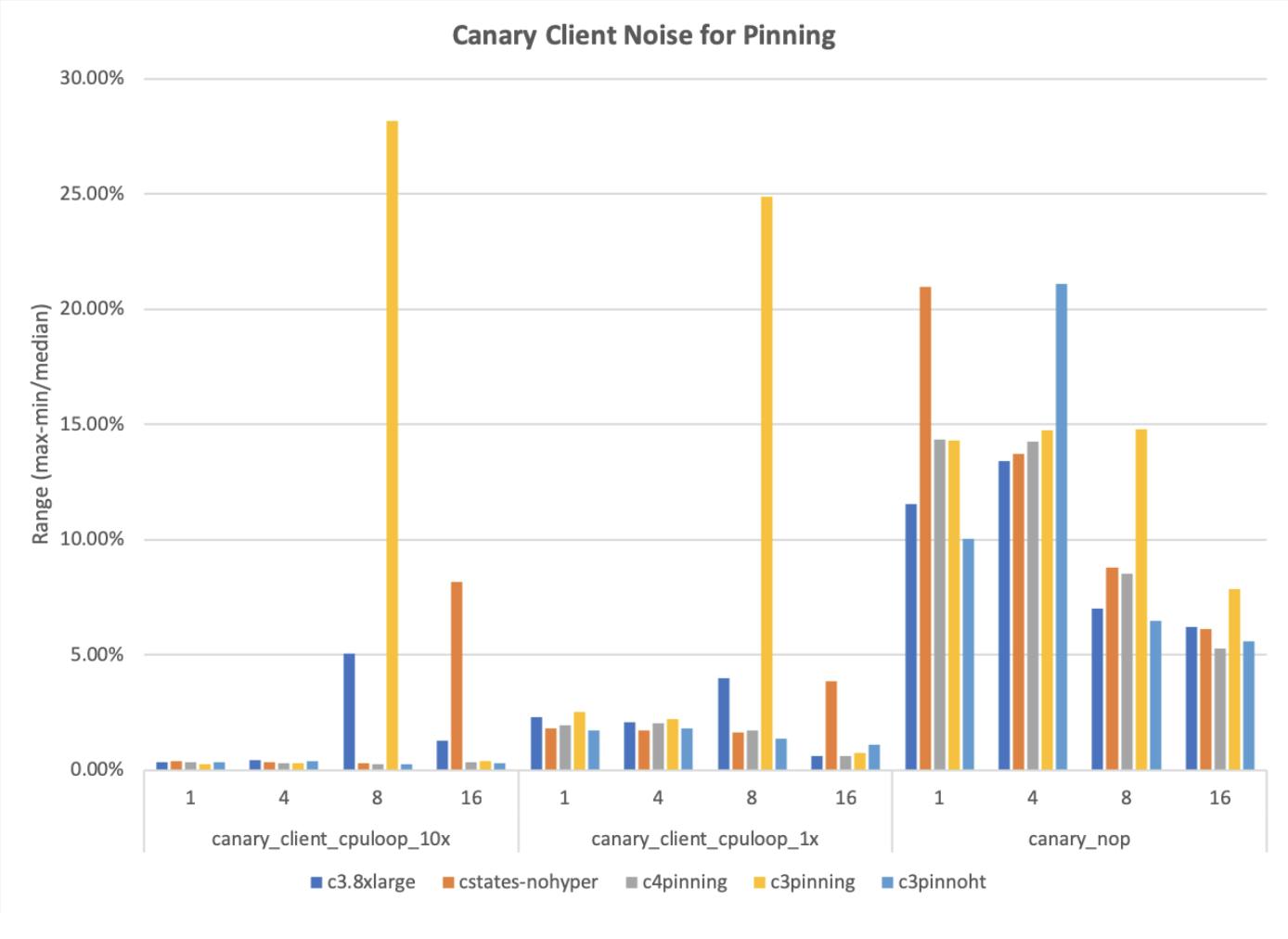

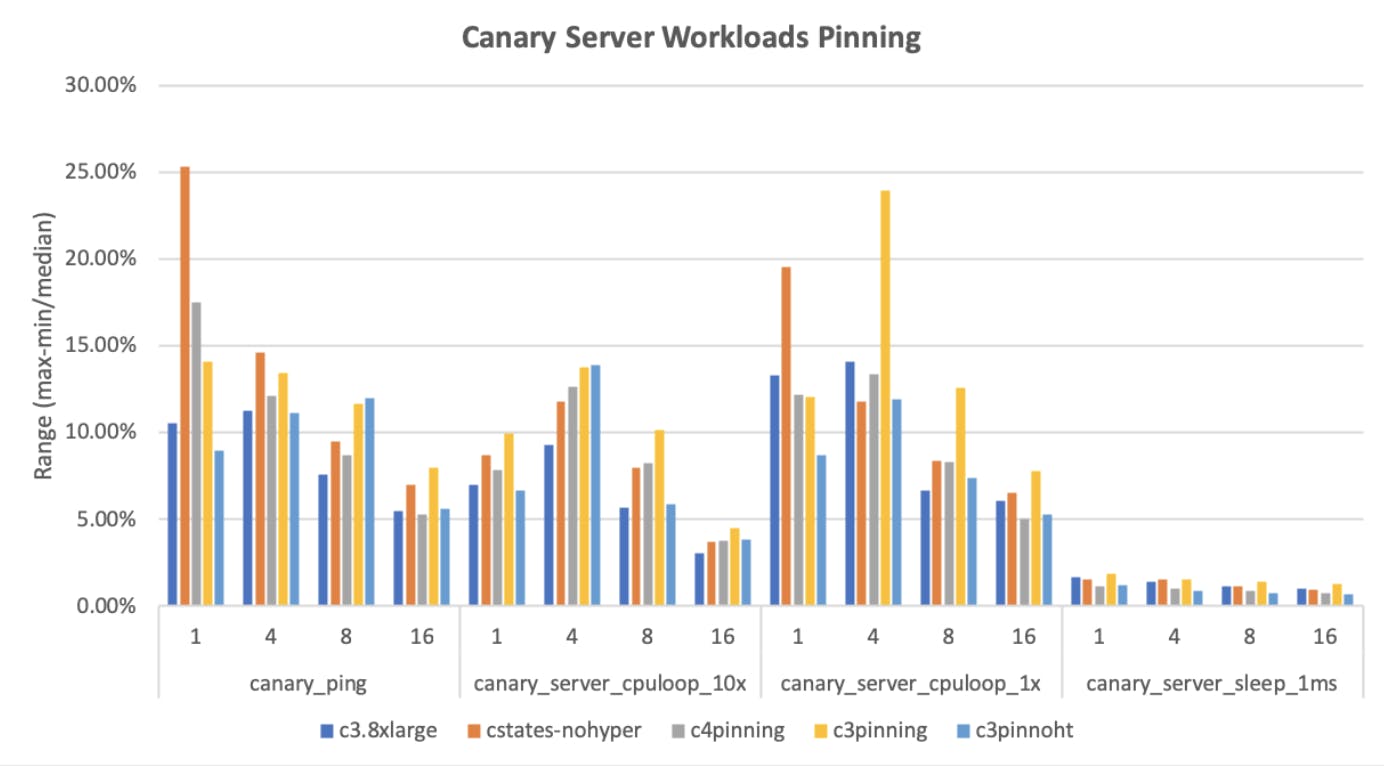

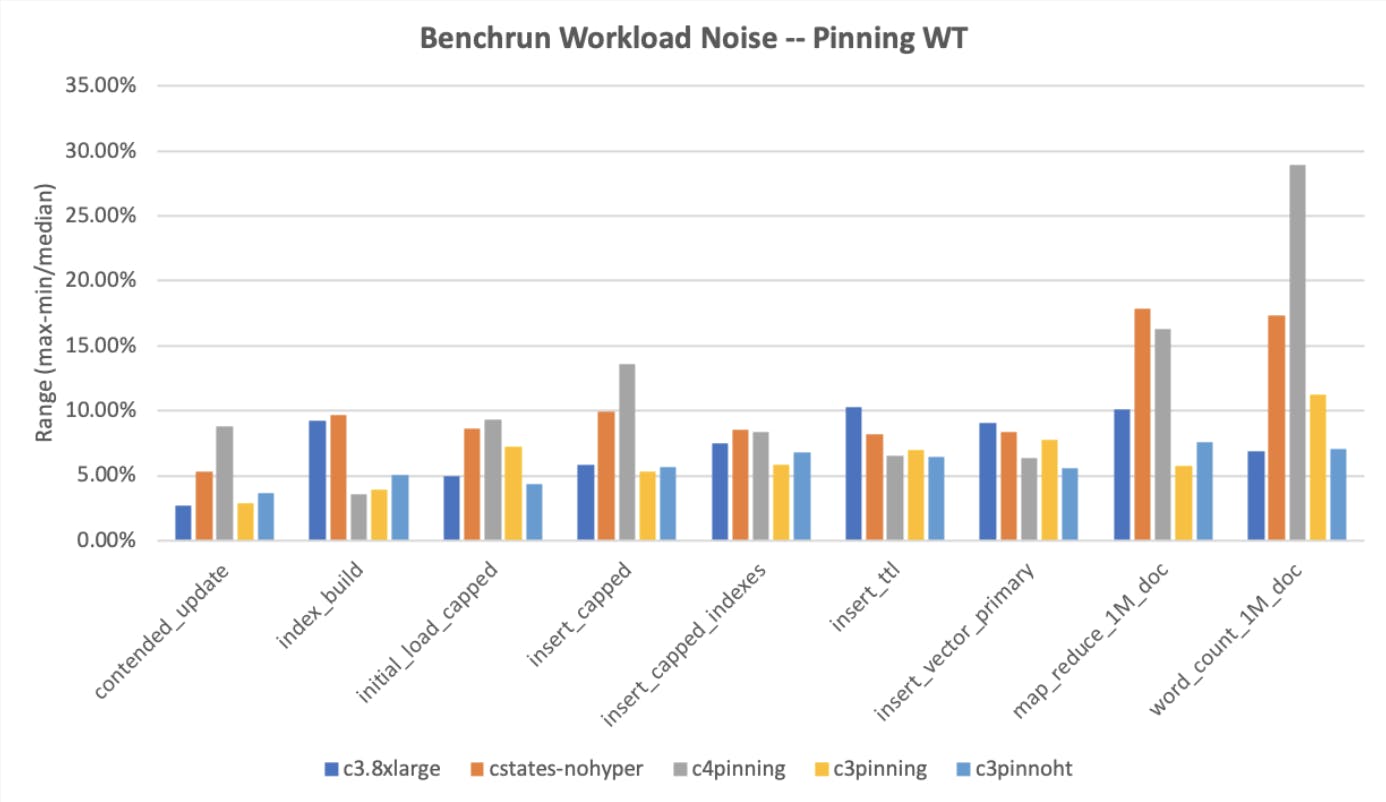

We ran our tests 5x5 times, changing one configuration at a time, and compared the results. The first two graphs contain results for the CPU-focused benchmarks; the third contains the MongoDB-focused benchmarks. In all of these graphs, we plot the "noise" metric as a percentage, computed as (max-min)/median. Lower is better.
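As a small illustration of the noise metric, the following sketch computes (max-min)/median as a percentage for one set of repeated results. The function name and the sample numbers are made up for the example and are not taken from our measurements.

```python
from statistics import median


def noise_percent(results):
    """Noise as a percentage: 100 * (max - min) / median. Lower is better."""
    if not results:
        raise ValueError("need at least one result")
    mid = median(results)
    if mid == 0:
        raise ValueError("median is zero; noise is undefined")
    return 100.0 * (max(results) - min(results)) / mid


# Hypothetical throughput numbers from five repeated runs of one test.
throughputs = [950.0, 1000.0, 1000.0, 1000.0, 1050.0]
print(noise_percent(throughputs))  # range 100 over median 1000 -> 10.0
```

Because the metric uses the full range rather than a standard deviation, a single outlier run is enough to raise it, which fits a goal of catching every unstable run.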

We start with our focused CPU tests, first on the client only, and then connecting to the server. We’ve omitted the sleep tests from the client graphs for readability, as their noise was essentially 0.

The nop test is the noisiest test all around, which is reasonable because it’s doing nothing in the inner loop. The cpu-bound loop is more interesting. It shows low noise in many cases but has occasional spikes in every configuration except the c3.8xlarge with all the controls on (pinned to one socket, hyperthreading off, no frequency scaling, idle=poll).

When we connect to an actual server, the tests become more realistic, but also introduce the network as a possible source of noise. In the cases in which we multiply numbers in a loop (cpuloop) or sleep in a loop (sleep), the final c3.8xlarge with all controls enabled is consistently among the lowest noise and doesn’t do badly on the ping case (no-op on the server). Do those results hold when we run our actual tests?

Yes, they do. The right-most blue bar is consistently around 5%, which is a great result! Perhaps unsurprisingly, this is the configuration where we used all of the tuning options: idle=poll, hyperthreading disabled, and only a single socket in use.

We continued to compare c4 and c3 instances against each other for these tests. We expected that the c4, being a newer architecture with more tuning options, would achieve better results. This was not the case. Instead, the c3.8xlarge continued to have the smallest range of noise. Another assumption that was wrong!

We expected that write-heavy tests, such as batched inserts, would mostly benefit from the more stable IOPS on our new EBS disks, and that the CPU tuning would mostly affect CPU-bound benchmarks such as map-reduce or index build. Turns out this was wrong too: for our write-heavy tests, noise did not in fact predominantly come from disk.

The tuning available for CPUs has a huge effect on threads that are waiting or sleeping. The performance of threads that are actually running at full speed is less affected, because in those cases the CPU runs at full speed as well. Therefore, IO-heavy tests, whose threads spend much of their time waiting, are affected a lot by CPU tuning!

Disabling CPU options in production

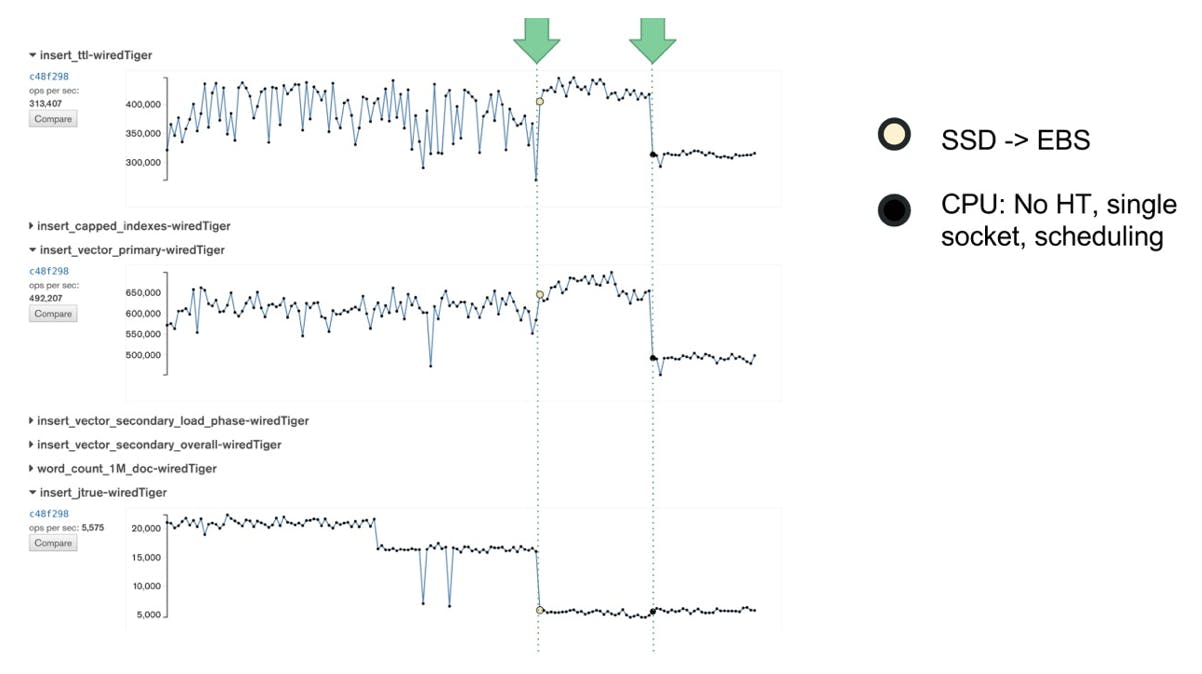

Deploying these configurations into production made insert tests even more stable from day to day:

Note that the absolute performance of some tests actually dropped. The number of available physical CPUs was cut in half because we use only a single socket, and disabling hyperthreading causes a further drop in performance, though not quite a full half, of course.
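The effect on CPU count can be sketched with a toy topology. The layout below (2 sockets, 8 cores per socket, 2 hardware threads per core) and the CPU numbering are assumptions for illustration only, not a description of how any particular instance type enumerates its CPUs.

```python
def example_topology(sockets=2, cores_per_socket=8, threads_per_core=2):
    """Build a hypothetical list of (cpu_id, socket_id, core_id) tuples."""
    topology = []
    cpu_id = 0
    for _thread in range(threads_per_core):
        for socket_id in range(sockets):
            for core_id in range(cores_per_socket):
                topology.append((cpu_id, socket_id, core_id))
                cpu_id += 1
    return topology


def select_cpus(topology, socket=0):
    """Pick one logical CPU per physical core on a single socket."""
    chosen = {}
    for cpu_id, socket_id, core_id in sorted(topology):
        # Keep only the first (lowest-numbered) hardware thread of each core.
        if socket_id == socket and core_id not in chosen:
            chosen[core_id] = cpu_id
    return sorted(chosen.values())


topology = example_topology()
on_one_socket = [cpu for cpu, socket_id, _core in topology if socket_id == 0]
selected = select_cpus(topology)
print(len(topology), len(on_one_socket), len(selected))  # 32 16 8
```

In this toy layout, restricting to one socket halves the logical CPUs from 32 to 16, and keeping one thread per core halves them again to 8. The performance lost in the second step is smaller than half, since two hyperthreads share a single physical core. On Linux, a list like `selected` could be applied to a process with `os.sched_setaffinity`, though that is only one possible way to pin work to a socket.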

Conclusion

Drawing upon prior knowledge, we decided to fine-tune CPU options. We had previously assumed that IO-heavy tests would have a lot of noise coming from disk and that CPU tuning would mostly affect CPU-bound tests. As it turns out, the tuning available for CPUs actually has a huge effect on threads that are waiting or sleeping and therefore has a huge effect on IO-heavy tests. Through CPU tuning, we achieved very repeatable results. The overall measured performance in the tests decreases, but this is less important to us: we care about stable, repeatable results more than maximum performance.

This is the third and last of three bigger experiments we performed in our quest to reduce variability in performance tests on EC2 instances. You can read more about the top level setup and results as well as how we found out that EC2 instances are neither good nor bad and that EBS instances are the stable option.