Hello

Our app is an IoT Xamarin.Forms app that, by design, relies on a notification mechanism to keep the client UI updated in real time.

To do so, we rely heavily on Realm subscription callbacks. Our implementation works as follows:

- We have a singleton that returns a single Realm instance, which is used throughout the whole application.
- On that instance we subscribe to multiple notification events, so that when a change occurs we can update the UI accordingly.
- When a subscription is no longer needed, it is correctly disposed.

The callback signature is as follows:

`void Callback(IRealmCollection sender, ChangeSet changes, Exception error)`

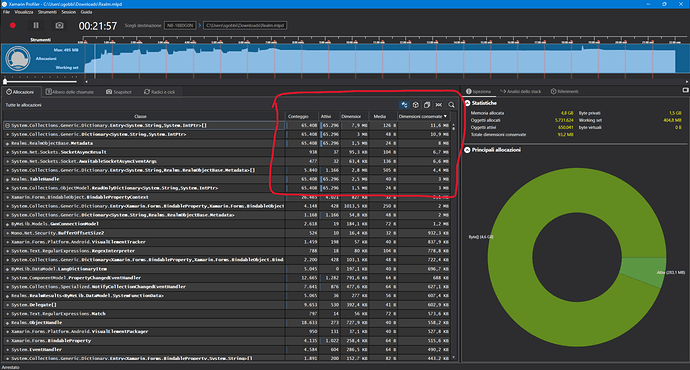

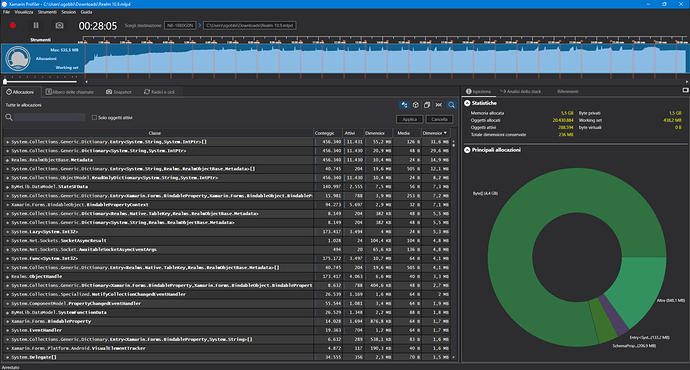

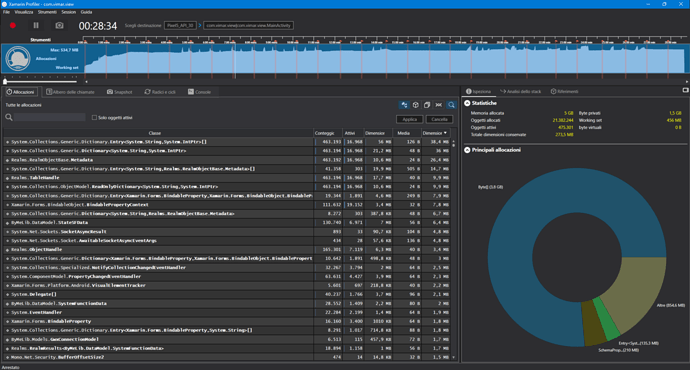

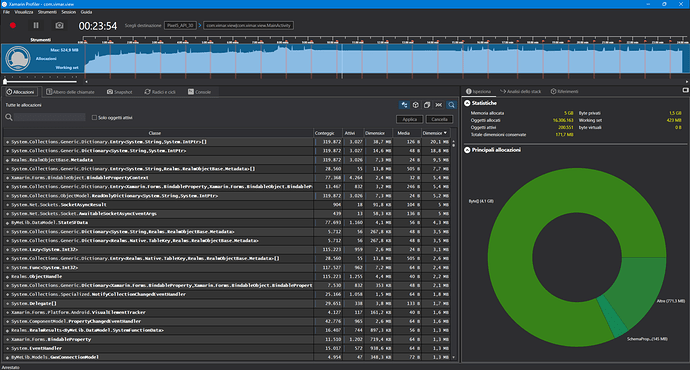

Recently, some of our clients have reported memory leaks. To better assess the issue we used the Xamarin Profiler, a dedicated tool for in-depth memory-usage analysis. After some tests, we determined that the RealmObject instances in the collection returned by the query we subscribed to (the collection passed to each notification callback as the `sender` parameter) do not seem to be released, so they are not reclaimed by the following garbage collection cycle, even though `sender` should go out of scope as soon as the callback returns.

If we dispose of the Realm instance inside our singleton class, everything seems to work fine and the objects are correctly released. Unfortunately, this approach does not work for us because, as mentioned above, our app needs a single Realm instance to keep the notification process seamless.

Could you please provide some feedback on this issue, or let us know whether a similar situation has been reported before?

Best regards.