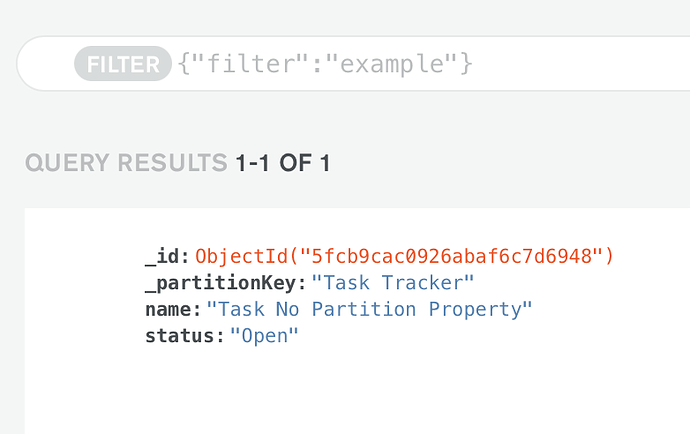

OK, I have removed the system tables from the schema and now get past schema validation. The schema now appears in MongoDB Atlas, but there appears to be no data there.

Opening the realm and querying it from the Node.js client returns data.

Any idea why the data is not visible in Atlas?

Script below:

const Realm = require('realm');

// Copy the object properties

// If it is an object then set the property to null - we update this in second cycle

// If it is a list then set the list to empty - we update these in second cycle

var copyObject = function(obj, objSchema, targetRealm) {

const copy = {};

for (var key in objSchema.properties) {

const prop = objSchema.properties[key];

if (!prop.hasOwnProperty('objectType')) {

copy[key] = obj[key];

}

else if (prop['type'] == "list") {

copy[key] = [];

}

else {

copy[key] = null;

}

}

// Add this object to the target realm

targetRealm.create(objSchema.name, copy);

}

var getMatchingObjectInOtherRealm = function(sourceObj, source_realm, target_realm, class_name) {

const allObjects = source_realm.objects(class_name);

const ndx = allObjects.indexOf(sourceObj);

// Get object on same position in target realm

return target_realm.objects(class_name)[ndx];

}

var addLinksToObject = function(sourceObj, targetObj, objSchema, source_realm, target_realm) {

for (var key in objSchema.properties) {

const prop = objSchema.properties[key];

if (prop.hasOwnProperty('objectType')) {

if (prop['type'] == "list") {

var targetList = targetObj[key];

sourceObj[key].forEach((linkedObj) => {

const obj = getMatchingObjectInOtherRealm(linkedObj, source_realm, target_realm, prop.objectType);

targetList.push(obj);

});

}

else {

// Find the position of the linked object

const linkedObj = sourceObj[key];

if (linkedObj === null) {

continue;

}

// Set link to object on same position in target realm

targetObj[key] = getMatchingObjectInOtherRealm(linkedObj, source_realm, target_realm, prop.objectType);

}

}

}

}

var copyRealm = async function(app, local_realm_path) {

// Open the local realm

const source_realm = new Realm({path: local_realm_path});

const source_realm_schema = source_realm.schema;

// Create the new realm (with same schema as the source)

var target_realm_schema = JSON.parse(JSON.stringify(source_realm_schema));

console.log("target: ", target_realm_schema)

// Remove the system classes by name. filter() is a no-op for a missing class,

// whereas splice(findIndex(...), 1) drops the last class when findIndex returns -1

const systemClasses = ['BindingObject', '__Class', '__Permission', '__Realm', '__Role', '__User'];

target_realm_schema = target_realm_schema.filter(v => !systemClasses.includes(v.name));

console.log("target: ", target_realm_schema)

const target_realm = await Realm.open({

schema: target_realm_schema,

sync: {

user: app.currentUser,

partitionValue: "default",

},

});

target_realm.write(() => {

// Copy all objects but ignore links for now

// Iterate the target schema: the removed system classes do not exist in the target realm

target_realm_schema.forEach((objSchema) => {

console.log("copying objects:", objSchema['name']);

const allObjects = source_realm.objects(objSchema['name']);

allObjects.forEach((obj) => {

copyObject(obj, objSchema, target_realm)

});

});

// Do a second pass to add links

target_realm_schema.forEach((objSchema) => {

console.log("updating links in:", objSchema['name']);

const allSourceObjects = source_realm.objects(objSchema['name']);

const allTargetObjects = target_realm.objects(objSchema['name']);

for (var i = 0; i < allSourceObjects.length; ++i) {

const sourceObject = allSourceObjects[i];

const targetObject = allTargetObjects[i];

addLinksToObject(sourceObject, targetObject, objSchema, source_realm, target_realm);

}

});

});

}

async function run() {

try {

const app = new Realm.App({ id: appId });

const credentials = Realm.Credentials.emailPassword(username, password);

await app.logIn(Realm.Credentials.anonymous());

await copyRealm(app, source_realm_path);

} catch(error) {

console.log("Error: ", error)

}

}

run().catch(err => {

console.error("Failed to open realm:", err)

});
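
For reference, the two-pass approach the script relies on (copy the objects first with links left empty, then resolve each link by position) can be sketched on plain JavaScript objects without Realm. This is only an illustration under assumptions: `copyWithLinks`, the `next` property and the sample data are made up for the example and are not part of the Realm API.

```javascript
// Hypothetical helper: copies an array of objects that link to each other
// through a single property, using the same two passes as the script above.
function copyWithLinks(sourceObjects, linkKey) {
  // Pass 1: copy every property, but leave the link empty for now.
  const targets = sourceObjects.map((obj) => ({ ...obj, [linkKey]: null }));

  // Pass 2: point each copy at the copy sitting at the same position
  // as the linked source object.
  sourceObjects.forEach((obj, i) => {
    const linked = obj[linkKey];
    if (linked === null) {
      return; // nothing to link
    }
    const ndx = sourceObjects.indexOf(linked);
    targets[i][linkKey] = targets[ndx];
  });

  return targets;
}

// Made-up sample data: nodeA links to nodeB, nodeB links to nothing.
const nodeB = { name: 'b', next: null };
const nodeA = { name: 'a', next: nodeB };

const copies = copyWithLinks([nodeA, nodeB], 'next');
console.log(copies[0].next === copies[1]);
```

Matching by position only holds while both collections keep the same order, which is the same assumption `getMatchingObjectInOtherRealm` makes.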