Hello,

I have more than 2k CSV files, I want to import them into a collection

the CSV files structure is the same (column names and number of column)

I am using MongoDB in My Windows 10 PC

Thanks

Hello,

I have more than 2k CSV files, I want to import them into a collection

the CSV files structure is the same (column names and number of column)

I am using MongoDB in My Windows 10 PC

Thanks

Hi @Osama_Al-Tahish ,

You can write a script with a loop over the files and run mongoimport in each iteration.

Thanks,

Rafael,

I tried many scripts but without results

can you help me to do that currectlly

My System: Windows 10 & MongoDB 4.4.4

Thanks @Rafael_Green

Hi,

First install the MongoDB database tools from here.

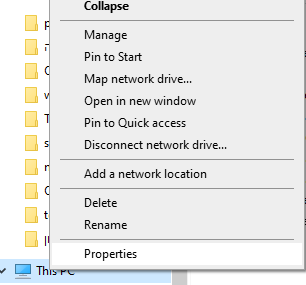

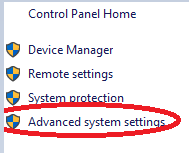

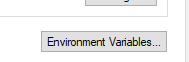

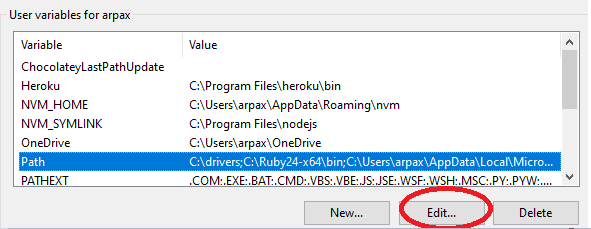

Then add “C:\Program Files\MongoDB\Tools\100\bin” to your Path environment variable like this:

3.

4.

5.

![]()

6.

![]()

7. click save

Open Windows PowerShell and run these commands:

cd myCsvFilesDirectory

$files = Get-ChildItem .

foreach ($f in $files) {

if ($f -Like "*.csv") {

mongoimport -d mydb -c mycollection --type csv --file $f --headerline

}

}

Goodluck,

Rafael,

in case you run into problems please add the error message and what you tried… @Rafael_Green’s script should do the job. Please note that you may get in to trouble when you have an unique index on the target collection.

Regards,

Michael

Thanks, It’s Working But I Have a Problem With some of my CSV Files I will solve it and get back to you

The Script Work Perfectly

I got this error with some files:

Failed: read error on entry #2578: line 2579, column 40: extraneous " in field

I think its about escape internal double-quote

Hi @Rafael_Green,

When I tried the above command on my data but all records were not inserted in DB. I have 1800+ CSV files and this file contains millions of records.

Can you please guide me with a solution?

Here is the screenshot

Thanks in advance

You have read errors on your CSV files.

The error message indicates some extra double quotes on some fields. Make sure you sanitize your input files by correcting the errors. You have the line and column numbers of each error as part of the error message. You will have to edit each file and correct the errors.

thanks for the solution but there is no other solution to upload all this data in DB. there are 1800+ CSV files and more than 23 CR of data checking all files and errors is a very untidy job.

Also, in the error report, I don’t see the file name.

The first idea that comes to me naturally in situations like this is to update the script to print the file names.

This 1800+ file is just a sample file. Actually, I have to import more than 20000 files (csv and xls file of more than 150 GB) into the database. Can you suggest anything?

How I can do this?