Hi @michael_hoeller,

OK, so I tailored a solution. I have to say it's not super straightforward, but it's creative and mind-opening for various things.

The key ability I used is that Realm applications can be linked to Atlas Data Lakes.

Atlas Data Lakes can read from and write to both MongoDB clusters and S3 buckets. There is a limitation, though: the Data Lake linked to a Realm app must be in the same project as the app, and an Atlas cluster linked in that Data Lake must be in the same project as well.

However, S3 buckets can be shared across Data Lakes.

In my configuration I have the following topology:

PROD

- Project “prod” with id xxxxxxxxxx:

- Cluster “prod” with database sample_mflix and collection movies.

- A Data Lake mapped to the prod cluster as an Atlas store.

- S3 bucket store “atlas-datalakepavel” mapped to my S3 storage.

My Data Lake configuration on prod maps the Atlas cluster so that our Realm app can read from it and use the $out stage to write to S3:

    {
      "databases": [
        {
          "name": "mydb",
          "collections": [
            {
              "name": "movies",
              "dataSources": [
                {
                  "collection": "movies",
                  "database": "sample_mflix",
                  "storeName": "atlasClusterStore"
                }
              ]
            }
          ]
        }
      ],
      "stores": [
        {
          "provider": "s3",
          "bucket": "atlas-datalakepavel",
          "includeTags": false,
          "name": "s3store",
          "region": "us-east-1"
        },
        {
          "provider": "atlas",
          "clusterName": "prod",
          "name": "atlasClusterStore",
          "projectId": "xxxxxxxx"
        }
      ]
    }

My webhook in the prod project's Realm app is linked to this Data Lake and can therefore write a sample of 10 movies to my S3 store:

    // This function is the webhook's request handler.
    exports = async function(payload, response) {
      // "data-lake" is the linked Data Lake service; mydb.movies maps to the prod cluster.
      var movies = context.services.get("data-lake").db("mydb").collection("movies");

      // Sample 10 movies and write them to the S3 bucket as JSON.
      var res = await movies.aggregate([
        { "$sample": { "size": 10 } },
        {
          "$out": {
            "s3": {
              "bucket": "atlas-datalakepavel",
              "region": "us-east-1",
              "filename": "10movies/movies",
              "format": { "name": "json" }
            }
          }
        }
      ]).toArray();
    };
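If the same export is needed for other buckets or sample sizes, the pipeline could be assembled by a small helper. This is only a sketch: `buildS3ExportPipeline` and its parameter names are hypothetical, and it uses only the `$out` fields that appear in the webhook above.

```javascript
// Hypothetical helper (not part of Realm or Atlas): builds the same
// $sample + $out pipeline as the webhook above from a few parameters.
function buildS3ExportPipeline({ bucket, region, filename, sampleSize }) {
  if (!bucket || !region || !filename) {
    throw new Error("bucket, region and filename are required");
  }
  if (!Number.isInteger(sampleSize) || sampleSize <= 0) {
    throw new Error("sampleSize must be a positive integer");
  }
  return [
    { $sample: { size: sampleSize } },
    { $out: { s3: { bucket, region, filename, format: { name: "json" } } } },
  ];
}

// Same values as in the prod webhook.
const pipeline = buildS3ExportPipeline({
  bucket: "atlas-datalakepavel",
  region: "us-east-1",
  filename: "10movies/movies",
  sampleSize: 10,
});
console.log(JSON.stringify(pipeline, null, 2));
```

Inside the webhook, the result would be passed to `movies.aggregate(pipeline).toArray()` exactly as before.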

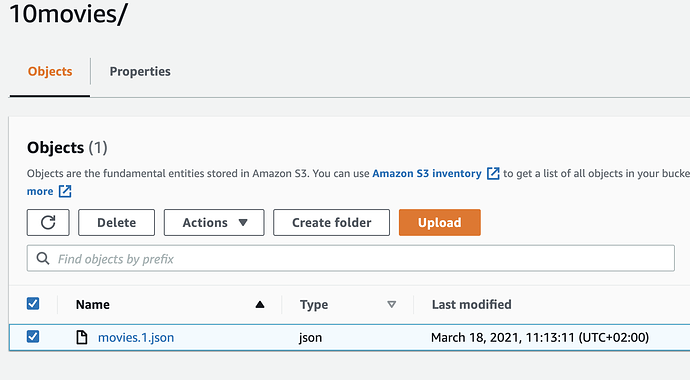

Once I execute it via a curl or a trigger or any http hook it creates my source files:

DEV

- Project “dev” with id yyyyyyyyyyy:

- Cluster “dev” with database “dev” and collection movies.

- A Data Lake mapped to the dev cluster as an Atlas store.

- S3 bucket store “atlas-datalakepavel” mapped to my S3 storage.

Now I map the dev Data Lake so that the exported files in S3 appear as a collection:

    {
      "databases": [
        {
          "name": "mydb",
          "collections": [
            {
              "name": "devMovies",
              "dataSources": [
                {
                  "path": "10movies/*",
                  "storeName": "s3store"
                }
              ]
            }
          ]
        }
      ],
      "stores": [
        {
          "provider": "s3",
          "bucket": "atlas-datalakepavel",
          "includeTags": false,
          "name": "s3store",
          "region": "us-east-1"
        },
        {
          "provider": "atlas",
          "clusterName": "dev",
          "name": "atlasClusterStore",
          "projectId": "yyyyyyy"
        }
      ]
    }
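Every `dataSources` entry refers to a store by its `name`, so a typo in `storeName` is an easy mistake to make when editing these configurations by hand. A quick local check could look like the sketch below. `findUnknownStoreNames` is a hypothetical helper written in plain JavaScript, not an Atlas API, and it assumes only the config shape shown in this post.

```javascript
// Hypothetical local check: lists every dataSource whose storeName
// does not match the name of any entry in "stores".
function findUnknownStoreNames(config) {
  const known = new Set((config.stores || []).map((store) => store.name));
  const unknown = [];
  for (const db of config.databases || []) {
    for (const coll of db.collections || []) {
      for (const src of coll.dataSources || []) {
        if (!known.has(src.storeName)) {
          unknown.push(`${db.name}.${coll.name} -> ${src.storeName}`);
        }
      }
    }
  }
  return unknown;
}

// Trimmed copy of the dev configuration from this post.
const devConfig = {
  databases: [
    {
      name: "mydb",
      collections: [
        { name: "devMovies", dataSources: [{ path: "10movies/*", storeName: "s3store" }] },
      ],
    },
  ],
  stores: [
    { provider: "s3", bucket: "atlas-datalakepavel", name: "s3store", region: "us-east-1" },
    { provider: "atlas", clusterName: "dev", name: "atlasClusterStore", projectId: "yyyyyyy" },
  ],
};

console.log(findUnknownStoreNames(devConfig)); // prints []
```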

Now I can do the opposite, an import, via a webhook in the dev Realm app connected to this Data Lake:

    // This function is the webhook's request handler.
    exports = async function(payload, response) {
      // mydb.devMovies maps to the files under 10movies/ in the S3 store.
      var movies = context.services.get("data-lake").db("mydb").collection("devMovies");

      // Read the exported documents and write them into the dev cluster.
      var res = await movies.aggregate([
        {
          "$out": {
            "atlas": {
              "projectId": "yyyyyyyyyy",
              "clusterName": "dev",
              "db": "dev",
              "coll": "movies"
            }
          }
        }
      ]).toArray();
    };

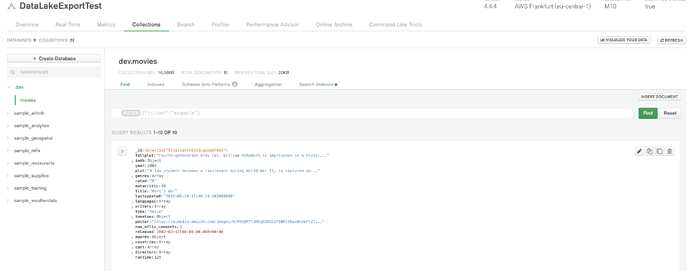

Once I do that I get the needed data in my dev:

Here you can be as flexible as you want and transfer data with custom logic, lookups, and many other options.
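As one illustration of that flexibility, extra stages could be placed before `$out` so that only part of the exported data reaches dev. The sketch below is an assumption, not something I ran in this setup: `buildFilteredImportPipeline` is a hypothetical helper, and the `year`, `title` and `genres` fields are assumed to exist because the files were exported from sample_mflix.movies.

```javascript
// Hypothetical helper: filters and trims the documents before writing
// them to the dev cluster with the same $out.atlas target as above.
function buildFilteredImportPipeline({ projectId, clusterName, db, coll, minYear }) {
  return [
    // Assumed field: keep only movies released in or after minYear.
    { $match: { year: { $gte: minYear } } },
    // Assumed fields: copy only a few of them to dev.
    { $project: { title: 1, year: 1, genres: 1 } },
    { $out: { atlas: { projectId, clusterName, db, coll } } },
  ];
}

const importPipeline = buildFilteredImportPipeline({
  projectId: "yyyyyyyyyy", // placeholder, as in the webhook above
  clusterName: "dev",
  db: "dev",
  coll: "movies",
  minYear: 2000,
});
console.log(JSON.stringify(importPipeline, null, 2));
```

In the dev webhook this would replace the single-stage pipeline, for example `movies.aggregate(importPipeline).toArray()`.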

I know this is a lot to digest but I hope it might help.

Thanks,

Pavel