It would seem there are extreme nuances in this product, Realm in particular.

It’s been suggested that I’m doing something unusual, but I don’t know if that’s the case.

I will tell you the struggle is real though.

What I feel should be simple isn’t, and it’s frustrating not knowing what principle I’m missing. I’m sure I’m missing one.

All I want is a collection (Sales) with a couple of nested objects, one of them in a list. I gave up on Sales as that’s a more involved collection. How about Products? It has only two embedded objects. This is a test harness that just reads the realm.

```csharp
public class Product : RealmObject
{
    IList<Price> _priceList;

    public Product()
    {
        _id = ObjectId.GenerateNewId();
        _priceList = new List<Price>();
    }

    [BsonId]
    [PrimaryKey]
    [MapTo("_id")]
    public ObjectId _id { get; set; }

    [MapTo("_partition")]
    [BsonElement("_partition")]
    public string _partition { get; set; } = "6310fa126afd4bc77f5517e4";

    [MapTo("ref_id")]
    [BsonElement("ref_id")]
    public int RefId { get; set; }

    [MapTo("descript")]
    [BsonElement("descript")]
    public string Descript { get; set; }

    /* [MapTo("prod_type")]
    [BsonElement("prod_type")]
    public ProductType ProdType { get; set; }
    */

    [MapTo("price")]
    [BsonElement("price")]
    public double Price { get; set; }

    [MapTo("price_list")]
    [BsonElement("price_list")]
    public Price[]? PriceList
    {
        get => _priceList.ToArray();
        set
        {
            _priceList.Clear();
            foreach (var price in value)
            {
                _priceList.Add(price);
            }
        }
    }

    [MapTo("active")]
    [BsonElement("active")]
    public bool Active { get; set; }
}

public class ProductType : EmbeddedObject
{
    [MapTo("prod_type_id")]
    [BsonElement("prod_type_id")]
    public int ProdTypeId { get; set; }

    [MapTo("type_descript")]
    [BsonElement("type_descript")]
    public string? TypeDescript { get; set; }
}

public class Price : EmbeddedObject
{
    [MapTo("primary")]
    [BsonElement("primary")]
    public bool Primary { get; set; }

    [MapTo("charge")]
    [BsonElement("charge")]
    public double? Charge { get; set; }
}
```

I use these same models to load the data (pulling from an existing DB) in another console app.

I then recreated the exact same models in a client-side console app to test. It basically does this to list out the products:

```csharp
try
{
    var config = new AppConfiguration("nrt-product-xkyox");
    _RealmAppProduct = App.Create(config);
    Console.WriteLine("Test Instance generated");

    var user = await _RealmAppProduct.LogInAsync(Credentials.Anonymous());
    if (!user.State.Equals(UserState.LoggedIn))
        Console.WriteLine($"User = null");
    else
        Console.WriteLine("Authenticated");

    Console.WriteLine($"Instance of PRODUCT REALM Generated");

    var _partition = "6310fa126afd4bc77f5517e4";
    var partition_config = new PartitionSyncConfiguration(_partition, user);
    Console.WriteLine($"Test user Generated");

    _Realm_Test = await Realm.GetInstanceAsync(partition_config);
    Console.WriteLine($"Waiting for download");

    await _Realm_Test.SyncSession.WaitForDownloadAsync();
    Console.WriteLine($"Test Downloaded");

    var test = _Realm_Test.All<Product>();
    foreach (var s in test)
    {
        Console.WriteLine(s.Descript);
    }

    Console.ReadLine();

    // Read Sales data
}
catch (Exception ex) { Console.WriteLine($"InitializeRealm Exception\n{ex.Message}\n\n{ex.InnerException}"); }
```

Clearly I’m missing something, because this just doesn’t work and I don’t understand what’s happening. Note that I commented out the ProdType property to try to simplify. With it in, I get a worse error that makes no sense to me.

Without it, I keep being told that my partition key has been made optional. Why would that be optional?

```
Realms.Exceptions.RealmException: The following changes cannot be made in additive-only schema mode:
- Property 'Product._partition' has been made optional.
```

Thinking about it logically, shouldn’t that be “required”? Either way, it won’t work, despite the data being downloaded into the local Realm DB. It’s just inaccessible, I guess?
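One possible reading of that error, offered as a sketch rather than a confirmed fix: for classes that inherit from `RealmObject`, Realm .NET (in the versions I’m aware of) treats a `string` property as optional unless it carries the `[Required]` attribute. If the server-side schema lists `_partition` as required, the two schemas would disagree in exactly this way. The trimmed model below assumes that is the case. It also assumes the price list is meant to be persisted, which Realm .NET expects as a getter-only `IList<T>` rather than an array with a custom getter and setter. The property names `Id` and `Partition` are illustrative renames, and only a few of the fields are shown.

```csharp
using System.Collections.Generic;
using MongoDB.Bson;
using Realms;

// Sketch only: assumes the server-side schema lists "_partition" as required.
public class Product : RealmObject
{
    [PrimaryKey]
    [MapTo("_id")]
    public ObjectId Id { get; set; } = ObjectId.GenerateNewId();

    // Without [Required], a string property is declared optional in the local schema.
    [Required]
    [MapTo("_partition")]
    public string Partition { get; set; } = "6310fa126afd4bc77f5517e4";

    [MapTo("descript")]
    public string Descript { get; set; }

    // To-many properties are declared as a getter-only IList<T>;
    // Realm supplies the backing list itself.
    [MapTo("price_list")]
    public IList<Price> PriceList { get; }
}

public class Price : EmbeddedObject
{
    [MapTo("primary")]
    public bool Primary { get; set; }

    [MapTo("charge")]
    public double? Charge { get; set; }
}
```

Whether `[Required]` is the right choice depends on what the schema in the App Services UI actually says for `_partition`. If the field is not listed as required there, adding the attribute would likely produce the mirror-image complaint.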

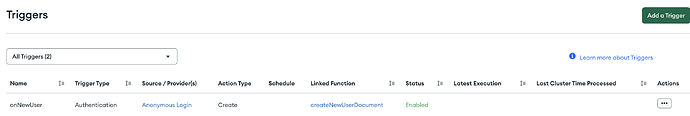

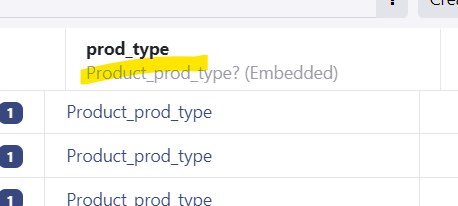

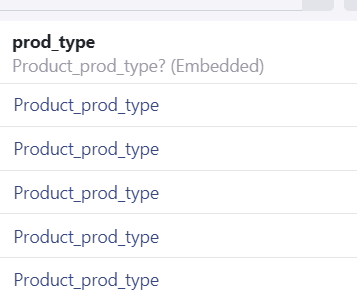

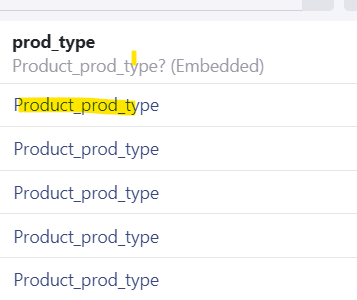

Even when you look at it in Realm Studio, it’s decided to convert my object into something else.

That was before I commented the prod_type property out.

I don’t even know what to ask yet. I was ambitious and had all my collections (Sales, Employees, Product) set out and figured “how hard could it be?” Match up some schemas, hack a little, and it should be workable even if it takes a bit to figure out, right? I’m no further along than when I started, and I don’t know if this thing has any stability at all. Maybe all you can do is some basic primitives and that’s it? That would be pointless.

My original plan was three collections with one App Service for each. That was a cluster.

I thought maybe it was because I wanted my console app to use the driver and Realm in the same app (I expected some threading issues even though I loaded Nito). That was the “design”:

- Start with batching the data into Atlas.
- Sync the local realms (simple partitions to start).
- Simple CRUD on the realms.
- All that in a service (a console app to start dev).

Except you can’t do that easily. The errors are abundant and, at this point at least, make little sense.

Can you use the same model to load Atlas and for the Realm CRUD? I realize it inherits from RealmObject, but what’s happening in the background? It certainly loads Atlas fine, but on the other side it just fails and complains, and then reports things in other object models, etc.

So I thought I’d separate the load from the Realm CRUD operations, as above. All that’s supposed to do is read the realm.

So it seems Realm is doing some reflection and failing, but I’m not sure what it’s actually doing.

The expectation was: monitor a data source and do operations on the realms.

Not sure where/why this seems insurmountable?

So, onward!!
