Hi folks,

So this is a hot topic, and one that many people overthink. How do you manage the database shutdown? How do you manage the daunting task of upgrading from, say, 4.0 to 6.0? Or 4.2 to 5.0.15? The answers are actually simpler and easier than you realize.

This weekend I’ve been working with a megachurch and a few techie teens to conduct an upgrade/migration (so far we’ve already successfully moved over 1 TB of data with this process alone). They don’t even realize how the scale of what they’re accomplishing compares to the corporate sector.

Imagine being 14 and 16 years old, respectively, and literally putting on your resume that you built an enterprise-grade Kubernetes/Docker infrastructure with microservices and even initiated the automation necessary to let a lot of issues self-repair. How many engineers in their 20s can say they’ve done that? That’s how easy the methods I will go over in this series are: you can literally hand them over to high school kids to handle.

By megachurch, I mean this branch of churches literally has more than 8,000 churches across North and South America. So you can imagine the data needs are extremely diverse, and it’s very scary to think all of this was on a single Dell PowerEdge R640 until today. Now it’s going across 20 Mac Studios in replicated clusters that will all house the same data from one location to the next, with VPNs going to the nearest Mac Studio to connect its services.

In a future guide I will go over how to use Docker and Kubernetes to build microservice infrastructure using Node.js or C#, and how to configure servers and the like to handle a lot of what you see in enterprise infrastructures, all of which you can build at home on your own laptop if you’d like. I always recommend learning how to use Git/GitHub/GitLab etc., as it’s great for storing your scripts and pulling them from anywhere.

The three methods that will be covered in this short series:

- JSON Dump and Upload - Almost Zero risk of data loss/shutdown

- Apollo GraphQL API - Almost Zero risk of data loss/shutdown

- HTTPS Service - Almost Zero risk of data loss/shutdown

For this migration, once the first Mac Studio is configured, has all of the data on it, and is in production and fully up to date, we will use an Apollo GraphQL server and API to send the data to the other Mac Studios remotely without ever shutting anything down. Literally zero downtime, even with this first method, JSON Dump and Upload.

A JSON Dump and Upload is just the simple process of making batches of JSON files exported from your MongoDB database(s). Whether you’re “upgrading” from a lesser MongoDB database, or wanting to migrate to a new cluster, this method handles both and allows you to keep the old in production until you’re certain your new cluster is ready.
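If a collection is too big for one file, the "batches" idea can be sketched with mongoexport's `--sort`, `--skip` and `--limit` flags. This is only a rough sketch: the function name `planBatches`, the file naming pattern, and the batch size are my own placeholders, and you would get the document count from the database first. Keep in mind `--skip` gets slower the deeper it goes into a big collection, so treat this as a starting point.

```javascript
// Plan fixed-size export batches for one collection.
// planBatches, the file pattern and batchSize are hypothetical choices,
// not something mongoexport provides. totalDocs would come from a count
// on the source collection.
function planBatches(collection, totalDocs, batchSize) {
  if (!Number.isInteger(totalDocs) || totalDocs < 0) {
    throw new RangeError('totalDocs must be a non-negative integer');
  }
  if (!Number.isInteger(batchSize) || batchSize <= 0) {
    throw new RangeError('batchSize must be a positive integer');
  }
  const batches = [];
  for (let skip = 0, n = 0; skip < totalDocs; skip += batchSize, n += 1) {
    const limit = Math.min(batchSize, totalDocs - skip);
    batches.push({
      file: `${collection}.${String(n).padStart(4, '0')}.json`,
      // Sorting on _id keeps the batch boundaries stable between runs.
      args: [
        '--collection', collection,
        '--sort', '{"_id":1}',
        '--skip', String(skip),
        '--limit', String(limit),
      ],
    });
  }
  return batches;
}

// Example: 2,500 documents in batches of 1,000 gives three files.
const plan = planBatches('users', 2500, 1000);
console.log(plan.map((b) => b.file));
```

Each entry's `args` would be appended to the usual `--db` and `--out` options when calling mongoexport.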

By the way, don’t tell your boss it’s this easy to do these things, you might give them a heart attack when they realize how much they paid a third party to do this before… It also makes you look like “The Man”, considering how “daunting” the task sounds and looks. On paper…

WHAT YOU’RE GOING TO NEED:

- Knowledge of MongoDB’s CLI tools

- Compass

- Nintendo Switch gaming console (you’ll need this, you’ll see), or an iPad/iPad Pro, or an Android tablet. If you’re going to use a tablet, at least a 9-inch screen is recommended. I personally use an 11-inch iPad Pro with M1.

- MacBook or Linux laptop (just personal preference, I just dislike using Windows)

- Knowledge of Docker/Kubernetes and how microservices are put together

- Network drives, portable SSDs, or a direct network connection to the device hosting your new clusters. Or, if it’s all going to stay on the same device, enough storage to hold the JSON files too (which also double as data backups, mind you).

In this process we are going to decommission the R640 after the first Mac Studio is set up and good to go. The following is what’s happened/happening so far:

Right now it’s a 6-node 4.0 cluster, 6.2TB in size, with multiple collections, and it’s going to be split up: each collection (about 19 or 20 in total) will get its own 3-node sharded cluster on 6.0. This not only “upgrades” the efficiency of the clusters, it also moves everything to the latest MongoDB version with almost zero risk. I think it’s about time this monolithic setup gets cut down for efficiency’s sake.

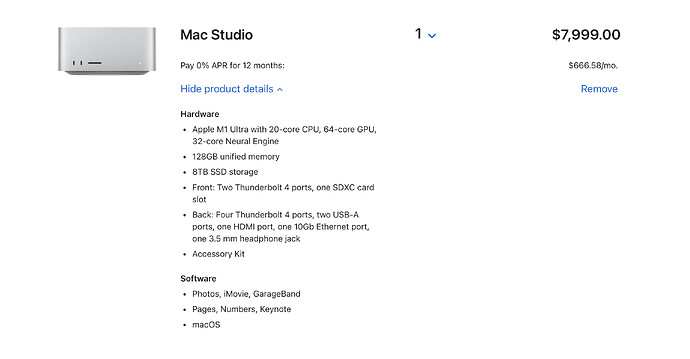

The studio has the following hardware:

And then for network failover on the 10Gb port, we also have four [USB Thunderbolt to Ethernet Dongle](https://www.amazon.com/Ethernet-Adapter-uni-Thunderbolt-Compatible/dp/B077KXY71Q/ref=pd_bxgy_img_sccl_2/143-0112208-9695823?pd_rd_w=EU6xe&content-id=amzn1.sym.6ab4eb52-6252-4ca2-a1b9-ad120350253c&pf_rd_p=6ab4eb52-6252-4ca2-a1b9-ad120350253c&pf_rd_r=5KYEEA346EMJX8M3WBMP&pd_rd_wg=mnO7l&pd_rd_r=68d7c42e-f1fb-45ce-93de-e4bcdef962cc&pd_rd_i=B077KXY71Q&psc=1) adapters, and all 4 are running to a 48-port switch alongside the 10Gb port already on the Studio.

More than capable of handling this, with some room for more storage. After this is all completed, all of the other Mac Studios will be configured the same, except they will have their data migrated via the Apollo GraphQL services we have installed. I’ll cover that in another tutorial.

NOTE: Always be sure to plan out what you’re doing. Export indexes and configs to the new Docker/Kubernetes microservices first, iron out any new dependencies for the 6.0 MongoDB DBs, and set up their replication/shards, and this will go extremely smoothly. So far it has gone so smoothly that after over a TB, with more coming, there haven’t been any problems at all. Just make sure all networking, firewall, and server configs are in place and so on. (Use common sense.)
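For the "export indexes first" step, here is a minimal sketch of one way to carry index definitions across. It assumes you read them from the old cluster with the Node driver's `collection.listIndexes().toArray()` and hand the cleaned-up list to `collection.createIndexes()` on the new one. The helper name `toCreateIndexSpecs` is made up for illustration.

```javascript
// Turn listIndexes() output into specs suitable for createIndexes().
// toCreateIndexSpecs is a hypothetical helper name, not a driver API.
function toCreateIndexSpecs(listIndexesOutput) {
  return listIndexesOutput
    // The _id index is created automatically on every collection.
    .filter((idx) => idx.name !== '_id_')
    // "v" (index version) and "ns" (namespace, reported by older servers)
    // describe the old deployment, so leave them out and let the new
    // server pick its own values.
    .map(({ v, ns, ...spec }) => spec);
}

// Example input shaped like what a 4.0 server reports.
const oldIndexes = [
  { v: 2, key: { _id: 1 }, name: '_id_', ns: 'app.users' },
  { v: 2, key: { email: 1 }, name: 'email_1', unique: true, ns: 'app.users' },
];
console.log(toCreateIndexSpecs(oldIndexes));
```

Creating the indexes on the empty target before importing means the import pays the indexing cost as it goes; whether you prefer that or building indexes afterwards is a judgment call for your data.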

For information of

Method 1: the fastest, simplest, least complicated (especially perfect for teenagers, and it’s next to impossible to mess it up)

mongoexport --db <database-name> --collection <collection-name> --out output.json

On the machine connecting to it:

mongoimport --db <database-name> --collection <collection-name> --file output.json

This will export the collection to a JSON file. Note that mongoexport always writes JSON (or CSV) no matter what extension you put on the file, so naming it .bson does not make it BSON. Types like Date and Binary still make it across because the output is MongoDB Extended JSON, and adding --jsonFormat=canonical keeps the most type detail. If you want real BSON dumps, that is what mongodump and mongorestore are for. In this case each file is going to its own sharded cluster (each collection to its own sharded cluster, because these are big collections).
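To see why type information matters in the exported file, here is a small hand-rolled illustration of how a Date looks in canonical Extended JSON compared to plain JSON. The two helper functions are hypothetical and only for demonstration; the real tools and the driver's `bson` package handle this for you.

```javascript
// Canonical Extended JSON represents a Date as
// {"$date": {"$numberLong": "<milliseconds since epoch>"}}.
// These two helpers are illustrative only, not a library API.
function toCanonicalDate(date) {
  return { $date: { $numberLong: String(date.getTime()) } };
}

function fromCanonicalDate(ejson) {
  return new Date(Number(ejson.$date.$numberLong));
}

const doc = { createdAt: new Date('2023-01-15T12:00:00Z') };

// Plain JSON turns the Date into a string, so the type is lost on re-read.
const plain = JSON.parse(JSON.stringify(doc));
console.log(typeof plain.createdAt);

// The Extended JSON wrapper survives the round trip and can be turned
// back into a real Date.
const typed = JSON.parse(JSON.stringify({ createdAt: toCanonicalDate(doc.createdAt) }));
const restored = fromCanonicalDate(typed.createdAt);
console.log(restored instanceof Date);
```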

After you initiate each export and take the file over to the new cluster to ingest, it takes some time. Remember that Nintendo Switch or iPad? This is where it comes in handy.

- Power on the iPad or Switch

- If on Switch, once at the home screen, select your game of choice. I prefer Pokémon Scarlet myself.

- If on tablet, connect to WiFi or a hotspot, open the YouTube or Netflix app, and pick a good movie, as these tasks can take some time. Unless you’ve got other work to do, but in my case there isn’t much, since I automated a lot of the infra/architecture to just build itself as necessary. (10+ years in InfoSecDevOps)

Method 2

For over the network:

mongoexport -d <database> -c <collection_name> --out <output.json> --pretty --host <host> --port <port> --username <user> --authenticationDatabase admin

You can use --pretty to make the file easier to read if you want to look through it and check it. Note that the output is always JSON here too: mongoexport does not write BSON. Types that plain JSON doesn’t have, like Binary and Date, are written as Extended JSON, so if your data relies on them, I recommend adding --jsonFormat=canonical to keep the full type detail (or going with mongodump/mongorestore if you want real BSON).
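If you end up running the over-the-network export and import for many collections, building the argument lists in one place keeps them consistent. A minimal sketch, assuming the `--host`/`--port` style from the command above; the function and option names (`exportArgs`, `importArgs`, `outFile`, `inFile`) are placeholders of mine, and authentication flags are left out for brevity.

```javascript
// Build argument arrays for mongoexport and mongoimport.
// exportArgs/importArgs and their option names are hypothetical helpers.
function exportArgs({ host, port, db, collection, outFile }) {
  return [
    '--host', host,
    '--port', String(port),
    '--db', db,
    '--collection', collection,
    // Canonical Extended JSON keeps the most type detail in the file.
    '--jsonFormat=canonical',
    `--out=${outFile}`,
  ];
}

function importArgs({ host, port, db, collection, inFile }) {
  return [
    '--host', host,
    '--port', String(port),
    '--db', db,
    '--collection', collection,
    `--file=${inFile}`,
  ];
}

// Example: same collection, old host out, new host in.
const common = { db: 'app', collection: 'users' };
console.log(exportArgs({ ...common, host: 'old-host', port: 27017, outFile: 'users.json' }).join(' '));
console.log(importArgs({ ...common, host: 'new-host', port: 27017, inFile: 'users.json' }).join(' '));
```

The arrays can be passed straight to `child_process.spawn('mongoexport', args)` or joined into a shell command.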

Next is the script I haven’t shown the kids yet, because you know, I can’t make things easy all the way. This is an easy migration from on-premise to on-premise, on-premise to Atlas, or Atlas to Atlas…. Hint… (You’re welcome)

Method 3, the Node.JS Script:

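    #!/usr/bin/env node
    // Export every collection in DB_NAME to its own JSON file with mongoexport.
    // Needs the "mongodb" driver installed and mongoexport on the PATH.
    // Run it as an ES module (for example, save it as export.mjs).
    import { MongoClient } from 'mongodb';
    import { spawn } from 'child_process';
    import fs from 'fs';
    import path from 'path';

    const DB_URI = 'mongodb://0.0.0.0:27017';
    const DB_NAME = 'DB name goes here';
    const OUTPUT_DIR = 'directory output goes here';

    const client = new MongoClient(DB_URI);

    // spawn() returns a ChildProcess, not a promise, so wrap it to make it awaitable.
    function exportCollection(name) {
      return new Promise((resolve, reject) => {
        // mongoexport connects to localhost:27017 by default;
        // add --host/--port here if DB_URI points somewhere else.
        const child = spawn('mongoexport', [
          '--db', DB_NAME,
          '--collection', name,
          '--jsonArray', // remember to pass --jsonArray to mongoimport as well
          '--pretty',
          `--out=${path.join(OUTPUT_DIR, name + '.json')}`,
        ], { stdio: 'inherit' });
        child.on('error', reject);
        child.on('close', (code) => {
          if (code === 0) resolve();
          else reject(new Error(`mongoexport exited with code ${code} for ${name}`));
        });
      });
    }

    async function run() {
      try {
        await client.connect();
        const db = client.db(DB_NAME);
        const collections = await db.collections();
        fs.mkdirSync(OUTPUT_DIR, { recursive: true });
        // Export one collection at a time. forEach would not wait for the
        // async callbacks, so the client could close before the exports finish.
        for (const c of collections) {
          await exportCollection(c.collectionName);
        }
        console.log(`DB Data for ${DB_NAME} has been written to ${OUTPUT_DIR}/`);
      } finally {
        await client.close();
      }
    }

    run().catch(console.dir);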
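Before you decommission anything, it's worth checking that the new cluster actually has what the old one had. A rough sketch of a per-collection count comparison, assuming you have already collected the counts from both sides (for example with `countDocuments()` in the Node driver) into plain objects; the function name `diffCounts` and the sample numbers are hypothetical. Matching counts are a sanity check, not proof that every document is identical.

```javascript
// Compare per-collection document counts from the old and new clusters.
// diffCounts is a hypothetical helper; the inputs are plain objects of
// the form { collectionName: count }.
function diffCounts(source, target) {
  const names = new Set([...Object.keys(source), ...Object.keys(target)]);
  const mismatches = [];
  for (const name of [...names].sort()) {
    // A collection missing on one side counts as zero documents there.
    const s = source[name] ?? 0;
    const t = target[name] ?? 0;
    if (s !== t) {
      mismatches.push({ collection: name, source: s, target: t });
    }
  }
  return mismatches;
}

// Example with made-up numbers: one collection is short on the target.
const report = diffCounts(
  { members: 120000, events: 4500 },
  { members: 120000, events: 4498 },
);
console.log(report.length === 0 ? 'counts match' : report);
```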

I’ll add the GraphQL instructions and installation steps for migrating data from on-prem to Atlas or on-prem to on-prem, plus how to show off to MongoDB product managers by going from Atlas to AWS or AWS to Atlas.

I’ll also add an instructions and methods guide for the HTTPS service.

I just hope this simplifies and makes your migrations and upgrades a lot less daunting and dramatic.