Demystifying Sharding with MongoDB

Sharding is a critical part of modern databases, yet it is also one of the most complex and least understood. At MongoDB World 2022, sharding software engineer Sanika Phanse presented Demystifying Sharding in MongoDB, a brief but comprehensive overview of the mechanics behind sharding.

Read on to learn about why sharding is necessary, how it is executed, and how you can optimize the sharding process for faster queries.

Watch this deep-dive presentation on the ins and outs of sharding, featuring MongoDB sharding software engineer Sanika Phanse.

What is sharding, and how does it work?

In MongoDB Atlas, sharding is a way to horizontally scale storage and workloads in the face of increased demand by splitting them across multiple machines. In contrast, vertical scaling means upgrading a single machine with more powerful hardware, such as faster CPUs or more RAM.

Once you’ve hit the capacity of what your servers can support, sharding becomes your solution. Past a certain point, vertical scaling requires teams to spend significantly more time and money to keep pace with demand. Sharding, however, spreads data and traffic across your servers, so it’s not subject to the same physical limitations.

Theoretically, sharding could enable you to scale infinitely, but, in practice, you are scaling proportionally to the number of servers you add. Each additional shard increases both storage and throughput, so your servers can simultaneously store more data and process more queries.

How do you distribute data and workloads across shards?

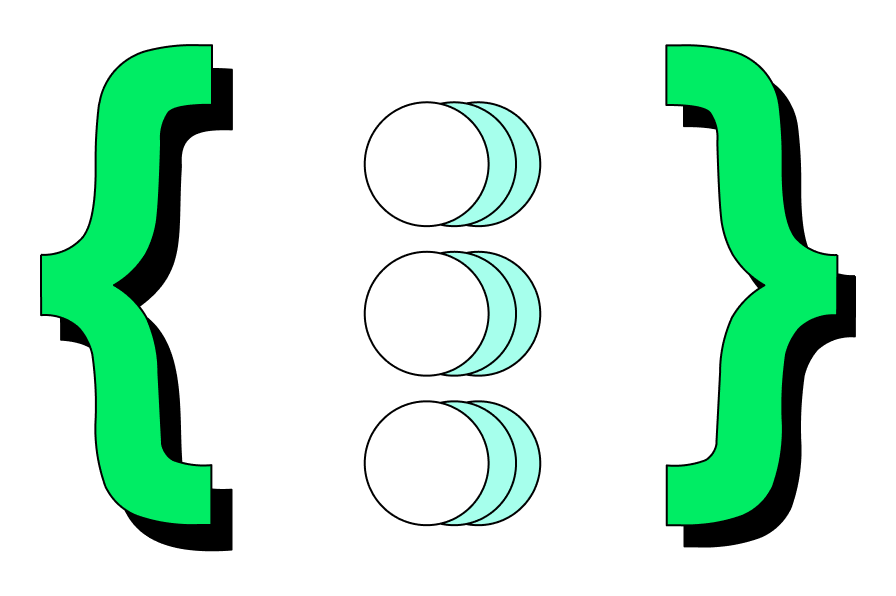

At a high level, sharding data storage is straightforward. First, a user must specify a shard key, or a subset of fields to partition their data by. Then, data is migrated across shards by a background process called the balancer, which ensures that each shard contains roughly the same amount of data.

Once you specify what your shard key will be, the balancer will do the rest. A common form of distribution is ranged sharding, which assigns each shard a contiguous range of shard key values. Using this approach, one shard will contain all the data with shard keys from 0 to 99, the next will contain 100 to 199, and so forth.
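The range lookup described above can be sketched in a few lines of Python. This is only an illustration of the idea, not MongoDB's implementation: the boundaries, the shard names, and the `shard_for_key` helper are all hypothetical, and the last range is assumed to be open-ended.

```python
from bisect import bisect_right

# Hypothetical layout: shard0 holds keys 0-99, shard1 holds 100-199,
# and shard2 holds everything from 200 upward.
# Each entry is the inclusive lower bound of the range owned by a shard.
LOWER_BOUNDS = [0, 100, 200]
SHARD_NAMES = ["shard0", "shard1", "shard2"]


def shard_for_key(key: int) -> str:
    """Return the name of the shard whose range contains `key`."""
    if key < LOWER_BOUNDS[0]:
        raise ValueError(f"key {key} is below the lowest range")
    # bisect_right finds the first lower bound greater than the key;
    # the range that owns the key is the one just before it.
    index = bisect_right(LOWER_BOUNDS, key) - 1
    return SHARD_NAMES[index]


print(shard_for_key(42))   # shard0
print(shard_for_key(100))  # shard1
```

Keeping the boundaries sorted means a lookup is a binary search rather than a scan over every range.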

In theory, sharding workloads is also simple. For example, if a single server receives 1,000 queries per second, sharding the workload across two servers would split that traffic evenly, so each server receives 500 queries per second.

However, these ideal conditions aren’t always attainable, because workloads aren’t always evenly distributed across shards. Imagine a group of 50,000 students whose grades are split between two shards. If half of them decide to check their grades, and all of their records happen to fall in the same shard key range, then all of the requested data is stored on the same shard. As a result, all the traffic will be routed to that one shard server.

Note that both of these examples are highly simplified; real-world situations are not as neat. Shards won’t always hold evenly sized ranges of shard key values, because data might not be evenly divided across shards. Additionally, 50,000 student records, while it sounds large, is still far too small a data set to need a sharded cluster.
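To make the student example concrete, here is a small simulation of the two traffic patterns. It assumes student IDs run from 0 to 49,999 and that the two shards are split at ID 25,000; both the split point and the helper names are invented for illustration.

```python
from collections import Counter

STUDENTS = 50_000
SPLIT_POINT = 25_000  # hypothetical: IDs below this value live on shard0


def shard_for_student(student_id: int) -> str:
    """Ranged placement across two shards."""
    return "shard0" if student_id < SPLIT_POINT else "shard1"


def traffic_per_shard(student_ids) -> Counter:
    """Count how many grade lookups each shard would receive."""
    return Counter(shard_for_student(s) for s in student_ids)


# Half the students, spread evenly over the whole ID space.
even_traffic = traffic_per_shard(range(0, STUDENTS, 2))

# Half the students, all from the lower ID range.
skewed_traffic = traffic_per_shard(range(0, SPLIT_POINT))

print(even_traffic)
print(skewed_traffic)
```

Both runs issue 25,000 lookups, but only the first spreads them across both shards; in the second, shard1 receives nothing.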

How do you map and query sharded data?

Without an elegant solution, users may encounter latency or failed queries when they try to retrieve sharded data. The challenge is to tie together all your shards, so it feels like you’re communicating with one database, rather than several.

This solution starts with the config server, which holds metadata describing the sharded cluster, as well as the most up-to-date routing table, which maps shard keys to shard connection strings. To increase efficiency, routers regularly contact the config server to create a cached copy of this routing table. Nonetheless, at any given point in time, the config server’s version of the routing table can be considered the single source of truth.

To query sharded data, your application sends your command to the team of routers. After a router picks up the command, it will then use the shard key from the command’s query, in conjunction with its cached copy of the routing table, to direct the query to the correct location. Rather than using the entire document, the user will only select one field (or combination of fields) to serve as the shard key.

The query then makes its way to the correct shard, which executes the command and returns the result to the router.
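The relationship between the config server and a router's cached routing table can be modeled roughly as follows. This is a toy sketch under simplifying assumptions: the `ConfigServer` and `Router` classes, the `move_boundary` method, and the explicit `refresh` call are hypothetical stand-ins, not MongoDB APIs.

```python
from bisect import bisect_right


class ConfigServer:
    """Holds the authoritative routing table (a simplified, hypothetical model)."""

    def __init__(self):
        self.version = 1
        # Inclusive lower bound of each range, paired by position with a shard.
        self.lower_bounds = [0, 100]
        self.shards = ["shard0", "shard1"]

    def routing_table(self):
        # Hand out copies so each router keeps its own cached snapshot.
        return self.version, list(self.lower_bounds), list(self.shards)

    def move_boundary(self, index, new_lower_bound):
        # Simulate a change in which keys a shard owns.
        self.lower_bounds[index] = new_lower_bound
        self.version += 1


class Router:
    def __init__(self, config_server):
        self.config_server = config_server
        self.refresh()

    def refresh(self):
        """Replace the cached routing table with the config server's copy."""
        self.version, self.lower_bounds, self.shards = (
            self.config_server.routing_table()
        )

    def target(self, shard_key):
        """Pick the shard that owns `shard_key`, using only the cached table."""
        if shard_key < self.lower_bounds[0]:
            raise ValueError("shard key is below the lowest range")
        return self.shards[bisect_right(self.lower_bounds, shard_key) - 1]


config = ConfigServer()
router = Router(config)
before = router.target(75)   # cached table: 75 falls in the first range

config.move_boundary(1, 50)  # config server now says shard1 starts at 50
stale = router.target(75)    # the router has not refreshed its cache yet
router.refresh()
after = router.target(75)    # refreshed cache matches the config server

print(before, stale, after)  # shard0 shard0 shard1
```

The stale lookup in the middle shows why the config server's copy, rather than any router's cache, is treated as the source of truth.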

Operations aren’t always so simple, especially when queries do not specify the shard key. In this case, the router has no way of knowing which shard holds your data. It therefore sends the query to all the shards and waits to gather every response before returning a result to the application.

Although this kind of broadcast query can be slow if you have many shards, it might not pose a problem if it runs infrequently.
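The difference between a targeted query and a broadcast query can be illustrated with a toy in-memory cluster. The documents, the split point of 100, and the `find` helper below are all invented for this sketch; a real router does considerably more work.

```python
# Two hypothetical shards, each holding a list of documents.
SHARDS = {
    "shard0": [{"student_id": 7, "name": "Ada", "grade": 91}],
    "shard1": [{"student_id": 120, "name": "Grace", "grade": 88}],
}
SPLIT_POINT = 100  # student_id values below this live on shard0


def find(query):
    """Return (matching documents, names of the shards contacted)."""
    if "student_id" in query:
        # The shard key is present, so exactly one shard is contacted.
        owner = "shard0" if query["student_id"] < SPLIT_POINT else "shard1"
        targets = [owner]
    else:
        # No shard key: the query has to go to every shard.
        targets = list(SHARDS)
    results = [
        doc
        for name in targets
        for doc in SHARDS[name]
        if all(doc.get(field) == value for field, value in query.items())
    ]
    return results, targets


targeted_docs, targeted_shards = find({"student_id": 120})
broadcast_docs, broadcast_shards = find({"name": "Ada"})

print(targeted_shards)   # ['shard1']
print(broadcast_shards)  # ['shard0', 'shard1']
```

Both queries return a single document, but the second one touches every shard to find it, and that cost grows with the number of shards.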

How do you optimize shards for faster queries?

Shard keys are critical for seamless operations. When selecting a shard key, use a field that is present in all (or most) of your documents and has high cardinality. High cardinality ensures granularity among shard key values, which allows the data to be distributed evenly across shards. Additionally, your data can be resharded as needed, to fit changing requirements or to improve efficiency.

Users can also accelerate queries with thoughtful planning and preparation, such as optimizing their data structures for the most common, business-critical query patterns. For example, if your workload makes lots of age-based queries and few _id-based queries, then it might make sense to shard data by age so that more queries can be targeted to a single shard.

Hospitals are a good example, as they pose unique challenges. Assuming that a hospital’s patient documents contain fields such as insurance, _id value, and first and last names, which of these values would make sense as a shard key?

Patient name is one possibility, but it is not unique, as many people might have the same name. Similarly, insurance can be eliminated, because there are only a handful of insurance providers, and people might not even have insurance. This key would violate both the high-cardinality principle and the requirement that every document has this value filled.

The best candidate for shard key would be the patient ID number or _id value. After all, if one patient visits, that does not indicate whether another patient will (or will not) visit. As a result, the uniqueness of the _id value will be very useful, as it will enable users to make targeted queries to the one document that is relevant to the patient.
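The reasoning above can be checked against a small sample of data before committing to a shard key. The sketch below profiles candidate fields by coverage (how many documents contain the field) and cardinality (how many distinct values it has). The sample patients, the field names, and the `profile` helper are made up for illustration.

```python
# A tiny, invented sample of patient documents.
patients = [
    {"_id": 1, "first_name": "Ana", "last_name": "Silva", "insurance": "Acme Health"},
    {"_id": 2, "first_name": "Ana", "last_name": "Silva", "insurance": "Acme Health"},
    {"_id": 3, "first_name": "Raj", "last_name": "Patel"},
    {"_id": 4, "first_name": "Mei", "last_name": "Chen", "insurance": "Beta Care"},
]


def profile(docs, fields):
    """Report coverage and cardinality for a candidate shard key."""
    # Only documents that contain every field of the candidate key count.
    present = [
        tuple(doc[f] for f in fields)
        for doc in docs
        if all(f in doc for f in fields)
    ]
    return {
        "coverage": len(present) / len(docs),
        "distinct": len(set(present)),
    }


id_profile = profile(patients, ["_id"])
name_profile = profile(patients, ["first_name", "last_name"])
insurance_profile = profile(patients, ["insurance"])

print(id_profile)         # {'coverage': 1.0, 'distinct': 4}
print(name_profile)       # {'coverage': 1.0, 'distinct': 3}
print(insurance_profile)  # {'coverage': 0.75, 'distinct': 2}
```

Even in this four-document sample, only _id is both present everywhere and distinct for every patient.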

Faced with repeating values, users can also create compound shard keys instead. By combining multiple fields, such as the _id value, patient name, and provider, a compound shard key can help reduce query bottlenecks and latency.
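One way to see why a compound key helps with repeating values: documents that share the same shard key value cannot be separated from one another, so a low-cardinality key produces large groups that must stay together. The sketch below compares the smallest possible groups for a single-field key and a compound key. The sample data and the `indivisible_groups` helper are hypothetical.

```python
from itertools import groupby

# Invented sample: six patients named Silva and two named Patel.
patients = [
    {"_id": i, "last_name": "Silva" if i < 6 else "Patel"} for i in range(8)
]


def indivisible_groups(docs, fields):
    """Sizes of the document groups that share one shard key value.

    Documents in the same group have identical key values, so no range
    boundary can fall between them.
    """

    def key_of(doc):
        return tuple(doc[f] for f in fields)

    ordered = sorted(docs, key=key_of)
    return [len(list(group)) for _, group in groupby(ordered, key=key_of)]


single = indivisible_groups(patients, ["last_name"])
compound = indivisible_groups(patients, ["last_name", "_id"])

print(single)    # [2, 6]
print(compound)  # [1, 1, 1, 1, 1, 1, 1, 1]
```

With last_name alone, the six Silva documents must live together; adding _id as a second field makes every key value unique, so the data can be split wherever it is needed.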

Ultimately, sharding is a valuable tool for any developer, as well as a cost-effective way to scale out your database capacity. Although it may seem complicated at first glance, sharding (and working effectively with sharded data) can be very intuitive with MongoDB.

To learn more about sharding — and to see how you can set it up in your own environment — contact the MongoDB Professional Services team today.